I bought two Viking U20040 U.2 to 4X M.2 carriers, and I just wanted to add some notes for those considering getting these since I couldn't find much information about them online.

Top and bottom M.2 slots loaded with Inland 2280 1TB drives. Note the single capacitor visible in the top-left shot.

Size comparison with an HGST HUSMM8040ASS20 on the left and an Intel DC S3520 on the right.

PCIe Topology

- These carriers can only accept 2280 M.2 drives.

- It uses a PCIe switch: the Switchtec PM8531 PFX PCIe Gen 3 fanout switch.

- Being a U.2 carrier, only four PCIe Gen 3 lanes are connected to the switch.

- The switch provides two lanes to each M.2.

- The entire frame of the carrier is metal and acts as a heatsink.

- The carrier alone uses around 7-8 watts, measured at the wall with a Kill A Watt.

- The carrier can get very hot. Air flow is required.

- There's a management interface for the PCIe switch on the carrier, used to monitor statistics and temperature and to change configuration. To use it in Windows, you need to install the driver and the user tools. The driver isn't required for normal operation and isn't hard to find. I couldn't find precompiled user tools, though. I was able to compile the switchtec-user tools from the source on their GitHub, but they don't seem to work properly. If you would like to try compiling it, you can get the

- Apparently, there's a driver to enable dual-port support for HA applications. It partitions the carrier into two x2 links, allowing two different systems or CPUs to access it. I haven't been able to find more information or the driver itself.

- The brochure mentions it has PLP (power loss protection), but I don't think it does, at least not the carrier itself. They're very likely referring to the drives they would usually include with these carriers.

- You don't need to populate all M.2 slots, but you do need at least one. Otherwise, the management device reports an issue to Windows.

- Each drive is passed to the system independently as its own device. That makes sense, since this is a switch, not an HBA. Just to be clear, there is no built-in RAID.

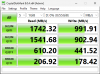

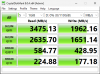

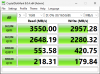

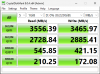

I did some quick benchmarks using CrystalDiskMark with two different brands and configs.

(4)

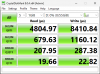

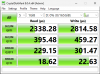

Optane p1600x 58gb

With a single drive, sequential reads came very close to the limit of the two PCIe Gen 3 lanes provided to it, and sequential writes hit the limit of the drive itself. Random reads and writes were excellent as well, but that's just Optane flexing its muscle.

Adding a 2nd drive in a stripe doubled sequential reads and writes. Random reads and writes at higher queue depths improved slightly. No doubt the full 4 PCIe lanes are being used in this case.

Adding the 3rd drive didn't do much for sequential reads, as expected given the 4 PCIe lanes on the U.2 interface. Sequential writes saw another decent boost. Random reads and writes saw very little change; they are within margin of error.

Adding the 4th drive pretty much maxed out the bandwidth of the x4 interface for both sequential reads and writes. Again, random reads and writes saw very little difference. In fact, random Q1T1 barely changed at all across the 4 tests.
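To put rough numbers on why reads stop scaling after two drives, here is a minimal sketch of the theoretical ceilings. It assumes the standard PCIe Gen 3 figures (8 GT/s per lane, 128b/130b encoding) and ignores protocol overhead, so real benchmark results will land somewhat below these values. The function names are just for illustration. It also only models the link, not the drives, which is why the Optane writes kept climbing with the 3rd and 4th drive: those were limited by the drives, not the lanes.

```python
# Rough ceiling model for a stripe behind the carrier's switch.
# Assumption: standard PCIe Gen 3 signalling (8 GT/s, 128b/130b encoding);
# packet/protocol overhead is ignored, so these are upper bounds only.

GEN3_GT_PER_S = 8.0      # gigatransfers per second, per lane
ENCODING = 128 / 130     # 128b/130b line encoding efficiency


def lane_gb_per_s() -> float:
    """Theoretical payload rate of one Gen 3 lane in GB/s."""
    return GEN3_GT_PER_S * ENCODING / 8  # bits -> bytes


def stripe_ceiling(drives: int, lanes_per_drive: int = 2, uplink_lanes: int = 4) -> float:
    """Upper bound in GB/s: the smaller of the drive-side and host-side links."""
    downstream = drives * lanes_per_drive * lane_gb_per_s()
    uplink = uplink_lanes * lane_gb_per_s()
    return min(downstream, uplink)


for n in range(1, 5):
    print(f"{n} drive(s): {stripe_ceiling(n):.2f} GB/s ceiling")
# 1 drive(s): 1.97 GB/s ceiling
# 2 drive(s): 3.94 GB/s ceiling
# 3 drive(s): 3.94 GB/s ceiling
# 4 drive(s): 3.94 GB/s ceiling
```

So one drive is capped by its x2 link, and from two drives onward the x4 U.2 uplink is the bottleneck.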

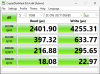

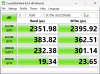

(4) Inland (Micro Center brand) 1TB using a Phison E12.

I striped all 4.

Sequential reads and writes pretty much maxed out the 4 PCIe lanes.

Random read and write speeds are normal. No anomalies were noticed.

Some interesting ideas on what to do with these carriers:

- Using an M.2 to U.2 adapter to have 4 SSDs on a cheap consumer board without using other slots.

- Putting four of them on a PCIe x16 card with bifurcation enabled to have 16 drives on a single PCIe slot.

- Or, like in my case, having eight drives on my Dell Precision T7820 using only the two front NVMe U.2 drive bays, leaving the PCIe slots available for other uses (I had to increase airflow on the HDD zone by 30% in the BIOS).
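The drive counts in these ideas follow from simple lane budgeting: every carrier takes an x4 host link and fans it out to four x2 slots. Below is a small sketch of that arithmetic; the helper name and its return keys are made up for illustration, and it assumes every carrier gets its own x4 link (for example through x4x4x4x4 bifurcation).

```python
# Lane budgeting for the ideas above.
# Assumptions: each carrier needs its own x4 host link and exposes
# four M.2 slots wired at x2 each, as described earlier in this post.

def carrier_budget(host_lanes: int, lanes_per_carrier: int = 4,
                   slots_per_carrier: int = 4, lanes_per_slot: int = 2) -> dict:
    """Return how many carriers and drives fit on a given number of host lanes."""
    carriers = host_lanes // lanes_per_carrier
    drives = carriers * slots_per_carrier
    used_host_lanes = carriers * lanes_per_carrier
    # Ratio of drive-side lanes to host-side lanes (2.0 means 2:1 oversubscribed).
    ratio = (drives * lanes_per_slot) / used_host_lanes if used_host_lanes else 0.0
    return {"carriers": carriers, "drives": drives, "oversubscription": ratio}


print(carrier_budget(4))    # single M.2-to-U.2 adapter
print(carrier_budget(8))    # two U.2 bays
print(carrier_budget(16))   # x16 slot bifurcated into four x4 links
```

In every case the drives share their host lanes 2:1, which is the density-for-bandwidth trade these carriers make.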

So far, I think the Viking U20040 is not bad if you're looking for density rather than performance. Sure, having dedicated PCIe lanes to each SSD would be excellent for performance, but that's not always possible or needed.

Dang, this post went longer than expected. Hope it helps others who, like me, were looking for more information about this carrier.