Well, thanks to the Great Deals section, I just picked up 4 x 8TB hard drives, and now I'm thinking about how to set them up in my home environment. I don't have a lot of content to store. Most of my home files are stored on a WS2012R2 Essentials server VM with two 6TB drives: one HDD holds the content and the other holds a backup of that content. I also back up to an external 5TB HDD and to online cloud storage. So as you can see, 32TB of raw storage is overkill for just storing my content.

On to my brainstorming.

I have 4 ESXi nodes, all with local storage. My Intel server has 8 x 3.5" HDD trays and 8 x 2.5" 7mm trays (only good for SSDs). The Dell server has 8 x 2.5" HDD trays. The Supermicro is in a PC case and can hold various 3.5"/2.5" HDDs. The fourth is just my HTPC acting as a node for testing, so it's super limited.


What I'd like to do is use the 4 x 8TB drives as main storage for home content (5-6TB) and then set up the rest of that storage pool for my VMs on the four ESXi nodes via NFS/iSCSI. Then I'd recycle the other HDDs I have into a backup pool for the home content and VMs (2 x 6TB RED 3.5", 3 x 3TB RED 3.5", plus various other HDDs). I'd also like to keep the design somewhat simple.
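To put rough numbers on that split, here's a quick Python sketch. The three layouts are just assumptions for comparison (I haven't picked one), the 6TB figure is the upper end of my home content, and the math ignores filesystem overhead and TB vs TiB differences.

```python
# Rough usable-capacity estimate for the 4 x 8TB pool.
# Layout names are illustrative assumptions, not a chosen design.
DRIVES = 4
SIZE_TB = 8
HOME_CONTENT_TB = 6  # upper end of the 5-6TB of home content


def usable_tb(drives, size_tb, layout):
    """Return approximate usable TB for a given redundancy layout."""
    if layout == "mirrored_pairs":   # RAID10-style: half the raw space
        return drives * size_tb // 2
    if layout == "single_parity":    # RAID5-style: one drive of parity
        return (drives - 1) * size_tb
    if layout == "double_parity":    # RAID6-style: two drives of parity
        return (drives - 2) * size_tb
    raise ValueError(f"unknown layout: {layout}")


def left_for_vms(drives, size_tb, layout, home_tb):
    """Space remaining for VM datastores after home content."""
    return usable_tb(drives, size_tb, layout) - home_tb


if __name__ == "__main__":
    for layout in ("mirrored_pairs", "single_parity", "double_parity"):
        total = usable_tb(DRIVES, SIZE_TB, layout)
        spare = left_for_vms(DRIVES, SIZE_TB, layout, HOME_CONTENT_TB)
        print(f"{layout}: {total} TB usable, {spare} TB left for VMs")
```

With those assumptions it works out to 16TB usable (10TB left for VMs) with mirrored pairs or double parity, and 24TB usable (18TB left) with single parity.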

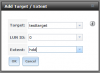

My thought is to put the 4 x 8TB drives in the Supermicro server, since it's always on and runs my home prod servers. It's limited, as it only has 2 PCIe slots and maxes out at 32GB RAM. Currently I have the onboard LSI card flashed to IT mode and passed through to the WS2012R2E server VM, with the 2 x 6TB RED drives attached. I was thinking of adding the 4 x 8TB drives to the LSI card, since it has 8 ports, and maybe adding 1-2 SSDs. Then I'd use WS to share the storage out to the ESXi nodes via iSCSI. I haven't researched this yet, but I think StarWind vSAN can do this, and it is free?

Any thoughts on doing the above on the Windows Server? If possible, I was planning to just use the two onboard NICs for VM traffic, replace the quad-NIC PCIe card with a 10Gb card, and plug it into my switch. The Dell and Intel are already hooked up with 10Gb. The 10Gb link would be how the storage is shared out.
