So I have always wanted a ZFS HA SAN for the house. A project at work introduced me to OSNexus QuantaStor, and to their amazing CEO/CTO Steven Umbehocker, who ended up handling my sales call one December a year or so ago while his sales staff was, I guess, off doing holiday stuff. After I told him about my personal setup, he let me know they have a free community edition of their full-featured product. It includes HA, FC, and scale-up and scale-out, and you can get up to 4 licenses to play with kinda however you want. Coming from 10+ years of TrueNAS there is a bit of a learning curve, but overall I am very happy with it. The community edition only supports 40TB of raw storage per license (more soon), but for home use that's probably more than I'll ever use given how I have things set up.

Anyway tl;dr, here is a look at my new ZFS HA SAN.

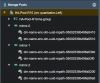

I am using a SuperMicro SBB 2028R-DE2CR24L. It is a dual-node server where both nodes have access to all 24 front SAS bays, and there is even room for SAS expansion out the back. I replaced the stock 10G NICs with 25G Broadcom P225P cards.

I am using six Samsung PM1643 3.84TB SAS SSDs for the pool.
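For a sense of how this pool sits against the community edition's 40TB limit, here is a quick capacity sketch. It assumes decimal TB for both the drives and the license cap. The post doesn't say which vdev layout the pool uses, so the mirror/RAIDZ rows are hypothetical comparisons only, and they ignore ZFS metadata, padding, and slop space.

```python
# Rough capacity check: 6 x Samsung PM1643 3.84TB against a 40TB raw license cap.
# Assumption: both drive size and license cap are decimal TB (10**12 bytes).
DRIVE_GB = 3_840          # one 3.84TB drive, in decimal GB
DRIVE_COUNT = 6
LICENSE_CAP_GB = 40_000   # community edition raw-capacity limit (assumed decimal TB)


def raw_gb(count: int, size_gb: int) -> int:
    """Total raw capacity before any redundancy."""
    return count * size_gb


def usable_gb(layout: str, count: int, size_gb: int) -> int:
    """Approximate usable capacity; ignores ZFS metadata, padding and slop space."""
    if layout == "mirror":    # 2-way mirrors: half the drives hold copies
        return (count // 2) * size_gb
    if layout == "raidz1":    # one drive's worth of parity
        return (count - 1) * size_gb
    if layout == "raidz2":    # two drives' worth of parity
        return (count - 2) * size_gb
    raise ValueError(f"unknown layout: {layout}")


raw = raw_gb(DRIVE_COUNT, DRIVE_GB)
print(f"raw: {raw / 1000:.2f} TB of {LICENSE_CAP_GB / 1000:.0f} TB licensed "
      f"({raw / LICENSE_CAP_GB:.1%})")
# Hypothetical layouts only; the actual pool layout is not stated.
for layout in ("mirror", "raidz1", "raidz2"):
    print(f"{layout:>7}: ~{usable_gb(layout, DRIVE_COUNT, DRIVE_GB) / 1000:.2f} TB usable")
```

Since the limit is on raw capacity, the layout choice doesn't change the licensing math: about 23TB raw uses a bit over half of the 40TB allowance either way.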

I have a RAM upgrade on the way to take each node from 64GB to 256GB. For now, the dual E5-2650 v3s are enough for the load I am pushing.

I am running the QuantaStor Technology Preview so I can use ZSTD compression and a fairly recent OpenZFS build. It also gives me an easy upgrade path from QuantaStor 5 to QuantaStor 6 when that comes out.

Open to questions and comments.

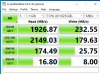

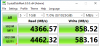

3,218 MB/s read, 3,134 MB/s write
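To compare these numbers with the 25G NICs, here is a small unit-conversion sketch. It assumes "MB" means 10^6 bytes; if the benchmark tool actually reports MiB/s, the Gb/s figures come out about 4.9% higher. The post doesn't describe the benchmark client or how it was connected, so this is only a conversion, not a claim about the test path.

```python
# Convert the reported benchmark figures from MB/s to Gb/s for comparison
# with the 25G links. Assumption: MB = 10**6 bytes, Gb = 10**9 bits.
LINK_GBPS = 25  # nominal line rate of a single 25GbE port


def mb_per_s_to_gbps(mb_per_s: float) -> float:
    """Megabytes per second (decimal) -> gigabits per second (decimal)."""
    return mb_per_s * 8 / 1000


results = {"read": 3_218, "write": 3_134}  # MB/s, as reported above
for name, mbps in results.items():
    gbps = mb_per_s_to_gbps(mbps)
    print(f"{name:>5}: {mbps:,} MB/s = {gbps:.2f} Gb/s "
          f"({gbps / LINK_GBPS:.0%} of one {LINK_GBPS}G port)")
```

Both results land at or slightly above the nominal rate of a single 25G port (about 25.74 Gb/s read and 25.07 Gb/s write).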
