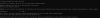

C:\WINDOWS\system32>fio-status -a

Found 4 ioMemory devices in this system with 1 ioDrive Duo

Driver version: 3.2.15 build 1699

Adapter: Dual Controller Adapter

Dell ioDrive2 Duo 2410GB MLC, Product Number:7F6JV, SN:US07F6JV7605128D0008

ioDrive2 Adapter Controller, PN:F4K5G

SMP(AVR) Versions: App Version: 1.0.35.0, Boot Version: 0.0.8.1

External Power Override: ON

External Power: NOT connected

PCIe Bus voltage: avg 11.63V

PCIe Bus current: avg 2.75A

PCIe Bus power: avg 31.02W

PCIe Power limit threshold: 49.75W

PCIe slot available power: unavailable

Connected ioMemory modules:

fct1: Product Number:7F6JV, SN:1231D1459-1111

fct3: Product Number:7F6JV, SN:1231D1459-1111P1

fct4: Product Number:7F6JV, SN:1231D1459-1121

fct5: Product Number:7F6JV, SN:1231D1459-1121P1

fct1 Attached

SN:1231D1459-1111

SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1

Located in slot 0 Upper of ioDrive2 Adapter Controller SN:1231D1459

Powerloss protection: protected

Last Power Monitor Incident: 26 sec

PCI:0d:00.0

Vendor:1aed, Device:2001, Sub vendor:1028, Sub device:1f71

Firmware v7.1.17, rev 116786 Public

576.30 GBytes device size

Format: v500, 140699218 sectors of 4096 bytes

PCIe slot available power: 25.00W

PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total

Internal temperature: 55.61 degC, max 56.11 degC

Internal voltage: avg 1.01V, max 1.02V

Aux voltage: avg 2.49V, max 2.50V

Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%

Active media: 100.00%

Rated PBW: 8.13 PB, 96.73% remaining

Lifetime data volumes:

Physical bytes written: 265,507,035,945,724

Physical bytes read : 185,626,851,430,832

RAM usage:

Current: 39,674,816 bytes

Peak : 40,290,496 bytes

Contained VSUs:

fct1: ID:0, UUID:9fb9db0d-acf3-4368-812d-946cfa5a56de

fct1 State: Online, Type: block device

ID:0, UUID:9fb9db0d-acf3-4368-812d-946cfa5a56de

576.30 GBytes device size

Format: 140699218 sectors of 4096 bytes
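The `576.30 GBytes device size` figure in the fct1 section follows from its `Format:` line: sector count times sector size. The sketch below checks that arithmetic. It assumes fio-status reports sizes in decimal gigabytes (10^9 bytes); this is inferred from the numbers matching, not stated by the tool. In binary units the same capacity would be about 536.7 GiB.

```python
# Values copied from the fct1 "Format:" line above.
SECTORS = 140_699_218
SECTOR_BYTES = 4096


def formatted_capacity_bytes(sectors: int, sector_bytes: int) -> int:
    """Usable size of a formatted device: sector count times sector size."""
    return sectors * sector_bytes


total = formatted_capacity_bytes(SECTORS, SECTOR_BYTES)
print(f"{total:,} bytes")
# Assumption: "GBytes" means decimal gigabytes (10**9 bytes).
print(f"{total / 1e9:.2f} GBytes")
```

All four VSUs report the same sector count, so the result applies to fct3, fct4 and fct5 as well.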

fct3 Attached

SN:1231D1459-1111P1

SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1

Located in slot 0 Upper of ioDrive2 Adapter Controller SN:1231D1459

Powerloss protection: protected

PCI:0d:00.0

Vendor:1aed, Device:2001, Sub vendor:1028, Sub device:1f71

Firmware v7.1.17, rev 116786 Public

576.30 GBytes device size

Format: v500, 140699218 sectors of 4096 bytes

PCIe slot available power: 25.00W

PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total

Internal temperature: 55.61 degC, max 56.11 degC

Internal voltage: avg 1.01V, max 1.02V

Aux voltage: avg 2.49V, max 2.50V

Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%

Active media: 100.00%

Rated PBW: 8.13 PB, 96.73% remaining

Lifetime data volumes:

Physical bytes written: 265,506,978,157,300

Physical bytes read : 185,626,777,918,328

RAM usage:

Current: 39,670,656 bytes

Peak : 40,273,856 bytes

Contained VSUs:

fct3: ID:0, UUID:54d333ed-2ec4-4964-97c3-19c23e328454

fct3 State: Online, Type: block device

ID:0, UUID:54d333ed-2ec4-4964-97c3-19c23e328454

576.30 GBytes device size

Format: 140699218 sectors of 4096 bytes
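The `PCIe negotiated link` line reports 4 lanes at 5.0 GT/s and 2000.00 MBytes/sec total. A 5.0 GT/s lane is PCIe 2.0 signalling, which uses 8b/10b line encoding: only 8 of every 10 transferred bits carry data. The sketch assumes that encoding and decimal megabytes, and reproduces the reported figure. The function name and parameters are illustrative, not part of any fio tool.

```python
def pcie_link_mbytes_per_sec(lanes: int, gt_per_sec: float,
                             payload_bits: int, line_bits: int) -> float:
    """Usable link bandwidth in decimal MB/s.

    gt_per_sec is the raw per-lane transfer rate (gigatransfers/s, one bit each);
    payload_bits/line_bits is the encoding efficiency (8/10 for 8b/10b).
    """
    data_bits_per_sec_per_lane = gt_per_sec * 1e9 * payload_bits / line_bits
    return lanes * data_bits_per_sec_per_lane / 8 / 1e6  # bits -> bytes -> MB


# Assumption: PCIe 2.0 link (5.0 GT/s per lane, 8b/10b encoding).
print(pcie_link_mbytes_per_sec(lanes=4, gt_per_sec=5.0, payload_bits=8, line_bits=10))
```

Without the encoding overhead the same link would appear to offer 2500 MB/s. The 20% difference is the 8b/10b cost, not a measurement of the card.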

fct4 Attached

SN:1231D1459-1121

SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1

Located in slot 1 Lower of ioDrive2 Adapter Controller SN:1231D1459

Powerloss protection: protected

Last Power Monitor Incident: 26 sec

PCI:07:00.0

Vendor:1aed, Device:2001, Sub vendor:1028, Sub device:1f71

Firmware v7.1.17, rev 116786 Public

576.30 GBytes device size

Format: v500, 140699218 sectors of 4096 bytes

PCIe slot available power: 25.00W

PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total

Internal temperature: 59.06 degC, max 60.04 degC

Internal voltage: avg 1.01V, max 1.02V

Aux voltage: avg 2.49V, max 2.50V

Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%

Active media: 100.00%

Rated PBW: 8.13 PB, 96.74% remaining

Lifetime data volumes:

Physical bytes written: 265,088,491,556,636

Physical bytes read : 185,154,759,318,888

RAM usage:

Current: 39,670,656 bytes

Peak : 40,282,176 bytes

Contained VSUs:

fct4: ID:0, UUID:c34c4337-7694-4cbd-b12d-7cee43786d7a

fct4 State: Online, Type: block device

ID:0, UUID:c34c4337-7694-4cbd-b12d-7cee43786d7a

576.30 GBytes device size

Format: 140699218 sectors of 4096 bytes
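The `Rated PBW ... % remaining` values line up with the `Physical bytes written` counters. The sketch below divides bytes written by the rated endurance and reproduces both 96.73% (fct1) and 96.74% (fct4) to two decimals. This is a consistency check, not the documented fio-status formula. It assumes that "PB" means decimal petabytes (10^15 bytes) and that the percentage is based on physical, not host, bytes written. Because 8.13 PB is itself a rounded figure, results can drift slightly from the tool's output in other cases.

```python
# "Rated PBW: 8.13 PB" from the output above; decimal petabytes assumed.
RATED_PBW_BYTES = 8.13e15


def pbw_remaining_pct(physical_bytes_written: int,
                      rated_bytes: float = RATED_PBW_BYTES) -> float:
    """Percentage of rated write endurance not yet consumed (assumed formula)."""
    return 100.0 * (1.0 - physical_bytes_written / rated_bytes)


# "Physical bytes written" counters copied from the fct1 and fct4 sections.
for name, written in [("fct1", 265_507_035_945_724),
                      ("fct4", 265_088_491_556_636)]:
    print(f"{name}: {pbw_remaining_pct(written):.2f}% remaining")
```

At roughly 0.27 PB written per module, each module has used only about 3.3% of its rated endurance.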

fct5 Attached

SN:1231D1459-1121P1

SMP(AVR) Versions: App Version: 1.0.29.0, Boot Version: 0.0.9.1

Located in slot 1 Lower of ioDrive2 Adapter Controller SN:1231D1459

Powerloss protection: protected

PCI:07:00.0

Vendor:1aed, Device:2001, Sub vendor:1028, Sub device:1f71

Firmware v7.1.17, rev 116786 Public

576.30 GBytes device size

Format: v500, 140699218 sectors of 4096 bytes

PCIe slot available power: 25.00W

PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total

Internal temperature: 59.06 degC, max 60.04 degC

Internal voltage: avg 1.01V, max 1.02V

Aux voltage: avg 2.49V, max 2.50V

Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%

Active media: 100.00%

Rated PBW: 8.13 PB, 96.74% remaining

Lifetime data volumes:

Physical bytes written: 265,088,451,953,036

Physical bytes read : 185,154,802,474,104

RAM usage:

Current: 39,670,656 bytes

Peak : 40,282,176 bytes

Contained VSUs:

fct5: ID:0, UUID:8d554735-3233-4685-8b3c-9b5061d563cd

fct5 State: Online, Type: block device

ID:0, UUID:8d554735-3233-4685-8b3c-9b5061d563cd

576.30 GBytes device size

Format: 140699218 sectors of 4096 bytes
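With four modules, the health fields are easier to compare side by side than by scrolling through each section. The sketch below is a hypothetical helper, not part of the Fusion-io tooling. It scans saved `fio-status -a` text and collects temperature, reserve status and PBW remaining per module. It assumes the layout shown above: each module section opens with an `fctN Attached` line, followed by `Label: value` lines. The regular expressions match only the exact wording seen here and may need adjusting for other driver versions.

```python
import re

# Hypothetical parser for saved `fio-status -a` output; the patterns assume the
# line formats shown above (driver 3.2.15) and are not an official interface.
ATTACHED = re.compile(r"^(fct\d+) Attached$")
TEMP = re.compile(r"^Internal temperature: ([\d.]+) degC, max ([\d.]+) degC$")
PBW = re.compile(r"^Rated PBW: ([\d.]+) PB, ([\d.]+)% remaining$")
RESERVE = re.compile(r"^Reserve space status: (\w+);")


def summarize(text: str) -> dict[str, dict[str, object]]:
    """Map each fctN module to a few health fields found in its section."""
    devices: dict[str, dict[str, object]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if m := ATTACHED.match(line):
            # A new module section starts; later fields belong to it.
            current = devices.setdefault(m.group(1), {})
        elif current is None:
            continue  # adapter-level lines before the first module section
        elif m := TEMP.match(line):
            current["temp_c"] = float(m.group(1))
            current["temp_max_c"] = float(m.group(2))
        elif m := PBW.match(line):
            current["pbw_remaining_pct"] = float(m.group(2))
        elif m := RESERVE.match(line):
            current["reserve_status"] = m.group(1)
    return devices


# A trimmed excerpt of the output above, used as sample input.
sample = """
Adapter: Dual Controller Adapter
fct1 Attached
Internal temperature: 55.61 degC, max 56.11 degC
Reserve space status: Healthy; Reserves: 100.00%, warn at 10.00%
Rated PBW: 8.13 PB, 96.73% remaining
fct4 Attached
Internal temperature: 59.06 degC, max 60.04 degC
Rated PBW: 8.13 PB, 96.74% remaining
"""

for name, fields in summarize(sample).items():
    print(name, fields)
```

Parsing the text this way keeps the sketch independent of any vendor API. The trade-off is that it breaks if a future driver rewords these labels.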