Hey,

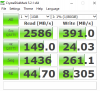

So I've built a couple of all-in-one ESXi boxes with either FreeNAS or FreeBSD running ZFS, which I pass to ESXi as NFS datastores. I tried to run VMs on them, but benchmarks exposed gawd-awful write speeds, totally unusable. I ended up using them just for ISO storage, (slow) backups, content libraries, etc., so not a total bust, but still.

I ran KVM for a while on a box with a ZFS datastore and that was much better, but I want to use ESXi. Is there something else that works better? I was wondering if Napp-it avoids this awful sync issue FreeBSD/FreeNAS has with ZFS writes. I've tried an NVMe SLOG (SM953 with 1000+ MB/s writes), sync=disabled, and recompiling a kernel with modified sync behavior (I'm sure you've all seen it). It's all crap. ZFS just sucks as a VM datastore in ESXi.
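If anyone wants to reproduce the gap, here's a rough Python sketch (my own, not part of FreeNAS, FreeBSD or Napp-it) that compares plain buffered writes with an fsync after every write in a given directory. The assumption is that fsync-per-write loosely approximates the stable writes ESXi's NFS client is commonly reported to request; the block size and count are arbitrary, and the function name `write_throughput` is just made up for illustration.

```python
import os
import sys
import tempfile
import time


def write_throughput(directory: str, block_size: int = 64 * 1024,
                     blocks: int = 256, sync_each_write: bool = False) -> float:
    """Write `blocks` blocks of `block_size` bytes to a temp file in
    `directory` and return the throughput in MB/s.

    With sync_each_write=True, os.fsync() is called after every block,
    roughly imitating a client that insists on sync (stable) writes.
    """
    payload = os.urandom(block_size)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        for _ in range(blocks):
            view = memoryview(payload)
            while view:  # os.write may write fewer bytes than asked
                written = os.write(fd, view)
                view = view[written:]
            if sync_each_write:
                os.fsync(fd)
        os.fsync(fd)  # final flush so both modes end with data on disk
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
        os.remove(path)  # leave the datastore directory as we found it
    return (block_size * blocks) / (1024 * 1024) / max(elapsed, 1e-9)


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    buffered = write_throughput(target)
    synced = write_throughput(target, sync_each_write=True)
    print(f"buffered: {buffered:.1f} MB/s, fsync per write: {synced:.1f} MB/s")
```

Run it against a directory on the NFS mount (or inside a guest on that datastore); a large ratio between the two numbers points at sync-write latency rather than raw disk bandwidth.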

Does Napp-it get around the slow writes to NFS datastores in some other way? Or should I just ditch ZFS and run XFS on mdadm?