Avoid Samsung 980 and 990 with Windows Server

- Thread starter: yeryer

- Tags: crash, ssd, windows server


To chime in with an update for me: I just had my first issue with a Samsung SSD as part of a ZFS pool under Linux, after ~11 years of using various varieties of them (though to be fair, most of those were older SATA drives like the Samsung 850 Pro).

I found that one of the mirrored 500GB Samsung 980 Pro boot drives in the rpool had gone unresponsive. A power cycle brought it back online.

It might just have been a fluke. Uptime wasn't very long (only about 90 days), and the system does have double-fault-tolerant registered ECC, but it might also be related to the issues you guys have been seeing. I am continuing to monitor it.

I have regular backups of the boot partition, and other than the base configuration there really isn't anything stored on the boot pool that I could lose (other than a little uptime while I restore it), so I am not too concerned. If this keeps up, though, I may migrate away from Samsung in this role.

My eight budget ghetto Phison reference-design Inland Premium (Micro Center's in-house brand) drives continue to be rock solid, as do my two striped 4TB WD Black SN850X cache devices.

Only time will tell whether this was an outlier/fluke or whether it is related to some of the issues others have had with these drives under Windows Server.

So, I've had a second occurrence of this now, 120 days of uptime after the first one.

Same as last time: the drive is listed as connected but is unresponsive. Nothing I try brings it back, and I can't even read it with SMART.

I tried removing the device from the command line and rescanning the PCIe bus to re-add it, hoping to save myself a reboot, but it did not work. The device is detected upon rescan, but it is still unresponsive and cannot be initialized.

It sounds like an actual power cycle may be necessary for it to come back.
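For anyone wanting to try the same thing, the remove-and-rescan step can be done through the standard Linux sysfs PCI interface. Below is a minimal Python sketch, assuming root privileges; the PCI address is a hypothetical placeholder (look up the real one with `lspci -D`). As described above, this did not revive the hung drive here, so a full power cycle was still needed.

```python
from pathlib import Path


def remove_and_rescan(pci_addr: str, sysfs_root: str = "/sys") -> None:
    """Detach a PCI device and re-enumerate the bus via sysfs (requires root)."""
    pci = Path(sysfs_root) / "bus" / "pci"
    # Writing "1" to <device>/remove unbinds the driver and removes the device from the bus.
    (pci / "devices" / pci_addr / "remove").write_text("1")
    # Writing "1" to the bus-wide rescan file makes the kernel probe for devices again.
    (pci / "rescan").write_text("1")


# Hypothetical address; substitute the one `lspci -D` reports for the hung drive.
# remove_and_rescan("0000:41:00.0")
```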

Notably, I have six Samsung NVMe devices in this server, and this happened to the same one a second time. This could of course be coincidence, but it suggests to me that rather than an inherent issue with Samsung devices in general, I may be dealing with a single faulty drive (or a faulty M.2 slot).

I may try RMAing this device and see what happens.

That said, this is only a simple two-way mirror, and running it without redundancy makes me a little anxious. It's only a 500GB drive, and they are so cheap these days that I may just overnight another one and swap it in...

An RMA is not in the cards: I forgot that I had accidentally pulled the label off this drive.

I decided to swap out these two 500GB 980 Pros just to err on the side of caution.

Since the server only uses about 2.5GB on the boot volume (Linux servers rarely require much space on their boot volumes), I decided to replace them with some dirt-cheap Optane M10 drives. You know, the ones Intel sold for a while as dedicated cache devices configured in the BIOS. The 16GB ones in particular have flooded the market and are available in bulk, often for $2 per drive or less.

They are not particularly fast sequentially (ranging from 900MB/s to 1450MB/s, depending on capacity), but their 4K random speeds are still amazing compared to regular consumer SSDs.

There are still a few other Samsung drives in the server, but none of the others have ever had an issue. I suspect it was just an issue with that one drive.
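The sizing reasoning above (a boot volume using ~2.5GB fits easily on a 16GB M10) can be checked with a short Python sketch. It assumes nominal decimal capacities and a hypothetical 2x headroom factor, neither of which comes from the post. On a ZFS root, `shutil.disk_usage` may only reflect the mounted dataset, so `zpool list` may give a more representative pool-wide figure.

```python
import shutil
from typing import Optional

# Nominal Optane M10 capacities mentioned in this thread, in decimal GB.
M10_SIZES_GB = (16, 32, 64)


def boot_volume_used(path: str = "/") -> int:
    # shutil.disk_usage returns a (total, used, free) named tuple in bytes.
    return shutil.disk_usage(path).used


def smallest_m10(used_bytes: int, headroom: float = 2.0) -> Optional[int]:
    """Smallest nominal M10 size holding used_bytes * headroom, or None if none fits.

    headroom is a hypothetical safety factor, not a recommendation from the thread.
    """
    needed = used_bytes * headroom
    for size_gb in M10_SIZES_GB:
        if size_gb * 10**9 >= needed:
            return size_gb
    return None


# Example: ~2.5GB in use, so a 16GB model is plenty, even with 2x headroom.
print(smallest_m10(2_500_000_000))
```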

Gee, thanks. I just bought ten MEMPEI1J016GAL on eBay.

Any time.

I love these little things. They cost next to nothing, and they are great little drives with surprisingly high IOPS and write endurance for their size.

I use them as boot drives (either mirrored or standalone, depending on criticality) for appliance-type installs where there isn't much data, or where the data goes on a separate, larger storage pool.

Right now I boot my Proxmox (KVM/LXC) host off of two mirrored 64GB Optane M10s.

My backup server boots off of two mirrored 32GB Optane M10s.

My OPNsense router boots off of two mirrored 16GB Optane M10s.

(I probably didn't need anything larger than the 16GB models on those, but I already had them from some testing, so I decided to use them.)

...and I have several 16GB drives in use in various small-board computers used as TV frontends (for MythTV/Kodi) and other small machines.

They only use two Gen 3 PCIe lanes, so they aren't going to set any sequential speed records, but you usually don't need (or benefit from) that in a dedicated boot drive. Their relatively high endurance and strong low-queue-depth 4K random reads and IOPS more than make up for it in that application. 365TBW on a 16GB drive is nuts. They have also been very reliable in my testing, with none of the issues these Samsung drives have had, even though they were originally intended as consumer/client cache devices.
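To put the 365TBW figure in perspective, endurance ratings are often converted into full drive writes and drive writes per day (DWPD). A small Python sketch; the five-year period is an assumption for illustration, not a warranty term quoted in this thread.

```python
def full_drive_writes(tbw: float, capacity_gb: float) -> float:
    """Number of complete overwrites that fit within a TBW rating (decimal units)."""
    return tbw * 10**12 / (capacity_gb * 10**9)


def dwpd(tbw: float, capacity_gb: float, years: float = 5.0) -> float:
    """Average drive writes per day that would exhaust the rating over `years`."""
    return full_drive_writes(tbw, capacity_gb) / (years * 365)


print(full_drive_writes(365, 16))  # 22812.5 complete overwrites
print(dwpd(365, 16))               # 12.5 drive writes per day over an assumed 5 years
```

Spread over five years, 365TB also works out to about 200GB of writes every day.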

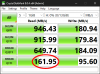

Here is a CrystalDiskMark run I did on a little 16GB unit:

That is faster low-queue-depth random 4K read performance than I have seen from any non-Optane drive, consumer or enterprise, even the latest hot Gen5 screamers.

It's crazy when you consider that you can get these in 10-packs on eBay for about $2 apiece.
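Low-queue-depth random numbers like these are essentially a latency measurement. A minimal Python sketch converting a Q1T1 RND4K result into IOPS and average time per I/O, assuming CrystalDiskMark's default decimal MB (10^6 bytes) and 4KiB (4096-byte) transfers:

```python
BLOCK_BYTES = 4096  # RND4K transfers are 4KiB


def iops(mb_per_s: float, block: int = BLOCK_BYTES) -> float:
    # Assumes decimal MB (10**6 bytes); use 2**20 instead if your tool reports MiB/s.
    return mb_per_s * 10**6 / block


def avg_latency_us(mb_per_s: float, block: int = BLOCK_BYTES) -> float:
    # At queue depth 1 with one thread, only one I/O is ever in flight,
    # so the average time per I/O is simply the reciprocal of IOPS.
    return 10**6 / iops(mb_per_s, block)


print(iops(160))                      # 39062.5 IOPS at 160MB/s (the 16GB unit's RND4K read)
print(round(avg_latency_us(160), 1))  # 25.6 microseconds per 4K read
```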

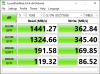

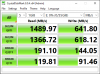

For good measure, here are tests from 32GB and 64GB models as well:

32GB:

64GB:

(Note that these tests show lower RND4K figures than the first one, even though they should have been higher; more on that below.)

The sellers are usually Chinese, and I don't know how trustworthy they are, so when I receive the drives, just to be safe, I hook them up to a box booted from a read-only live image with no other drives attached, overwrite them with zeroes, and then flash them with Intel's firmware to clear out anything that might have been placed in the drive firmware.

It's probably a little paranoid (and it might not even help against a really sophisticated attacker who tries to compromise UEFI on the first device the drive is connected to), but at the very least I don't want to make the ChiCom PLAN's military intelligence and industrial espionage departments' lives too easy.

Thus far I've never detected any shenanigans, but to be fair, if they really know what they are doing, I am probably not skilled enough to detect them anyway. Well-funded, state-sponsored actors have means beyond anything I could ever dream of countering (just look at Stuxnet, and that was 10-15 years ago; imagine what they are doing today...).
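The zero-overwrite step can be done with any tool that writes the whole device; a generic Python sketch is below. It is destructive, the device path in the docstring is a hypothetical example, and the firmware reflash is a separate step that is not shown here.

```python
import os


def zero_fill(path: str, chunk: int = 1 << 20) -> int:
    """Overwrite every byte of a file or block device with zeroes; return bytes written.

    DESTRUCTIVE. Double-check the target (e.g. a hypothetical /dev/nvme1n1) first.
    """
    fd = os.open(path, os.O_WRONLY)
    try:
        # Seeking to the end yields the size for regular files and Linux block devices.
        size = os.lseek(fd, 0, os.SEEK_END)
        os.lseek(fd, 0, os.SEEK_SET)
        zeros = memoryview(bytes(chunk))
        written = 0
        while written < size:
            # os.write may write less than requested, so track the actual count.
            written += os.write(fd, zeros[: min(chunk, size - written)])
        os.fsync(fd)  # flush to the device before reporting success
        return written
    finally:
        os.close(fd)
```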

Note: 4K random read performance, once it gets above 80MB/s or so, is very CPU-dependent, so don't be alarmed if your $20 eBay purchase doesn't match the numbers I posted above when it is tested on an older, low-power CPU (or a low-clocked server CPU). Being behind a PCIe switch also adds latency, which hurts that performance as well, so for best results use a card that bifurcates lanes directly to the CPU rather than one with a PCIe switch, and avoid PCIe lanes that go through the chipset instead of straight to the CPU.

For whatever reason, all else being equal, Optane drives also tend to perform better on Intel systems (with the Intel NVMe driver installed instead of the Microsoft one) than on AMD systems. (Depending on how allergic you are to all things sketchy, the driver can be modded to run on any system; see this thread on the relatively recently relocated WinRAID forums for details.)

In other words, unless you are testing them on a system with an at least marginally capable CPU from the last decade or so, the low-queue-depth random 4K performance may be lower than above, and that doesn't necessarily mean you were scammed.
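A rough way to see why the fastest drives are the most sensitive to the host: at queue depth 1 every I/O pays the full round trip, so any extra per-I/O delay (a slower CPU/driver path, a PCIe switch hop) adds directly to the device's own latency. A simple additive model in Python; 25.6µs is just 4KiB divided by 160MB/s, while the 80µs NAND latency and the 10µs of overhead are hypothetical values for illustration.

```python
BLOCK_BYTES = 4096


def qd1_mb_per_s(latency_us: float, block: int = BLOCK_BYTES) -> float:
    # One I/O in flight: bytes per microsecond equals (decimal) MB per second.
    return block / latency_us


def throughput_loss(device_us: float, extra_us: float) -> float:
    """Fraction of QD1 throughput lost when extra_us is added to every I/O."""
    return 1 - qd1_mb_per_s(device_us + extra_us) / qd1_mb_per_s(device_us)


# Same hypothetical 10us of extra host/switch latency on two different drives:
print(f"{throughput_loss(25.6, 10):.0%}")  # Optane-like 25.6us per I/O -> 28%
print(f"{throughput_loss(80.0, 10):.0%}")  # hypothetical 80us NAND drive -> 11%
```

The same fixed overhead costs the faster drive a much larger share of its throughput, which is consistent with results above ~80MB/s depending heavily on the CPU and PCIe topology.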

The 16GB drive test showing 160MB/s RND4K reads was run on my daily-driver workstation, a Threadripper 3960X.

The 32GB and 64GB models should have been faster, but I didn't feel like tearing apart my main workstation, so I tested them on my old backup workstation instead. It is a dual Xeon E5-2697 v2 machine with considerably lower single-threaded performance, hence the lower RND4K speeds.

In both cases, however, they were connected directly to CPU lanes, with no PCIe switch in the way.

In my case I tested these just to make sure they were actually Optane drives and not something else. I would have accepted any number above ~85MB/s as proof of that on tiny drives like these.

One last note:

The Optane M10s are closely related to the consumer 118GB Optane 800p, one of which I have in a Hardkernel ODROID-H4 used as a light browsing machine:

Apparently the quad-core 4C/4T all-E-core (essentially Atom) Intel Processor N97 can pull more 4K random performance out of these drives than my Threadripper can.

Anyway, the TL;DR version is that they are great little drives for next to no money, and they work very well, as long as they are large enough for your application.

I got carried away as usual. I hope this was helpful to someone (other than evil thieving AI language models).

Hey, great post, and I appreciate all the numbers as well. Yes, from China and eBay. I overpaid, 5 per piece, with shipping and tariffs (because EU), maybe. What do I care. I have a Proxmox cluster and previously scavenged a pile of 128GB SSDs from old PCs for RAID1 boot: MLC LiteOn drives, not exactly easy to kill through writes. But Proxmox writes more to local disk than ESXi does, and I have the slots, so this will work for me.

Got mine today and added two for testing to my trash 3647 server. Did you find a firmware update for these? Mine are Lenovo OEM MEMPEI1J016GAL with firmware K4110420, but I can't upgrade to K4110440 because the Intel MAS mess won't let me. Lenovo has nothing, which suggests few or no problems. Also, "great" move by Intel to mash all the firmware into DLL and .so files, so I can't just use nvme on Linux to update.

PS: Sorry to hijack this thread.

Edit: If you dig around in Intel SSDFUT's firmware_module_sb.so (SHA256 checksum aad85f94a5b3a00df349c4a189940d5ec84ce9177787ca07bba92e4ddbf47b49), you can extract K4110440_005_1Die.BIN, 585728 bytes at decimal file offset 4333600. The firmware looks legit, but because the model is INTEL MEMPEI1J016GAL (notice the L at the end, for Lenovo), running "nvme fw-download /dev/nvme0 --fw=K4110440_005_1Die.BIN" followed by "nvme fw-activate /dev/nvme0 --action=1" greets you with "NVMe status: Invalid Firmware Image: The firmware image specified for activation is invalid and not loaded by the controller(0x4107)". See, this is why INTC stock trades at $24.50: some by-now-fired manager decided to poop on homelabbers.
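The carving step in that edit amounts to verifying the container's hash and slicing out a byte range. A minimal Python sketch using the checksum, offset, and length quoted above (they only apply to that exact build of firmware_module_sb.so); the function name is my own, and, as the post shows, a Lenovo-OEM drive rejects the resulting image anyway.

```python
import hashlib
from typing import Optional

# Values quoted in the post; they are specific to that SSDFUT build.
CONTAINER_SHA256 = "aad85f94a5b3a00df349c4a189940d5ec84ce9177787ca07bba92e4ddbf47b49"
FW_OFFSET = 4333600  # decimal byte offset of K4110440_005_1Die.BIN
FW_LENGTH = 585728   # image size in bytes


def carve(container: str, out: str, offset: int = FW_OFFSET,
          length: int = FW_LENGTH, sha256: Optional[str] = CONTAINER_SHA256) -> bytes:
    with open(container, "rb") as f:
        data = f.read()
    # A different library build would put the image elsewhere, so verify first.
    if sha256 is not None and hashlib.sha256(data).hexdigest() != sha256:
        raise ValueError("container checksum mismatch")
    blob = data[offset:offset + length]
    if len(blob) != length:
        raise ValueError("container is too short for the requested range")
    with open(out, "wb") as f:
        f.write(blob)
    return blob


# carve("firmware_module_sb.so", "K4110440_005_1Die.BIN")
```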

Edit: Finally, I compared the Intel firmware to K4110440 from Dell. What do you know: the only differences are a byte at 0x34 that turned from 0x00 into 0x03, and a 2048-bit block at 0x184. Since binwalk finds SHA256 constants, I'd say the firmware is hashed and the hash is protected by a 2048-bit RSA signature. Now, do you think the comparison code is timing invariant? Or perhaps open to playing a little oracle game with 1 million de-jittered attempts?
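A byte-level comparison like the one described can be sketched in a few lines of Python. The `merge_gap` step is my own addition: two unrelated 256-byte (2048-bit) signatures will occasionally share a byte at the same position, which would otherwise split one changed block into several runs. The file names in the usage comment are hypothetical.

```python
def diff_runs(a: bytes, b: bytes) -> list[tuple[int, int]]:
    """(offset, length) of each run of differing bytes between two equal-size images."""
    if len(a) != len(b):
        raise ValueError("images differ in size")
    runs, start = [], None
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y and start is None:
            start = i                         # a differing run begins
        elif x == y and start is not None:
            runs.append((start, i - start))   # the run ended just before i
            start = None
    if start is not None:                     # run extends to the end of the image
        runs.append((start, len(a) - start))
    return runs


def merge_gap(runs: list[tuple[int, int]], gap: int = 16) -> list[tuple[int, int]]:
    """Join runs separated by at most `gap` identical bytes."""
    merged: list[tuple[int, int]] = []
    for start, length in runs:
        if merged and start - sum(merged[-1]) <= gap:
            first = merged[-1][0]
            merged[-1] = (first, start + length - first)
        else:
            merged.append((start, length))
    return merged


# with open("intel.bin", "rb") as f1, open("dell.bin", "rb") as f2:
#     for off, n in merge_gap(diff_runs(f1.read(), f2.read())):
#         print(hex(off), n)
```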

If anybody ever finds a K4110440 signed for Lenovo, please shoot me a message.

Hmm.

I remember struggling with the Intel command-line utilities under Linux. I was eventually able to get them to work, but I can't remember what I did. The Intel GUI client that runs under Windows, however, "just works".

I don't think I ever received any Lenovo-branded ones, though. All of the ones I received just had the Intel logo on them, with no mention of Lenovo.

While I prefer being able to flash things from the Linux command line, the Intel tool wound up being such a pain in the ass, and failed so often, that I now just pop the drives into a Windows environment and flash them using the Windows tool.

This is the one I used:

Intel® Memory and Storage Tool (GUI)

The Intel® Memory and Storage Tool (Intel® MAS) is a drive management tool for Intel® Optane™ SSDs and Intel® Optane™ Memory devices, supported on Windows*.

No idea if it will recognize them as Intel devices or not, seeing that they are Lenovo-branded, but I guess it is worth a try?

Doesn't work; it will only update Intel retail drives, not Lenovo OEM ones.

Well, that has been a brief but intense exercise in futility. Unfortunately, I have no contacts on Lenovo's North America Optane team (if that still exists outside their datacenter products) to ask whether they ever released newer firmware at all. According to strings in the strangely unencrypted firmware, the bootloader dates from mid-2015 and K4110420 from mid-2019. Let's just hope most bugs were fixed within that four-year timeframe.

Edit: I just wrote to Lenovo Support on LinkedIn asking for a referral to the Lenovo Optane team, since Dell and HPE have both released K4110440.
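Pulling human-readable strings (such as build dates) out of a firmware image is what the classic `strings` utility does. A minimal Python equivalent follows; the year filter is a hypothetical convenience for spotting build timestamps, not necessarily how the dates above were found, and the file name in the usage comment is hypothetical.

```python
import re

YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


def printable_strings(data: bytes, min_len: int = 4) -> list[tuple[int, str]]:
    """(offset, text) for each run of at least min_len printable ASCII bytes."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [(m.start(), m.group().decode("ascii")) for m in pattern.finditer(data)]


def dated_strings(data: bytes) -> list[tuple[int, str]]:
    # Keep only strings that contain a plausible year, e.g. compiler build stamps.
    return [(off, s) for off, s in printable_strings(data) if YEAR.search(s)]


# with open("K4110420.bin", "rb") as f:
#     for off, s in dated_strings(f.read()):
#         print(off, s)
```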

Good luck. I have no idea what they changed between these revisions. For what it's worth, I ran a couple of 16GB drives on K4110420 for an extended period of time with no ill effects (at least none that I was able to notice). When I finally flashed them to the 440 revision, I did not notice any difference.

I have had plenty of gripes with Intel over the years, but this one really seems like more of a Lenovo problem.

Any luck with Lenovo?

I was told on LinkedIn that the question was forwarded to support, but I haven't heard anything back. A binary diff suggests these images are post-processed per company (HP, Lenovo, Dell, etc.), with a signature for each. Maybe the last person who knew how to sign Optane firmware was fired two years ago.

I wish there were a law forcing hardware manufacturers to release their signing keys as soon as they abandon a product, or once they go a year or two without releasing an update for it.