Hello STH forum goers,

I've been in IT for a while, but my brain has no real feel for the cost/usage relationship in a home environment unless I sit my rear down and watch a Kill A Watt for 8hrs. I do have a solar panel system w/ battery backup, so power usage is about 50/50 on what I actually pay out. I am looking to retire my old mini-ITX FreeNAS/TrueNAS box from 2016 and my dusty MS Hyper-V test server of frankenscraps. I've been reading deeply on running VMware ESXi/vSphere w/ Veeam + cloud repo and TrueNAS as a VM. I will rely more on "thin/zero client" RDP from the mobile devices in my household, such as old laptops/tablets. No plans on hosting a Plex server since I am lazy about streaming. TrueNAS storage will be for family archiving/DVD movie backups/(BD backups?). No plans to move the internal network beyond 1Gb, since there is rarely any need to stream 4K to the gaming consoles in the home theater section.
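For anyone else who struggles to connect watts to dollars, here is a rough sketch of the arithmetic. The idle wattages and the $/kWh rate below are placeholder assumptions, not measurements from any of this hardware; the only number taken from my situation is the roughly 50% of usage that solar does not cover.

```python
def annual_cost_usd(avg_watts, usd_per_kwh, grid_fraction=0.5, hours_per_day=24.0):
    """Estimate the yearly electricity cost of a box that runs at avg_watts.

    grid_fraction is the share of consumption actually billed
    (0.5 models the "50/50" solar offset mentioned above).
    """
    kwh_per_year = avg_watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * usd_per_kwh * grid_fraction


# Hypothetical average draws for the two platform options (assumptions only).
candidates = {"consumer AM4 build": 60, "EPYC build": 120}
RATE_USD_PER_KWH = 0.20  # assumed utility rate, substitute your own

for name, watts in candidates.items():
    cost = annual_cost_usd(watts, RATE_USD_PER_KWH)
    print(f"{name}: ~${cost:.0f} per year at {watts} W average")
```

Plugging in real Kill A Watt readings in place of the guessed wattages turns this into an actual comparison between 1a and 1b.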

Expected Project Completion Date Q2 2023.

The grit:

Estimated Budget $2.5K US

Default Hardware:

Chassis: 4U Rosewill chassis, possibly with hot-swap 3.5" bays

OS/VM Primary Storage: 2x 1TB or 2x 2TB SK Hynix P31s (RAID1)

TrueNAS Secondary/Archive Storage: 4x 14TB Seagate Exos or IronWolf. Mirror vdevs or a RAIDZ2 vdev

PSU: Seasonic Focus PX 80+ Platinum 650W

Memory: 64GB ECC Minimum
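On the mirror vs RAIDZ2 question for the 4x 14TB archive pool, a quick back-of-the-envelope sketch may help. It ignores ZFS metadata, padding, and free-space headroom, so treat the results as upper bounds rather than what TrueNAS will report; the function name is just for illustration.

```python
def usable_tb(drives, size_tb, layout):
    """Approximate usable capacity in TB, before ZFS overhead.

    "mirror" means striped 2-way mirror vdevs; "raidz2" means one RAIDZ2 vdev.
    """
    if layout == "mirror":
        return (drives // 2) * size_tb   # half the drives hold copies
    if layout == "raidz2":
        return (drives - 2) * size_tb    # two drives' worth of parity
    raise ValueError(f"unknown layout: {layout}")


for layout in ("mirror", "raidz2"):
    tb = usable_tb(4, 14, layout)
    tib = tb * 1e12 / 2**40              # drives are sold in TB, ZFS reports TiB
    print(f"{layout}: {tb} TB usable (~{tib:.1f} TiB)")
```

With only four drives both layouts land on the same raw usable capacity, so the choice comes down to behavior: RAIDZ2 survives any two drive failures, while two mirror vdevs survive two failures only if they hit different pairs, but mirrors generally resilver faster and give better random I/O.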

1a. Go cheap but still expensive on an AMD consumer platform w/ R7 5800X and ASRock Rack X570D4U ~ $700 US

PRO: Lower TDP/power usage, fewer ECC DIMMs required (2x vs 4x)

CON: Less PCIe expansion if I ever add an HBA (not sure if the second NVMe will eat the PCIe lanes needed for 8 SATA)

1b. Alternative: go bigger on an AMD enterprise platform w/ EPYC 7302P and Supermicro H12SSL-i ~ $720 US (eBay chances...)

PRO: More cores for more VM instances & testing, PCIe lanes galore with possible expansion to thin gaming clients. (Maybe a simple 3D rendering VM for 3D printing projects, but that is wishful thinking given my available time.)

CON: Slightly older arch, higher TDP, higher power usage incl. at idle, and registered ECC RAM costs go up with a 4x DIMM minimum

Current Setup:

Recently completed StarTech 12U Openframe

StarTech 1U 8-Outlet PS

Pending Hardware: 2U 16" Shelf and 2U Cyberpower UPS

Gaming PC:

Rosewill RSV-R4000 w/AMD R7 7700x/ASRock X670E Pro RS/32GB DDR5 5600 G.Skill FlareX5/1TB Samsung 980 Pro NVMe/Gigabyte G.OC RX6650XT/Seasonic Focus PX650W (All Noctua cooling)

FreeNAS/TrueNAS:

BitFenix Prodigy Mini-ITX Tower w/ Intel Pentium G3258/ASRock Rack mini-ITX C-something Intel chipset/16GB ECC DDR3/2x 32GB USB flash drives/4x 3TB WD or 3TB SG in RV1

(Running 400W PSU that is 7-8yrs old from my frankenscrap test server after the original 550W PSU fan seized up... *gulp*)

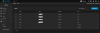

Network:

New stuff from 2021 but only 1Gb.
