ZFS Advice for new Setup

- Thread starter humbleThC


I just turned LZ4 on myself, for additional benchmarking results.

Also, I'm curious whether I'm 'doing it wrong' with my partition scheme.

I used the Napp-It GUI, enabled partition support, and created the partitions 85%/15% via the GUI.

I noticed it's using the MS-DOS partition scheme (MBR) and not GPT. When you initialize a disk for ZFS, it normally partitions it as GPT.

My question is: am I misaligned to 4K sectors because I'm splitting my SSDs into L2ARC/ZIL and using MBR?

I'm very familiar with disk crossing in enterprise arrays, and don't want to make a silly mistake.

Perhaps I should have partitioned manually? If this is an issue, it could be a 30-50% performance hit on my SSDs.
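For what it's worth, alignment depends on where each partition starts rather than on MBR versus GPT as such. A minimal sketch of the check, assuming 512-byte logical sectors and 4K physical blocks; the start sectors below are illustrative examples only, not values read from these SSDs:

```python
SECTOR_BYTES = 512            # assumed logical sector size
PHYSICAL_BLOCK_BYTES = 4096   # assumed 4K physical block size


def is_4k_aligned(start_sector: int, sector_bytes: int = SECTOR_BYTES) -> bool:
    """Return True if the partition's first byte lands on a 4K boundary."""
    return (start_sector * sector_bytes) % PHYSICAL_BLOCK_BYTES == 0


# Illustrative start sectors: 63 is the classic legacy MBR offset,
# 2048 is the 1 MiB offset most current partitioning tools use.
for start in (63, 2048):
    print(start, is_4k_aligned(start))
```

Plugging in the real start sector of each cache/log partition from the OS's partition listing would answer the question for this setup.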

Just some additional testing this morning... I'm likely going to tear everything down and try to optimize/tune a bit more. I also want to test more against the Intel SSD RAID1/0 pool versus RAIDz1 by itself. For the fun of it, I might re-test everything again with the (4) Intel SSDs as dedicated ZIL (1.6TB). It'll be the ultimate waste of capacity, just to see if I can squeeze the IOPS out of 4 SSDs. It will also tell me whether my misalignment issue is actually an issue, or whether the double-dipping as L2ARC takes away from the ZIL itself.

But here's the Hitachi pool still, with the Intels still partitioned as cache/logs as above. Now with LZ4 enabled as well.

Hitachi Pool (RAIDz1*2 with SSD) – ZFS Volume presented via iSCSI/iSER with MPIO

LZ4 Compression Disabled

Sync Disabled (QD4) / Sync Always (QD4) / Sync Always (QD10)

LZ4 Compression Enabled

Sync Disabled (QD4) / Sync Always (QD4) / Sync Always (QD10)

No noticeable difference with or without LZ4 via ATTO Disk Benchmark.

Most likely the dataset is incompressible.

Sync Disabled (QD16) / Sync Always (QD16)

Playing with CrystalDiskMark on the Windows VM as well as ATTO, the numbers are pretty much in line with the previous ATTO benchmarks, with perhaps some slower results mixed in. These are probably highlighting more of the 'real worst case' scenarios.

It will be interesting to see, after the pool rebuild and a retest of everything separately, how the individual tests fare.

Sync Disabled / Sync Always

Playing with AS SSD Benchmark Bandwidth-vs-IOPS.

Again this tool is showing what appears to be more realistic worst case values.

Last but not least... Playing with Windows Explorer file transfers....

This is where I'm confused a bit....

Knowing the VM's VMDK is hosted on VMFS5 via iSCSI via ZFS, and seeing the various benchmarks push single and multithreaded workloads at various IO sizes, I would expect similar results inside Windows Explorer when copying large-block sequential data to a 1MB block size VMFS file system.

When I start to copy a 4GB file, it will go about 1 GB/s for the first 2 seconds, then drop down to 30 MB/s for a bit, and then climb back up quickly to 500-900 MB/s, then back down. I'm not sure if I'm 100% optimized on my IPoIB and iSCSI setup, so I'm troubleshooting a bit more on my tunings to make sure my tests are valid.

I created a 48GB RAM drive on my Windows VM (it benchmarks at 4.9 GB/s read/write).

Copied the file from the RAM drive to C:\ (Hitachi pool w/ SSDs, Sync=Always).

But just when I thought I had it working how I wanted, sure enough, there's a weird inconsistent performance issue to be solved.

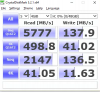

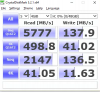

For reference, my home RaidZ2 pool of 5900 RPM drives has no problem maxing out 1GigE consistently, writing or reading to/from Windows.

Now, if I'm copying pictures or other smaller files the transfer rate fluctuates, but when I move ISOs around it's always 100MB/s (Windows says it goes 100-110, so take that FWIW).

From what I can tell, it looks like a faulty pointer in the Solarish SMB2.1 stack caused the panic.

I wonder if it's because I removed the Hitachi pool without double-checking whether it still had a file system, and I bet that file system had an SMB share (thus the NULL pointer).

BTW, I've never troubleshot a SunOS kernel panic before, so if I actually found the cause, I'm quite happy with my Google-fu.


Welcome to OmniOS kernel panic-land! :-D

Well, that's not good...

Was typing up a work email, and all of a sudden my Putty session to OmniOS unexpectedly closed.

I walk over to the server closet room, and sure enough Kernel Panic/Dump.

Time to start poking around on why....

View attachment 4260

Seen both kernel panics AND tcp buffers exhausted...even w/ TCP tuning applied. FreeNAS FTW!

EDIT: stmf is a COMSTAR stack component so I'd say it was a iSCSI induced kernel panic not SMB.

/SadPanda's cry unicorn tears...

If it's a user-error panic, I can live with it... and I was really hoping that's what it was. If it's an iSCSI-induced kernel panic, and not SMB, then IDK. I totally did the proper teardown of the iSCSI setup:

1) Unmounted the datastore, deleted the datastore in ESX

2) Comstar > Views > del view

3) Comstar > Logical Units > delete LU

4) Comstar > Volumes > delete volume

5) Pools > Destroy (verified no volumes/partitions were visible under pool)

I kinda need a stable iSCSI service.

And FreeNAS pukes on Mellanox CX2 IB adapters, which is why I'm entertaining Solarish.

A little more Google-fu leads me right back to these forums, where Gea posts about how IB is pretty much EOL. So it could be that my older hardware/drivers on the CX2 adapter contributed to the stmf issue. (Who knows?.. literally.. who knows?)

I wonder if Oracle Solaris would be a more stable platform for napp-it/Comstar under the same hardware environment.


To be honest, I've been running OmniOS for about 5 years now in prod and test and also at home, and have yet to have a kernel crash that wasn't because of a dumbass fault of my own.

Honestly, the only reason I ventured into the less-common ZoL land 2.5 years ago was that the ONLY platform Mellanox really supports and cares for is Linux. Since then my system has survived CentOS 7.0->7.1->7.2->7.3 upgrades and multiple kernel updates in between + countless hardware failures (mobo, video card, controllers, disks), but I've never ever had a kernel panic.

Yes, it's annoying you need to compile SCST manually, and sometimes after a kernel/Mellanox OFED/SCST source update it won't compile, but I have always been able to fix it relatively easily, and then a proper fix arrived in due course.

This is not to mention that Linux is the only environment where you have SRP support AND iSER over both IB and Ethernet modes, and all the Mellanox hardware is supported, including some weird beasts like Connect-IB (yes, I tried it too...)

Interesting... I feel like I'm focusing on Mellanox support above all else at this point. I'm not willing to give up the 4036-E switch and the cheap cards/cables. And I want 'near SSD' speeds across the wire, so I don't have to slice all my storage up locally per machine.

I feel like I'm far from done with this setup ATM, but CentOS ZoL might be in my future as well. I'll of course have to have you jump on my WebEx and help me set it up though.

One more finding... I destroyed the Hitachi pool w/ SSD caching, and re-created the Intel SSD RAID1/0.

I wanted to go back and compare using the CrystalDiskMark test on the Windows VM.

Intel Pool (RAID1*2) – ZFS Volume presented via iSCSI/SRP with MPIO (RR/SRP targets)

LZ4 Compression Enabled

Sync Disabled (QD16) / Sync Always (QD16)

-versus-

Hitachi Pool (RAIDz1*2 with SSD) – ZFS Volume presented via iSCSI/SRP with MPIO (RR/SRP targets)

LZ4 Compression Enabled

Sync Disabled (QD16) / Sync Always (QD16)

Another strong case that the Intels can be used to speed up the HDD pool for ESX/iSCSI with Sync enabled.

If anything, the Hitachis speed up the Intels in the workloads that are better suited for HDD.

"...I want 'near SSD' speeds across the wire..."

Well, the real beauty of InfiniBand (with RDMA) + ZFS is that you have BETTER THAN SSD speeds across the wire, thanks to the massive in-memory caching on the server side...

"I'll of course have to have you jump on my WebEx and help me set it up though."

That would be a bit difficult to organise, considering I'm in the land down under and the work week has already started here :-(

OK, so I've evacuated all the active VMs from my HDD pool to make it a little less noisy. Then I changed just one parameter of my SCST target using its sysfs interface (ah, the beauty of Linux) without even restarting the service:

echo 4 > /sys/kernel/scst_tgt/devices/vmfs/threads_num (used to be one, this is per initiator).

Now, I'm getting totally ridiculous speed:

Now, the write speed is definitely like this because I have the nv_cache option set to 1. I'm not sure to what extent it's safe to run it this way. I do have a good UPS and my server has never crashed in 2.5 years, but still... Here's what the same system looks like with sync=always:

Clearly, this sucks; it's a real pity to lose heaps of performance this way...

Try doing a 5GB file 3-5 times instead of a 1GB one, since you're at >1GB/s.

You could also rerun only the tests where you're at or near 1GB/s instead of all of them, if you wanted.

Just another thing to think about.

It's funny you guys mention following Mellanox. I did ZoL earlier this year for NVMe support, and will still likely run Ubuntu, just because it's so up to date with NVMe and other drivers that it seems to 'fit' best; it also worked for iSCSI easily. I've yet to try my 4036 or 4036E with my Mellanox cards, though. Sounds like between you 2 it shouldn't be too tough on me.

They're sooo cheap

36 ports @ $225 = $6.25 / port!!

Got the dual-port 40Gb QDR PCIe 2.0 x8 adapters for $38 ea, and the 5 and 7 meter cables for $15 ea.

It's like $68 per dual-port 40Gb NIC plus cables,

so like $80 per host for dual 40Gb raw IB (which is very much like a serial port/protocol) until you bind stuff on top of it. Driver/protocol support is limiting (especially when you use the CX2 stuff from 5+ years ago), but I'm still determined to find a way to make it work.
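The per-port and per-host figures can be rechecked with a few lines. This is just a sketch of the arithmetic using the prices quoted in the post; the variable names are made up for illustration, and it assumes each host uses two switch ports and two cables:

```python
SWITCH_PRICE = 225.0    # 36-port switch, price from the post
SWITCH_PORTS = 36
NIC_PRICE = 38.0        # dual-port QDR adapter
CABLE_PRICE = 15.0      # per cable
PORTS_PER_HOST = 2      # assumption: both NIC ports are cabled to the switch

per_port = SWITCH_PRICE / SWITCH_PORTS
nic_and_cables = NIC_PRICE + PORTS_PER_HOST * CABLE_PRICE
per_host = nic_and_cables + PORTS_PER_HOST * per_port

print(f"switch cost per port: ${per_port:.2f}")
print(f"NIC + cables:         ${nic_and_cables:.2f}")
print(f"total per host:       ${per_host:.2f}")
```

Shipping and any spare cables are not included, so treat the total as a floor rather than an exact budget.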

I could never find the Mellanox Fabric Manager GUI, but I did manage to get a very basic setup done via a null modem serial cable, and then manage it via telnet. All you really have to do is set up the mgmt IP, and then SCP to the switch to create this partition.conf file (kinda like VLANs). I used a basic template that gave me a default ALL, plus 8 unique partitions.

So far on OmniOS I'm just using partition FFFF, which is like VLAN0 (default/ALL/non-VLAN).

Which is likely why my SRP targets are cross-connecting.

2 Initiators - 2 Targets - 1 LUN - 4 Paths (should technically be 2 for proper iSCSI MPIO)

I'm 'ghetto' fixing it by just disabling the paths in ESX for now.
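To illustrate why one shared partition yields 4 paths while isolated partitions would yield 2, here is a small sketch. The port names and the partition labels "A"/"B" are hypothetical placeholders, not real GUIDs or P_Key values from this fabric:

```python
from itertools import product

# Hypothetical port names, for illustration only.
initiators = ["esx-port1", "esx-port2"]
targets = ["omnios-port1", "omnios-port2"]

# Everything in the default partition: every initiator port sees every target port.
all_paths = list(product(initiators, targets))

# Hypothetical layout: one partition per initiator/target pair.
partition = {
    "esx-port1": "A", "omnios-port1": "A",
    "esx-port2": "B", "omnios-port2": "B",
}
isolated_paths = [(i, t) for i, t in all_paths if partition[i] == partition[t]]

print(len(all_paths), "paths with a single shared partition")
print(len(isolated_paths), "paths with one partition per pair")
```

Disabling the two cross-connected paths in ESX gets to the same path count by hand; separate partitions would presumably do it at the fabric level.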

Which is why when people say IB is dead, go 10GbE, I refuse to listen. Good 10GbE copper dual-port cards are expensive. Good 10GbE copper managed switches are expensive. 56Gb/112Gb InfiniBand is 'current', and in today's 'storage world' it's all about that cluster interconnect. So I'm looking for which storage OSes support it now and in the future.

If the story was 'we support the CX3/4 but not the CX2', I'd understand (like some modern OSes). But to say IB is dead is sad. Because FC is dying, iSCSI was never worth a lick, and NVMe is PCI based. I have seen beta products for high-speed NVMe fabrics. I've also seen FC vendors trying to encapsulate NVMe and switch it. But line speeds of 16Gb/s and 32Gb/s with protocols inside protocols aren't going to be the solution.


OK, so I blew away my previous 2 x Intel S3610 480GB (RAID1) array and recreated it with blocksize=32K (instead of the default 8K). Also, I increased the ARC size to 24GB (I reckon the OS will be fine with the remaining 8GB). Now it looks truly insane:

With 4GB file, it looks like we're starting to hit the actual drives:

Now the same with sync=always and an S3610 120GB (underprovisioned to 16GB) as an SLOG:

And now without the SLOG:

Clearly, the SLOG helps, even on an SSD-only pool, especially for random writes. The biggest question is whether it's worthwhile to stick with sync=always, given the performance penalty on the one hand and the possible risk to the data from turning it off on the other.

The answer is, it depends. Do you have good backups and can you tolerate down time for restoring them? Note that write cache is limited to a few seconds in ZFS, and the pool/filesystem are not at risk. Just those few seconds of data.

For a large enterprise database server, yes, sync=always. Then throw devices/money at it. For a home environment, I run sync off. It's all about risk/reward ratios. For me, the loss of a couple seconds of data is a non issue. And it hasn't happened in the years I've run ZFS anyway. But what's important to you is the question. The performance gain is worth the small risk to me.

It's a bit like the people that run non-redundant arrays at home with backups. Sure, the whole thing dies if one disk does. But it's more flexible and performance is excellent. I'm in between. I want redundancy, but I will risk a little to get some of the speed back.

If you turn sync off, don't bother with SLOG as it only affects sync writes.

Nice thing is, you can choose per filesystem.
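To put a rough number on "a few seconds of data", here is a back-of-the-envelope sketch. It assumes about 5 seconds of buffered writes (an assumption standing in for "a few seconds"; the real window depends on tuning and on how much dirty data ZFS allows), and the write rates are simply figures mentioned earlier in the thread:

```python
def at_risk_mb(write_mb_per_s: float, buffered_seconds: float = 5.0) -> float:
    """Rough estimate of unsynced data (MB) that could be lost on a crash with sync off."""
    return write_mb_per_s * buffered_seconds


# Write rates seen in this thread: slow dip, GigE line rate, fast burst.
for rate in (30, 100, 900):
    print(f"{rate} MB/s -> roughly {at_risk_mb(rate):.0f} MB in flight")
```

Whether losing that much recent data is acceptable is exactly the risk/reward call described above.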
