Hi, I've just rebuilt my 2-node cluster on Server 2019 and set up S2D. I'd been running 2016 since it came out, with 4x Samsung SSDs (PM863) per node, and always experienced erratic performance. It wasn't bad, but I always thought it should be better. Now I have two nodes running 2019, each with 4x Toshiba HK4 SSDs (on the MS SDDC list) and 2x Intel P3700 NVMe SSDs (also on the list). The nodes report the storage pool as power protected, so I think the hardware is working as it should. I've created a couple of volumes using these commands:

New-Volume -StoragePoolFriendlyName S2D* -FriendlyName CSV-01 -FileSystem CSVFS_REFS -StorageTierFriendlyName MirrorOnSSD, Capacity -StorageTierSizes 180GB, 2000GB

New-Volume -StoragePoolFriendlyName S2D* -FriendlyName CSV-02 -FileSystem CSVFS_REFS -StorageTierFriendlyName MirrorOnSSD, Capacity -StorageTierSizes 180GB, 1500GB

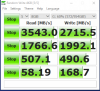

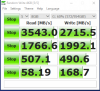

My only real concern is small writes: 4K at QD1. They appear to be no better than on the old setup (or not by much) and, strangely, noticeably worse than in a test I did a few months back using a couple of random desktops with just the 4x Toshiba drives in each node. I have verified that tiering is working: Windows Admin Center shows heavy writes to the P3700s when benchmarking. Here is a quick benchmark that shows what I'm talking about; look at the bottom-right number, where the 4K writes are pretty low.

I fully admit I could be off my rocker even complaining, but if anyone has any ideas on how to improve performance, I would appreciate it! There are only a couple of test VMs on the cluster, so I can blow it away and start over if need be.

A bit more info about each node:

E5-2699v4

256GB RAM

Supermicro X10 board with built-in LSI 3008 flashed to IT mode (the Toshiba SATA SSDs run on this)

ConnectX-3 Pro NIC for comms; verified RDMA is working properly at 40Gbps for the storage network
