Parallax raises a number of excellent points. I don't necessarily agree with all of them, but they are certainly valid from a certain POV.

I will dive a bit into the hardware, having spent a few months building up a few systems based on this board in small ITX cases. My use cases were relatively well defined, though, and honestly I think you may not have thought through all of yours, and that is okay! Build, give yourself room to grow, and if possible make design decisions that don't hamstring you in 6-12 months (and cost you a bunch of wasted money and time).

The X10SDV-4C-TLN2F is a good, sound choice for a basic NAS with a light virtualization load.

Buy a board off the bay that has an active CPU cooler, *or* be prepared to fit an aftermarket cooler that is either very large (watch your chassis constraints) or actively cooled.

Read the manual.

With your listed hardware choices you will use the 24 pin ATX style power plug on the motherboard.

DO NOT use the 4-pin power plug that looks like a CPU power plug.

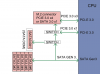

If you use all 6 SATA ports you will drop a PCIe 3.0 x1 lane from your M.2 slot (see the manual).

If you run 10GbE on this board, the large heatsink behind the VGA port will get very hot and will likely need more airflow than you planned.

If you run 1GbE you'll be fine.

The motherboard supports PCIe bifurcation on the x16 slot. There are some exotic risers you can consider to add more hardware.

Memory

If you are buying the motherboard from the bay, look there for memory too.

Don't buy 8GB sticks. Look at either 2x 16GB or 2x 32GB.

You could also start with a single 16GB or 32GB stick, at some cost in performance, and add another one later as funds allow.

Storage

If you build it you will fill it. I've been telling this to folks for 25 years.

If you think your initial target is 4TB usable, then build for 6 or 8TB on day 1. If your initial target is 2TB, go with 4TB.
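As a rough sketch of that rule of thumb: the two examples above work out to roughly 1.5x to 2x of the initial target. The multipliers and the function name `build_target_tb` are my own reading of those examples, not a hard rule.

```python
def build_target_tb(initial_target_tb, low=1.5, high=2.0):
    """Return the (low, high) usable capacity range, in TB, to build for on day 1.

    The 1.5x and 2x multipliers are assumptions inferred from the
    "4TB -> 6 or 8TB" and "2TB -> 4TB" examples in the post.
    """
    if initial_target_tb <= 0:
        raise ValueError("initial target must be positive")
    return (initial_target_tb * low, initial_target_tb * high)


if __name__ == "__main__":
    for target in (2, 4):
        lo, hi = build_target_tb(target)
        print(f"target {target}TB usable: build for {lo:g} to {hi:g}TB")
```

If in doubt, lean toward the high end of the range, since you will fill it.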

If you want new drives with warranty, great.

Used 4TB SATA or SAS drives are a little more than 1/3 of the cost of your new drives. Just want to point this out.

Used 8TB SATA or SAS drives are easily the same cost as your new 4TB IWP.

Whether new or used, do plan on running badblocks on your drives as soon as you get them, i.e., build and test the server first, then buy drives.
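For the badblocks step, here is a minimal sketch that only builds the command line so you can review it before running anything. The helper name `badblocks_command`, the 4096-byte block size, and the `/dev/sdX` placeholder are my assumptions; the flags themselves (`-b`, `-s`, `-v`, `-w`, `-n`) are standard badblocks options. Note that `-w` is the destructive write test and erases the drive, which is fine for a drive that is still empty.

```python
import shlex


def badblocks_command(device, destructive=False, block_size=4096):
    """Build (but do not run) a badblocks command line for one drive."""
    if not device.startswith("/dev/"):
        raise ValueError("expected a device path such as /dev/sdX")
    # -b: block size in bytes, -s: show progress, -v: verbose output
    cmd = ["badblocks", "-b", str(block_size), "-s", "-v"]
    # -w: destructive write-mode test (ERASES the drive)
    # -n: non-destructive read-write test (slower, keeps existing data)
    cmd.append("-w" if destructive else "-n")
    cmd.append(device)
    return cmd


if __name__ == "__main__":
    # /dev/sdX is a placeholder; substitute the real device after checking it twice.
    print(shlex.join(badblocks_command("/dev/sdX", destructive=True)))
```

Expect a full destructive pass on a large drive to take a long time, so plan the burn-in before you need the storage.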

NVMe: don't waste this on a boot device, especially if you are going to run Scale, Core, or Prox.

Think about having a cold shelf spare drive for your main storage and one for your boot drives.

Case

If it works for you, great!

If you don't need hot swap look at Core's Node series.

There is also nothing wrong with putting an ITX board in an mATX or mid-tower case.

Power

For me: whatever works, has the right number and types of connections, is relatively quiet, has a good reliability history, and fits the budget.

What you didn't list or mention

Chassis build out

Get all the hardware you need to fully connect (data and power) all the bays in your chassis whether you fully fill the bays or not.

Boot

How important is it that this server is up? If it is 24/7 and people get mad when it goes down, plan on a software RAID boot pool.

You can configure that with TNS or Core, and with plain-Jane Linux distros.

Want new boot drives? Something like the Inland (US) 120GB SSDs are about $20.00 each.

Used? You can get Intel DC S35xx 80 or 120GB drives for that too.

HBA

You have 5 SATA ports, 6 if you burn an M.2 PCIe lane. Your case supports 8 3.5" drives and 4 2.5" drives. That is 12 bays against at most 6 onboard ports, so filling the case means adding an HBA.
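The arithmetic behind the port and bay counts above, as a small sketch. The function name `extra_ports_needed` is hypothetical; the numbers come straight from the post.

```python
def extra_ports_needed(bays_35, bays_25, onboard_sata):
    """Return how many drive ports an HBA must add to connect every bay."""
    total_bays = bays_35 + bays_25
    # Never negative: onboard ports may already cover every bay.
    return max(0, total_bays - onboard_sata)


if __name__ == "__main__":
    # 8x 3.5" bays and 4x 2.5" bays, with 5 onboard SATA ports (M.2 lane kept)
    print(extra_ports_needed(8, 4, 5))
    # Same case with all 6 SATA ports in use (M.2 loses a PCIe lane)
    print(extra_ports_needed(8, 4, 6))
```

Either way the shortfall is 6 or 7 ports, which is why the single PCIe slot matters so much on this board.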

Backup

Do you already have a plan to backup this server?

If not build it into your budget.

Plan on backups running on day two so you don't put it off.

Media Server and this board.

If your media is already transcoded to what you want, great (i.e., pass-through playback).

I don't recommend trying to transcode using this board. Use the PCIe slot for an HBA, not a GPU, or use bifurcation to get both.

If you need transcoding, then look at a TMM as a bare-metal Plex, Emby, or Jellyfin (or whatever your media management is) server.

VMs and containers on NVMe

Putting critical VMs or containers on an unprotected NVMe drive is asking for trouble. See Backup.

Better, figure out how to configure mirrored NVMe for VMs and containers.

Don't get hit by decision paralysis. At the same time, from personal experience I can tell you it is frustrating to order a bunch of stuff, start putting it together and trying to use it, and then think DRATS, I should have done XYZ.