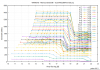

In the meantime the tests completed - still no significant difference between:

6 x 8GB + a 16GB NVDIMM module (with PGem) with 36 threads (arraysize 4GB):

6 x 8GB with 36 threads:

Will run a final test with 8GB and 36 threads as a final comparison point (to see whether there is any difference at all).

If that doesn't show any difference either, I'll need to check the BIOS for anything odd - this was an NVDIMM test box, so maybe I misconfigured something while trying to get the NVDIMM firmware updates working (don't ask).

P.S. I can't attach the PDFs or the stats file since they are too large. Let me know if you want to see different results.
