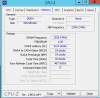

Does anyone know of a tool (any regular OS) that will show current memory configuration?

In particular, I'm looking for channel interleaving, maximum bandwidth, clock speed, latencies and so on.

Background: I want to run an NVDIMM module, and nobody can tell me how the BIOS and OS will actually handle it.

- The reason for the uncertainty is that at boot time the module is a regular RDIMM, and only after driver support has been loaded does it get taken out of the memory pool and moved into a special/storage device pool.

- So how does the system react to that?

- Does it change the previously decided interleaving pattern?

- Does it add or remove bandwidth...
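
For the Linux case, one partial check I can think of is comparing the physical address map before and after the driver loads. The sketch below assumes the kernel's `/proc/iomem` labels ordinary memory as `System RAM` and NVDIMM ranges as `Persistent Memory`. The function name `summarize_iomem` and the sample map are made up for illustration. It says nothing about interleaving or timings, only whether a range has left the regular memory pool.

```python
import re
from collections import defaultdict

# Matches top-level /proc/iomem lines such as "00100000-7fffffff : System RAM".
# Nested entries are indented, so they do not match and are skipped.
LINE = re.compile(r"^([0-9a-fA-F]+)-([0-9a-fA-F]+) : (.+)$")

def summarize_iomem(text):
    """Return total bytes per label for top-level address ranges."""
    totals = defaultdict(int)
    for line in text.splitlines():
        m = LINE.match(line)
        if m:
            start = int(m.group(1), 16)
            end = int(m.group(2), 16)
            totals[m.group(3).strip()] += end - start + 1  # ranges are inclusive
    return dict(totals)

# Hypothetical sample map, not taken from a real machine.
SAMPLE = """\
00001000-0009ffff : System RAM
00100000-7fffffff : System RAM
  01000000-01ffffff : Kernel code
100000000-17fffffff : Persistent Memory
"""

if __name__ == "__main__":
    # On a real system, read /proc/iomem as root; unprivileged reads show zeroed addresses.
    for label, size in summarize_iomem(SAMPLE).items():
        print(f"{label}: {size / 2**20:.1f} MiB")
```

Running this once right after boot and once after the NVDIMM driver is loaded, then comparing the `System RAM` totals, would at least show whether the module's capacity moved out of the regular pool.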