EU Lenovo ThinkCentre M910x barebone with i5-7500 €85,44

- Thread starter MBastian

- Start date

Before I pull the trigger on these, just to confirm: if I order them through the German site (eBay doesn't ship to my country), will the power brick / supply be included?

According to the "Lieferumfang" (scope of delivery) on the website, yes. I got a 135W one with it.

After investigating the PCI riser issue a bit, I suspect that the P320/M910x riser is slightly different from its successor. I found some Reddit posts where people were not able to fit an I3x0-T4 card into a P320 in place of the normally installed GPU card.

The riser slots in the Tiny4 and Tiny5 generations are indeed not the same. They are physically identical, so it does fit, but part of the pinout doesn't match up. Luckily the power pins are the same, so at least it won't fry anything, and the first 5 PCIe lanes also line up, but after that it's all shifted a little. Best case you're going to get an x4 link out of it; worst case it might just not work.

The riser I've bought from ebay works but its PCI slot is most likely slightly lower than the original P320 one. But now cards with components on their back can collide with at least one of the heatsink screws.

With a bit of work I could probably shorten the screws so that they no longer protrude, in the hope that this will be enough to fit my I340-T4. Since I am totally fine with a two-port card, I searched a bit and ordered a used Fujitsu D3035-A11 card, which should hopefully fit without modifications.

I placed an order for 3 units (without RAM or storage) on the 31st of December and received them on the 15th or 16th of January (Germany -> Finland). They were very well packaged, and very clean-looking 135W external PSUs were included. SATA cables and cages are also present and, as a small positive observation, the SATA cables were secured to the drive cage with tape for transport. These units included serial and HDMI ports in the add-on bracket. The units are definitely used (as clearly stated), with small imperfections here and there but nothing major. A bit of very fine dust is present inside the units, which does not affect functionality. I will do a thorough dusting and repasting now that I have verified that each unit works correctly (flashed to the latest BIOS and one pass of memtest with 2x16GB RAM; waiting for 2x32GB RAM sticks to arrive). The CPU fans do have varying degrees of bearing whine, but I'm somewhat sensitive to noise from homelab hardware, as all the devices are located in our living space, in an office room where I work during the day.

I intend to run these as virtualization hosts with 10Gbps network adapters while testing out shared and hyperconverged storage. Very likely I will settle on a Proxmox cluster, as I have already built a 3-node HP EliteDesk 800 G2 mini cluster with 2.5GbE USB adapters. As is common knowledge, a riser/adapter is needed for the PCIe x8 slot; I acquired mine from the eBay listing "01AJ902 For Tiny 4 PCIE Riser Card ThinkCentre M910Q M910X P320 Tiny Workstation". The NICs I ordered were HP 546SFP+ from piospartslap.de, which I successfully reflashed to generic Mellanox X3 Pro firmware (fw-ConnectX3Pro-rel-2_42_5000-MCX312B-XCC_Ax-FlexBoot-3.4.752.bin). With a NIC installed in the riser and the unit booted into Clonezilla, I can verify that the reflashed NICs are identified and that they work, at least to the extent that they receive an IP address from the DHCP server and respond to ping on the network. I haven't had time to test throughput or anything else yet.

Now I'm looking into buying an A+E key -> M key M.2 adapter so that the virtualization host OS can be installed on a 2230 NVMe, as this would leave both 2280 M.2 slots for host storage (ZFS and/or CEPH). I know CEPH is pushing it with these units, and I'm worried that the i5-7500 CPUs will be overstressed running CEPH and VMs, but this is the fun in homelabbing: finding out and then buying more advanced stuff.

I installed a 2.5Gbps network card and run ceph on the SATA SSD, so ceph "works".

My proxmox backup server daily backups are a bit slow though - but it works, which is what is important.

If I could get enterprise M.2 2280 SSDs for a decent price, I would switch to using a SATA SSD in one of the M.2 slots and install a 10Gbps NIC, and I think it would run much better than what I have now with ceph - not amazing, since it will only be 3 OSDs, but usable.

Out of curiosity - what kind of power consumption do you get with these NICs? I think the Mellanox NICs will prevent ASPM from being used and, as a consequence, cause higher power consumption?

At the moment I'm looking into Micron 7300 PRO 960GB 2280 drives, as these have PLP and probably easily enough performance to saturate the host/network with CEPH(?). The result would be:

- 2 OSDs per host (2 x 960GB)

- 1 dedicated 10GbE link for CEPH per host

- If I'd be OK with running 2.5GbE USB adapters for VM traffic (as the embedded 1GbE NIC is dedicated to management and corosync), this would free up an additional 10GbE link for CEPH, but I'm doubtful about the benefits for CEPH. I don't like USB NICs, even though the current Asus USB-C2500 adapters have worked perfectly with the previous HP mini cluster.

- (only) 4 cores of i5-7500 per host - note: these cores are also shared with running VMs on the host
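As a rough sanity check on the plan above, here is a minimal sketch of the capacity and bandwidth arithmetic. It assumes 3 nodes and a replicated pool with size 3; neither pool setting is stated in the thread, so treat both as assumptions. The function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope numbers for the planned Ceph layout.
# Assumptions (not from the thread): 3 nodes, replicated pool with size=3.

# usable_gb NODES OSDS_PER_NODE OSD_GB REPLICAS -> capacity in GB (integer division)
usable_gb() {
  local nodes=$1 osds=$2 osd_gb=$3 replicas=$4
  echo $(( nodes * osds * osd_gb / replicas ))
}

# link_mb_per_s GBIT -> theoretical line rate in MB/s (decimal units, no protocol overhead)
link_mb_per_s() {
  local gbit=$1
  echo $(( gbit * 1000 / 8 ))
}

echo "raw:    $(usable_gb 3 2 960 1) GB"   # replicas=1 gives the raw total
echo "usable: $(usable_gb 3 2 960 3) GB"
echo "10GbE:  $(link_mb_per_s 10) MB/s"
```

With these assumptions the cluster would have 5760 GB raw and 1920 GB usable before any headroom is reserved, and a single 10GbE link tops out at a theoretical 1250 MB/s.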

I will look into this when I have the nodes set up and running as a PVE cluster. The HP EliteDesk 800 G2 minis with 1 SATA disk, 1 NVMe disk and 1 2.5GbE USB NIC were idling at around 10W per node, so this is the reference for these Lenovo Tinys.

One device has the following items:

- Intel i5-7500

- 2 x 32GB RAM

- 2 x SN700 1TB NVMe

- Mellanox ConnectX-3 Pro 2-port NIC

Kernel parameters had to be set to "quiet pcie_aspm=off" because SN700-related error messages spam the system log otherwise. This was the same with the HP EliteDesk minis. I don't know how the Mellanox would behave if these WD NVMes didn't require setting pcie_aspm=off.
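For anyone repeating this: on a GRUB-booted Proxmox install the parameter normally goes into GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub followed by update-grub, while systemd-boot installs use /etc/kernel/cmdline followed by proxmox-boot-tool refresh. Which of the two applies to these nodes is not stated in the thread. The sketch below only checks whether the parameter actually took effect after a reboot; has_kernel_param is a made-up helper name.

```shell
#!/usr/bin/env bash
# has_kernel_param PARAM [CMDLINE]
# Succeeds if PARAM appears as a whole space-separated word in the kernel
# command line. CMDLINE defaults to the running kernel's /proc/cmdline.
has_kernel_param() {
  local param=$1
  local cmdline=${2-$(cat /proc/cmdline)}
  case " $cmdline " in
    *" $param "*) return 0 ;;
  esac
  return 1
}

# Example with an explicit command line string instead of /proc/cmdline
if has_kernel_param "pcie_aspm=off" "quiet pcie_aspm=off"; then
  echo "ASPM is disabled on the kernel command line"
fi
```

On a node itself, calling has_kernel_param "pcie_aspm=off" without the second argument checks the running kernel.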

Idling without any activity or network traffic = 13-14W

That is pretty good. Although it must get pretty toasty inside that machine with 2 x NVMe drives and the ConnectX card - so the fans are spinning quite a bit, I guess?

Please note, I'm a total novice with CEPH; at this point I'm interested in finding out, in a simple and efficient way, whether the M910x is a reasonable platform for running shared storage and compute at the same time in a homelab environment.

I did a quick setup of 3-node CEPH storage with 1 OSD (1 x SN700 1TB) per host (basically a clickety-click setup in the Proxmox GUI) and ran some stress tests against the CEPH storage. No additional configuration was done on the CEPH setup. The CEPH backend and frontend networks share a single 10GbE link on each node.

I used the following rados test (source):

Bash:

rados bench -p NVMe-pool 600 write -b 4M -t 16 --run-name `hostname` --no-cleanup

The SN700 NVMe does seem to run hot in general, but I'm really wondering whether these temps are too much and whether CEPH is maybe too much for these tiny units. Additional cooling for the M.2 slots on the bottom is hard to do without modifying the case, or placing the unit on its side and directing external airflow onto the bottom of the unit. In my opinion these measures somewhat defeat the purpose of the small size of the units.
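Since the write test above was run with --no-cleanup, the benchmark objects stay in the pool. That allows read tests afterwards, but it also means they should be removed when done. A minimal sketch that only prints the follow-up commands rather than running them; the 60-second default and the helper name are assumptions, not something from the thread:

```shell
#!/usr/bin/env bash
# Prints (does not run) the read benchmarks and the cleanup that could follow
# a `rados bench ... write --no-cleanup` run.
# rados_followup_cmds POOL RUN_NAME [SECONDS]
rados_followup_cmds() {
  local pool=$1 run_name=$2 seconds=${3:-60}
  echo "rados bench -p $pool $seconds seq -t 16 --run-name $run_name"
  echo "rados bench -p $pool $seconds rand -t 16 --run-name $run_name"
  echo "rados -p $pool cleanup --run-name $run_name"
}

# The run name must match the one used for the write test (the hostname there)
rados_followup_cmds NVMe-pool tiny1
```

The seq and rand modes read back the objects written by the earlier run, so the cleanup line belongs last.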

Maybe a shared external NFS storage is the way forward and the 10GbE NICs are beneficial in this setup also.

Then there is the way of local ZFS storage with M.2 slots and frequent replication between nodes where the 10GbE connection is also a benefit for the setup. But the possible high temps for the NVMes is still an issue.

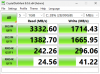

I installed a clean Windows 11 (4vCPU + 8GB RAM) and ran a basic CrystalDiskMark from within the VM.

This is where the VM disk (no cache) is on the CEPH storage:

And here it is on the local node (SN700 1TB EXT4):

I don't have any temperature readings from the Mellanox ConnectX-3 Pro card and the Tinys are in a tight space so I couldn't measure temps on the NICs anyway. If anybody has any pointers how to see the Mellanox internal temp probe readings in Proxmox please chime in.

I have ordered 4010 fans for the NICs and I'm asking my friend to print me a baffle where the fan attaches on top of the NIC. Power for the fan would come from USB port via USB cable.

Have you tried mget_temp? For me this works on a Tiny running Proxmox.

root@pve:~# lspci | grep Mellanox

01:00.0 Ethernet controller: Mellanox Technologies MT27520 Family [ConnectX-3 Pro]

root@pve:~# mget_temp -d 01:00.0

82

root@pve:~#

Thanks for the help; for some reason I didn't even think about the official Nvidia tools.

First I downloaded the latest version of the tools (mft-4.30.0-139), but mget_temp didn't recognise/support the now old X3 Pro card. The output of the mget_temp command was:

mopen: No such file or directory

I uninstalled the latest version and installed the same version of the MFT tools that I had used for flashing the generic firmware onto these HP-branded cards -> success!

Code:

root@tiny1:~# lspci | grep Mellanox

01:00.0 Ethernet controller: Mellanox Technologies MT27520 Family [ConnectX-3 Pro]

root@tiny1:~# mget_temp -d 01:00.0

71

root@tiny1:~#

I made a simple Linstor install (3 nodes, 1 x SN700 1TB per node) for comparison's sake.

The results seem promising:

It seems that Linstor is more efficient and faster with this minimum node count using NVMe storage. I dedicated one 10GbE link to Linstor, the same as in the Ceph setup. Also interesting is that the CPU load on the nodes is a lot lower compared to Ceph. I don't have good logging for the temps, but checking values during the CrystalDiskMark benchmark on the active node hosting the VM, I saw lower temps for the CPU and NVMe. Mellanox NIC temps practically didn't change at all during the benchmark; I saw them at 70-72C.
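For simple temperature logging during benchmarks, something along these lines might be enough. It is a minimal sketch that assumes mget_temp is installed and prints a bare integer, as in the outputs earlier in the thread. The 80C warning threshold and the function names are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Minimal NIC temperature logger sketch.
# Assumes mget_temp (MFT tools) works for the card and prints an integer.

# format_temp_line TIMESTAMP LABEL CELSIUS [WARN_AT] -> one CSV line
# WARN_AT defaults to 80, an arbitrary threshold chosen for this example.
format_temp_line() {
  local ts=$1 label=$2 temp=$3 warn_at=${4:-80}
  local flag=ok
  if [ "$temp" -ge "$warn_at" ]; then flag=hot; fi
  echo "$ts,$label,$temp,$flag"
}

# log_nic_temp DEVICE [INTERVAL_SECONDS] -> prints one line per reading until interrupted
log_nic_temp() {
  local dev=$1 interval=${2:-10}
  while true; do
    format_temp_line "$(date +%FT%T)" "nic" "$(mget_temp -d "$dev")"
    sleep "$interval"
  done
}

# Sample call with a made-up timestamp; on a node one would instead run e.g.
#   log_nic_temp 01:00.0 10 >> nic-temp.csv
format_temp_line "2024-01-01T00:00:00" nic 71
```

Starting the logger in a second shell before kicking off CrystalDiskMark or rados bench gives a CSV that can be compared between the Ceph and Linstor runs.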

This result gives me hope that maybe a hyperconverged setup would work after all with these Tinys. This would spare me from building an all-NVMe NAS with shared NFS storage, which I was starting to plan.