Samsung is like a box of chocolates, you never know what you're gonna get.

Avoid Samsung 980 and 990 with Windows Server

- Thread starter yeryer

- Tags: crash ssd windows server

Today my system with the 990pro crashed again:

hwinfo reports 34°c

crystal diskinfo reports 36°c

my desensitized fingers (cooking with cast iron a lot...) touching the ssd say it's a lot hotter than that

Either two different tools report bullshit or the samsung ssd/firmware...

I will return the 990 pro and order a satadom instead

(BTW health is down to 90% despite the updated firmware)

care to attach smart log?

Code:

=== START OF INFORMATION SECTION ===

Model Number: Samsung SSD 990 PRO 1TB

Serial Number: removed for reasons

Firmware Version: 1B2QJXD7

PCI Vendor/Subsystem ID: 0x144d

IEEE OUI Identifier: 0x002538

Total NVM Capacity: 1.000.204.886.016 [1,00 TB]

Unallocated NVM Capacity: 0

Controller ID: 1

NVMe Version: 2.0

Number of Namespaces: 1

Namespace 1 Size/Capacity: 1.000.204.886.016 [1,00 TB]

Namespace 1 Utilization: 18.244.898.816 [18,2 GB]

Namespace 1 Formatted LBA Size: 512

Namespace 1 IEEE EUI-64: 002538 4b214093b8

Local Time is: Mon Mar 20 14:52:21 2023 MZ

Firmware Updates (0x16): 3 Slots, no Reset required

Optional Admin Commands (0x0017): Security Format Frmw_DL Self_Test

Optional NVM Commands (0x0055): Comp DS_Mngmt Sav/Sel_Feat Timestmp

Log Page Attributes (0x2f): S/H_per_NS Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg *Other*

Maximum Data Transfer Size: 512 Pages

Warning Comp. Temp. Threshold: 82 Celsius

Critical Comp. Temp. Threshold: 85 Celsius

Supported Power States

St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     9.39W       -        -    0  0  0  0        0       0
 1 +     9.39W       -        -    1  1  1  1        0     200
 2 +     9.39W       -        -    2  2  2  2        0    1000
 3 -   0.0400W       -        -    3  3  3  3     2000    1200
 4 -   0.0050W       -        -    4  4  4  4      500    9500

Supported LBA Sizes (NSID 0x1)

Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)

Critical Warning: 0x00

Temperature: 40 Celsius

Available Spare: 100%

Available Spare Threshold: 10%

Percentage Used: 10%

Data Units Read: 447.759 [229 GB]

Data Units Written: 702.829 [359 GB]

Host Read Commands: 7.854.856

Host Write Commands: 16.103.321

Controller Busy Time: 142

Power Cycles: 11

Power On Hours: 1.671

Unsafe Shutdowns: 7

Media and Data Integrity Errors: 0

Error Information Log Entries: 0

Warning Comp. Temperature Time: 0

Critical Comp. Temperature Time: 0

Temperature Sensor 1: 40 Celsius

Temperature Sensor 2: 50 Celsius

Error Information (NVMe Log 0x01, 16 of 64 entries)

No Errors Loggedcontroller busy time has nothing to do with failures. If you get a server pull drive you are almost guaranteed to see a huge number on that attribute.by this it looks like it never crashed by temp; but controller busy time strikes me as potential issue of it hanging.

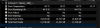

(here's one where temp issues did bring it down - also samsung.)

View attachment 27992

I'd say avoid samsung in general.
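For anyone wanting to sanity-check the counters in the SMART log above, here is a rough Python sketch. It relies on the NVMe definition of a data unit (1000 blocks of 512 bytes). The 600 TBW endurance figure is an assumption for the 1TB 990 PRO, not something taken from the log, so check it against the datasheet for your own drive. The function names are made up for illustration.

```python
# One NVMe "data unit" is 1000 blocks of 512 bytes (NVMe SMART/Health log definition).
BYTES_PER_DATA_UNIT = 1000 * 512


def data_units_to_gb(units: int) -> float:
    """Convert an NVMe data-unit counter to decimal gigabytes."""
    return units * BYTES_PER_DATA_UNIT / 1e9


def write_wear_pct(units_written: int, rated_tbw: float) -> float:
    """Percentage of the rated endurance accounted for by host writes alone."""
    return units_written * BYTES_PER_DATA_UNIT / (rated_tbw * 1e12) * 100


# Counters copied from the log above (smartctl prints them with '.' separators).
written_gb = data_units_to_gb(702_829)
read_gb = data_units_to_gb(447_759)

ASSUMED_TBW = 600  # ASSUMPTION: vendor endurance rating in TB written, verify for your model
implied_wear = write_wear_pct(702_829, ASSUMED_TBW)

print(f"written: {written_gb:.1f} GB, read: {read_gb:.1f} GB")
print(f"host writes alone imply about {implied_wear:.3f}% of rated endurance")
```

If the assumed rating is in the right ballpark, roughly 360 GB of host writes accounts for well under 1% of endurance, so the reported "Percentage Used: 10%" does not look like it comes from write volume alone. Percentage Used is a vendor-specific estimate, so treat this as a plausibility check and not a diagnosis.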

Since the Samsung Elpis controller has so many (5) R5 cores, it is a disaster waiting to happen if there's no heatsink on it. If there is no room for a heatsink, avoid high-end drives from any brand; none of them would have a low failure rate. Running the drive constantly at 70°C is basically equal to running a CPU at 100°C.

The firmware update is presumably aimed at a timing issue, to prevent read errors (which show up as "media and data integrity errors"). It obviously won't do anything for an overheated drive on which even the solder pads may start to fall off.

BTW just got a PM9A1 running 7301 FW with some data integrity error recordings on it from a friend. I will try writing large amounts of data using IOmeter and do a before-after comparison to see if the new fw can really completely mitigate the issue. But anyway, I would recommend people to stay away from new NANDs(SSV6+ BICS5 B37/47 and all YMTC/Hynix). They are all pretty problematic in their own ways. Also do not use any desktop drives in even the slightest write-intensive applications, it's not 10 years ago when fws can absolutely botch the ecc algorithm and still get away with it.

Host issues read command on cold data region with some interval. The error bits on that cold data written NAND wordline are abnormally grown by interval read and result in read UECC finally.

The Read recovery time was insufficient which is to eliminate the given voltage. When read, the certain voltage was given and then there is recovery operation follows to eliminate the voltage (= recovery operation). However, in this failure case, due to insufficient time given for recovery operation, there remains a little voltage and it disturbs other word lines.

Add: Nowadays manufacturers always set attribute 05 to follow a downward path according to data written and some other factors, even if no spare blocks were used, as shown in @i386's logs.

Samsung drives newer than the 970 (the last to use a Samsung nvme driver) are not reliable with Windows Server.

Over the past several months I've been debugging issues with both Intel and AMD servers that reset to the BIOS, after which the SSD is not detected until a power off/on cycle. I was really reluctant to suspect that the entire product line of SSDs was buggy since this is a very popular drive, but I'm certain now, and it isn't specific to a particular firmware version.

On 4 different systems using Asus, AsRock Rack, or Supermicro motherboards, a high load system would crash every couple of weeks or so without ever writing a minidump. I suspected defective drives and swapped a 1TB drive for a 2TB one, or swapped the 980 pro for a 990, but the behavior persisted. Meanwhile several systems with 970 pros and the same workload run stable for months.

After I swapped my X13SAE-F motherboard for an Asus W680-ACE (thinking the X13SAE was at fault) I tried restoring a SQL Server database from backup and observed this was 100% effective at crashing the SSD controller, causing it to disappear until a power cycle. I checked every related BIOS setting and all the SQL Server fixes regarding drive sector size to no avail.

Some of the servers that crash are running MySQL instead of SQL Server, and the only common denominator is high IO load, Windows Server 2016 or later, and Samsung 980 or later. With so many bug reports related to Samsung's firmware issues it's hard to find corroborating bug reports, so I thought I'd share this here.

Interesting. How sure are we this is a Windows Server issue?

I recently picked up a couple of 500GB 980 Pro's to use as boot drives on my H12SSL with a Milan CPU (mirrored with ZFS) under Proxmox (Debian based) based on my good luck with these drives on my Linux workstation.

Seeing this post (thanks @i386 for pointing me to this thread) has made me question if that was the right call, and if I should be looking at something else instead.

...and two systems with Sabrent Rockets are perfectly stable.

Be careful with Sabrent Rockets. I've had Sabrent Rocket 4s drop like flies on me. The originals died 2 years in. I had to fight with them to get the RMA (they wanted me to have registered the drives within days of purchase), then the RMA replacements died less than 2 years after that. I'm talking complete brick: non-responsive, does not appear as a connected device on any system.

There goes my "I only trust Intel and Samsung SSD's" shopping philosophy, as they are the only brands I've ever used that haven't failed on me.

Intel exited the market, and Samsung seems to have gone to shit. Now I have no idea what brands I can actually trust.

Trust no marketing. Better to always do your homework: check the temperature limits for the components and stay within them. I have also learned not to trust any temperature reporting from a device that may be failing. When more performance is promised and the use scenario and/or ambient temperature is above normal, an additional heatsink is needed for all brands. Slower NVMe drives don't have that issue as often, since they run out of DRAM and speeds drop, which lowers the heat output generated by the controller.

Well, I am going to make sure I put heatsinks on mine, and point a small fan straight at them, though this might be tricky what with the m.2 ports on the H12SSL series of motherboards potentially blocking longer PCIe boards if they get too tall...

My application for these drives does not involve many high IOPS databases, so I hope I don't run into this issue.

Only occurrence of this I can think of is a smallish (103 tables, 2-3GB) MySQL database (well, now MariaDB) that gets exported, backed up and scrubbed automatically every night, which keeps the drives pretty busy for a while. This is currently running on a mirrored (with ZFS) pair of 256GB Inland Premium (Gen3 Phison TLC I believe) drives I got cheaply a few years ago. I wonder if the ZFS "Copy on Write" file system helps minimize the drive load, as it makes that load more sequential. Either way, I've never had a problem with these generic budget SSD's, so I was hoping the Samsung's would be better, but maybe not...

I will say that the small 256GB TLC drives do consume write cycles faster than I would have liked (down to about 60% in two years and three months) with so many VM's running off of them, but I knew that going in and was factored into how little I paid for them. While they are ticking down relatively fast, I figured I'd swap them in place 5-6 years after install when they start getting dangerously low.
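As a rough check on that 5-6 year figure, here is a back-of-the-envelope linear extrapolation in Python. It assumes wear keeps accumulating at the same average rate as so far, which is only an approximation, and the function name is made up for illustration.

```python
def months_until_worn_out(remaining_pct: float, months_in_service: float) -> float:
    """Linear extrapolation: months left until the health indicator reaches 0%."""
    used_pct = 100 - remaining_pct
    if used_pct <= 0:
        raise ValueError("no wear recorded yet, cannot extrapolate")
    total_life_months = months_in_service * 100 / used_pct
    return total_life_months - months_in_service


months_in_service = 2 * 12 + 3  # two years and three months
months_left = months_until_worn_out(remaining_pct=60, months_in_service=months_in_service)
total_years = (months_in_service + months_left) / 12

print(f"about {months_left:.1f} months left, {total_years:.2f} years total at this rate")
```

At the rate described, the drives would hit 0% a little over 5.5 years after install, so a swap at 5-6 years is cutting it close if the write load grows.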

Anyone have any suggestions on a quick and dirty way I might tease this issue out in Linux? I can easily do a test with high sustained/sequential reads and writes using dd, but high IOPS/random data is more difficult to manufacture on demand.

fio will do random r/w, though I can never remember the commands and always have to look them up. Here's a page of examples to get you started (no endorsement of Oracle, however): Sample FIO Commands for Block Volume Performance Tests on Linux-based Instances

Note that if your drives are already down to 60%, random write tests could take a big dent out of the remaining lifetime; you might be better off just assuming these are dead and replacing them with something better soon.
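As a starting point for the random r/w test mentioned above, here is a small Python sketch that only assembles a fio command line and prints it, so it can be reviewed before anything touches a drive. The test file path, job name, and default values are placeholders chosen for illustration, not recommendations from this thread. Point it at a file on a mounted filesystem, not at a raw device, or the test will overwrite whatever is on it.

```python
import shlex


def build_fio_randrw_cmd(test_file, size="4G", runtime_s=300, read_pct=70,
                         block_size="4k", iodepth=32, numjobs=4):
    """Return an argv list for a time-limited random read/write fio run."""
    return [
        "fio",
        "--name=randrw-test",          # arbitrary job name
        f"--filename={test_file}",     # a FILE on the drive under test, not the device node
        "--rw=randrw",                 # mixed random reads and writes
        f"--rwmixread={read_pct}",     # percentage of reads in the mix
        f"--bs={block_size}",
        "--ioengine=libaio",           # Linux native async I/O
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--direct=1",                  # bypass the page cache where the filesystem allows it
        f"--size={size}",
        f"--runtime={runtime_s}",
        "--time_based",                # keep running until the runtime expires
        "--group_reporting",
    ]


# Hypothetical path: adjust to a directory on the drive you want to stress.
cmd = build_fio_randrw_cmd("/mnt/testpool/fio-test.bin")
print(shlex.join(cmd))
```

Whether direct I/O is honored depends on the filesystem, so the numbers on ZFS may not be comparable with the same job on ext4 or XFS. The write volume grows with runtime, so keep the wear warning above in mind when raising it.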

Some brands may be better or worse than others in general terms, but the better way to shop is to just avoid consumer drives and only buy hardware with full PLP, because manufacturers have a lot of incentive to avoid bugs when they sell to big enterprises with large budgets and long memories, whereas consumer drives are primarily designed to win 20 second benchmarks and cost as little as possible to make.

Thank you sir. I will look up fio commands.

The drives that are down to 60% are not the Samsung drives that I will be installing in my new server, and thus will be testing.

Can anyone speak to how quickly their affected Samsung drives have been brought down? I'm trying to figure out how much of a test is an adequate test, without consuming too much drive capacity.
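One way to put a number on "too much" is to estimate what share of the rated endurance a given test would burn. The sketch below is only arithmetic. The 300 TBW rating (for a 500GB 980 Pro) and the 500 MB/s sustained write rate are both assumptions for illustration, so substitute the datasheet value and whatever your test actually sustains.

```python
def bytes_written_by_test(write_mb_per_s: float, runtime_s: float) -> float:
    """Total bytes a test writes at a given sustained rate (decimal MB/s)."""
    return write_mb_per_s * 1e6 * runtime_s


def endurance_consumed_pct(bytes_written: float, rated_tbw: float) -> float:
    """Share of the rated endurance (in TB written) used up, as a percentage."""
    return bytes_written / (rated_tbw * 1e12) * 100


ASSUMED_TBW = 300          # ASSUMPTION: endurance rating in TB written
ASSUMED_WRITE_MB_S = 500   # ASSUMPTION: sustained write rate during the test

test_bytes = bytes_written_by_test(ASSUMED_WRITE_MB_S, runtime_s=3600)
pct = endurance_consumed_pct(test_bytes, ASSUMED_TBW)

print(f"a one hour run writes {test_bytes / 1e12:.1f} TB, about {pct:.2f}% of rated endurance")
```

Under those assumptions an hour of sustained writes costs well under 1% of rated endurance, so a few such runs are affordable, while leaving it looping for days is not.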

That is true. I take this philosophy with most hardware, but with drives the cost penalty is a little much for my home system, so instead I have just been making sure I have some redundancy and decent backups and buying consumer drives. (Except for my SLOG drives in ZFS, where I used Optanes for obvious reasons)

To date I have never lived to regret it, but this Samsung issue sounds like it could be a real problem.

I am still trying to suss out if this is primarily a Windows Server problem (in other words, safe to ignore for me) or if I should be worried.

Micron/Crucial (MX500) models. (It's a US-based company.)

Seagate is entering the market, and their offerings are quite decent.

Hynix - the con is that they don't keep offering the same capacities for very long (i.e. they cut the 500G models completely)

Toshiba/Kioxia - Looks like this is what dell has chosen.

I decided to buy some very basic thin heatsinks (so that they fit under expansion cards on my supermicro board). Going to try to finagle a small fan on top of them when I install everything.

Right now I am just running Memtest86+ from a bootable USB stick, so the NVMe drives are idle, but they are still surprisingly hot to the touch.

I broke out my little infrared thermometer, and I am reading ~51C on top of the heatsinks, which means the chips themselves are likely running MUCH hotter. That seems like kind of a lot for idle temps...

Is it maybe one of those where they stay in full power mode until the OS NVMe driver kicks in, and because of this they are running hotter than they would once booted?

Almost all nvme run hot like that without airflow. I recommend larger finstack heatsink, or proper airflow.

You can use hwinfo (Windows) or smartctl -a /dev/sdX | grep "Temperature" on Linux to read their temps while running tests. (For NVMe drives the device is normally /dev/nvme0 or similar, not /dev/sdX.)

Your best bet for great temps where you can push it without worry is to buy lets say pcie-gen4/5 nvme, and have it in pcie-gen3. (they will run at half/quarter of the wattage, and generate far less heat as new tech is more efficient at lower speeds.)

(gen4)micron 3400@gen3 @ 25'C at idle, and 39'C full load writing 100G

(i use those heatsink for my setups, with decent airflow https://www.amazon.com/JEYI-Q150-HeatSink-Aluminum-Passive/dp/B09C6GH8VV -- i think this is the best heatsink money can buy. I also use it in dell servers at work, temps are even better idle at 20'C and full load at 35'C)

They keep micron 3400@gen4 @ 39'C at idle, and 50'C at full load writing some 100G (same system as in above)

Just make sure your finstack has proper direction so air can pass through them. As they are now - they won't get any airflow over its fins.
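If you want to log temps during a test instead of eyeballing grep output, the smartctl check above can be scripted. This is a minimal Python sketch: the regular expression is written against the smartctl NVMe output format shown earlier in the thread, the device path is an assumption, and the function names are made up for illustration. Running smartctl generally needs root.

```python
import re
import subprocess

# Matches lines such as "Temperature Sensor 2:   50 Celsius" but skips
# threshold and "Temperature Time" lines, which plain grep would also hit.
TEMP_LINE = re.compile(r"^(Temperature[^:]*):\s+(\d+)\s+Celsius", re.MULTILINE)


def parse_temperatures(smartctl_output: str) -> dict:
    """Extract {sensor name: degrees Celsius} from smartctl -a text output."""
    return {name.strip(): int(value) for name, value in TEMP_LINE.findall(smartctl_output)}


def read_temperatures(device: str = "/dev/nvme0") -> dict:
    """Run smartctl on the given device (path is an assumption) and parse the temps."""
    # smartctl uses its exit status as a bitmask, so a non-zero code is not treated as fatal.
    result = subprocess.run(["smartctl", "-a", device],
                            capture_output=True, text=True, check=False)
    return parse_temperatures(result.stdout)


# Sample lines in the same format as the SMART log posted earlier in the thread.
SAMPLE = """\
Warning  Comp. Temp. Threshold:     82 Celsius
Temperature:                        40 Celsius
Warning  Comp. Temperature Time:    0
Temperature Sensor 1:               40 Celsius
Temperature Sensor 2:               50 Celsius
"""

temps = parse_temperatures(SAMPLE)
print(temps)
```

Calling read_temperatures() in a loop with a sleep while fio runs in another terminal gives a simple temperature trace. Keep in mind the point made earlier in the thread that the drive's own sensors may not tell the whole story.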

I know the fins are pointing the wrong way, which is why in my final build I will be sticking a small 40 mm fan on there to make sure I move some air over the fins. It's surprising that Supermicro would point the slots in that direction knowing that the airflow in most servers is perpendicular to that. They are usually better than that.

I just expected that they wouldn't get too hot at idle.

Thank you. I am very familiar with smartctl, I just can't run it while I am running an hours-long memtest.