I have built a NAS server using the following:

- HPE ProLiant MicroServer Gen10

- VMware ESXi 6.7 Update 3

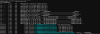

- Napp-it ZFS appliance with OmniOS (SunOS napp-it030 5.11 omnios-r151030-1b80ce3d31 i86pc i386 i86pc OmniOS v11 r151030j)

Thanks for your help