There is no physical barrier. This is more an example of the 80:20 rule, which means that you only need to spend 20% of the total effort to reach 80% of the maximum. If you want to reach the maximum, you need to spend the remaining 80% of the effort on the missing 20%.

I see it like this:

The reason is the ZFS filesystem with its goal of ultimate data security. That means more data and more data processing due to checksums and Copy-on-Write, which increases the amount of data that must be written, as all writes are done in whole ZFS blocks and there is no in-file data update. This also adds more fragmentation. As data in a ZFS RAID is spread quite evenly over the whole pool, there is hardly ever a pure sequential data stream. Disk IOPS are then a limiting factor. Without these security-related features a filesystem can be faster.
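To make the blockwise write point more concrete, here is a minimal sketch of how much data a small in-file change can cause to be rewritten. It assumes a fixed recordsize of 128K (the ZFS default) and ignores metadata, compression and RAID parity, so it only shows the rough order of magnitude. The function name `cow_bytes_written` is made up for this illustration.

```python
def cow_bytes_written(offset, length, recordsize=128 * 1024):
    """Rough estimate of data bytes rewritten for an in-file update.

    Assumption: every record touched by the update is written again
    as a whole new block (Copy-on-Write), no partial block update.
    Metadata, compression and parity are ignored.
    """
    if length <= 0:
        return 0
    first_record = offset // recordsize
    last_record = (offset + length - 1) // recordsize
    return (last_record - first_record + 1) * recordsize


# A 4K change inside one 128K record rewrites the whole record
written = cow_bytes_written(offset=0, length=4096)
print(written, "bytes written, amplification", written / 4096)

# A 4K change that crosses a record boundary rewrites two records
written = cow_bytes_written(offset=130000, length=4096)
print(written, "bytes written, amplification", written / 4096)
```

With a smaller recordsize the amplification for small updates goes down, which is one of the places where tuning can matter.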

And even with one of the best, a DC P3700, you are limited by traditional flash. That means an SSD block must be erased before a page can be written with a ZFS data block, and you need trim and garbage collection. With 3 GB/s and more you are also in regions where you must care about internal performance limits regarding RAM, CPU and PCIe bandwidth, so this requires fine tuning in a lot of places. This is probably the reason why a genuine Solaris is faster than Open-ZFS.

So you stay limited unless you can make a technological jump like with Optane, which is not limited by all the flash restrictions, as it can read/write any cell directly, similar to RAM. Optane can give up to 500k IOPS and ultra-low latency down to 10 µs, without any degradation over time and without the need for trim or garbage collection to keep performance high. This is 3-5x better than a P3700 and the reason why it can double the result of a P3700 pool.

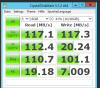

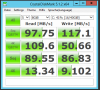

In the end you must also accept that a benchmark is a synthetic test that checks performance in a way that limits the effects of RAM and caching. Yet RAM and caching are what make ZFS fast despite the higher security approach. On real workloads more than 80% of all random reads are delivered from RAM, which makes pool performance not relevant, and all small random writes go to RAM (besides sync writes). Sync write performance in a benchmark is probably the only value that relates directly to real-world performance. Other benchmarks are more a hint that performance is as expected, or a way to decide whether a tuning or modification is helpful or not.
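As a rough illustration of the caching argument, the sketch below estimates how many random reads per second actually reach the pool for a given RAM cache hit ratio. The request rate of 100k reads/s is an assumed example value, not a measurement, and the function name `pool_read_iops` is made up for this illustration.

```python
def pool_read_iops(requested_iops, hit_ratio):
    """Random reads per second that must be served by the pool.

    Assumption: a fraction `hit_ratio` of all reads is answered
    from RAM (read cache), the rest goes to the disks.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return requested_iops * (1.0 - hit_ratio)


# Assumed example: clients request 100k random reads per second
for hit_ratio in (0.0, 0.8, 0.95):
    print(f"hit ratio {hit_ratio:.0%}: "
          f"{pool_read_iops(100_000, hit_ratio):.0f} reads/s hit the pool")
```

A benchmark that works around the cache behaves like the 0% case, while a real workload is usually much closer to the other two.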
