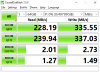

SMB - no loop - sync disabled

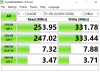

iSCSI - no loop - sync disabled

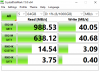

NFS - no loop - sync disabled

Notes / Remarks / Conclusions

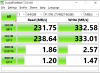

CrystalDiskMark

To be honest, I wasn't expecting much of a performance difference between SMB, NFS and iSCSI at all. I know some protocols are more resource-hungry than others, but since none of them should be able to starve my server of resources, I expected little to no difference.

So I was very surprised to see such huge differences...

1) I'm using a 64GB test set in CrystalDiskMark to (hopefully) get "more accurate" worst-case scores (so FreeNAS can't serve everything from its RAM cache).
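A quick back-of-the-envelope check of why a 64GB test set helps: with 32GB of RAM, at most half of the test file could ever sit in the RAM cache. This sketch assumes the ARC can never be larger than physical RAM; in practice it is capped lower, so the real fraction is smaller still.

```python
def max_cached_fraction(ram_size: float, test_set_size: float) -> float:
    """Upper bound on the share of the test set that can be served from RAM.

    Both sizes must use the same unit (e.g. GB). Assumption: the ZFS ARC
    never grows beyond physical RAM; the real cap is lower, so this is
    an optimistic bound.
    """
    if test_set_size <= 0:
        raise ValueError("test set size must be positive")
    return min(1.0, ram_size / test_set_size)


if __name__ == "__main__":
    ram = 32  # GB of RAM in the FreeNAS box
    for test_set in (1, 16, 32, 64):  # CrystalDiskMark test-set sizes in GB
        share = max_cached_fraction(ram, test_set)
        print(f"{test_set:>2} GB test set: at most {share:.0%} can come from RAM")
```

With 32GB of RAM, only the 64GB test set drops below 100%, to at most 50%.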

2) loop vs no loop

CrystalDiskMark scores are A LOT higher when running it in a loop. I guess this is also because FreeNAS caches more in RAM when running in a loop.

The strange thing is that simply running it several times back to back, without a loop, doesn't give the same high scores as running it in a loop. Is the RAM cache really "cleared" that quickly?

The difference is especially HUGE with iSCSI. It seems to be (at least partly) because ZFS is still completing its writes when the read test already starts...

3) NFS performance is horrible

I'm using the "Client for NFS" included in Windows 10. As far as I could find, this client only supports NFSv3, and I wasn't able to enable or force NFSv4 on it. I'm not sure whether there are better third-party NFS clients for Windows that do support NFSv4 and perform better...?

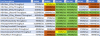

4) iSCSI and SMB are both "ok" I guess?

It seems like SMB is a little better for sequential transfers and iSCSI might be better for random transfers. But I'm not sure how many conclusions I can draw from this... Should I only compare looped results with looped results, and non-looped with non-looped?

5) "Internal Storage (hardware RAID5)" vs "Network Storage (FreeNAS RAIDZ2)"

Here too I'm not sure how many conclusions I should draw, as my RAID5 is 99% full. But at first sight, "Network Storage" seems faster than "Internal Storage" in every regard. Are there specific aspects that CrystalDiskMark doesn't test (like latency?) where the "Internal Storage" could be faster?

6) Enabling sync makes write speeds unusably slow

Do I understand correctly that having sync enabled is especially important when using the pool for block storage such as iSCSI, because with sync disabled a power loss could potentially destroy the whole partition? Whereas for NFS and SMB, the most you can lose is the file(s) you were transferring at the time of the power loss?
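To get a feel for why sync writes are so much slower, here is a small local sketch (not a measurement of the FreeNAS box): it writes the same data once with ordinary buffered writes and once with an `fsync` after every block. The per-block `fsync` is roughly what `sync=always` makes ZFS do for every write before acknowledging it. The block count and block size are arbitrary assumptions.

```python
import os
import tempfile
import time


def time_writes(path: str, blocks: int = 256, block_size: int = 4096,
                sync_each: bool = False) -> float:
    """Write `blocks` blocks of random data to `path` and return elapsed seconds.

    With sync_each=True every block is flushed and fsync'ed before the next
    one is written, so each write waits for stable storage.
    """
    payload = os.urandom(block_size)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(payload)
            if sync_each:
                f.flush()             # move Python's buffer into the OS
                os.fsync(f.fileno())  # wait until the OS reports it on disk
    return time.perf_counter() - start


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "sync_test.bin")
        async_s = time_writes(target, sync_each=False)
        sync_s = time_writes(target, sync_each=True)
        print(f"buffered: {async_s:.3f}s  fsync per block: {sync_s:.3f}s")
```

On spinning disks without a separate log device (SLOG), the fsync-per-block variant is typically many times slower, which matches the "unusably slow" experience above.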

Intel NAS Performance Toolkit

As you can see, the results are EXTREMELY inconsistent, so I'm not sure if it is usable at all. iSCSI performs unrealistically / impossibly well. I suspect this must be because of extreme compression or something?

When starting NASPT, it does warn that the results will probably be unreliable because I have more than 2GB of RAM (32GB, actually).
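One way to test the compression suspicion is to check how compressible the benchmark's data actually is. The sketch below uses Python's `zlib` as a stand-in for the LZ4 compression FreeNAS typically enables on pools; the 1 MiB sample buffers are illustrative assumptions, not NASPT's real test data. Random data barely compresses, while repetitive data (such as zero-filled blocks) compresses enormously. If the test data turns out to look like the second case, ZFS has far less to write to the disks, which would at least partly support the suspicion.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 1) -> float:
    """Original size divided by compressed size (higher = more compressible)."""
    if not data:
        raise ValueError("need some data to compress")
    return len(data) / len(zlib.compress(data, level))


if __name__ == "__main__":
    size = 1 << 20  # 1 MiB sample buffers
    samples = {
        "random": os.urandom(size),  # stands in for incompressible test data
        "zeros": bytes(size),        # stands in for highly compressible test data
    }
    for name, buf in samples.items():
        print(f"{name:>6}: {compression_ratio(buf):8.1f}x")
```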

NetData comparison

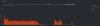

1) CrystalDiskMark phases

CrystalDiskMark first prepares the benchmark by writing (I guess) a 64GB test file(s) to the disk under test. This takes about 2 minutes.

Then it runs the read tests, starting with 2 sequential tests and then 2 random access tests.

Finally it runs the write tests, starting with 2 sequential tests and then 2 random access tests.

You can use the "Disk Usage" graph below to see which phase CrystalDiskMark is in at any given time.

2) CrystalDiskMark duration vs benchmark results

SMB took 4m20s

iSCSI took 6m10s

NFS took 5m50s

So although iSCSI scores better than NFS in the benchmark, the NFS benchmark completed faster than the iSCSI one. And although I don't know the exact duration of each phase, the graphs show that it is not just the preparation that takes longer, but also the test itself. This is weird...
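Converting the durations above to seconds makes the gap easier to see. The numbers are the approximate totals read from the NetData graphs, preparation included.

```python
import re


def to_seconds(duration: str) -> int:
    """Convert a duration like '4m20s' into seconds."""
    match = re.fullmatch(r"(\d+)m(\d+)s", duration)
    if match is None:
        raise ValueError(f"unexpected duration format: {duration!r}")
    minutes, seconds = (int(g) for g in match.groups())
    return minutes * 60 + seconds


if __name__ == "__main__":
    durations = {"SMB": "4m20s", "iSCSI": "6m10s", "NFS": "5m50s"}
    baseline = to_seconds(durations["SMB"])
    for protocol, text in durations.items():
        secs = to_seconds(text)
        print(f"{protocol:>5}: {secs}s ({secs / baseline - 1:+.0%} vs SMB)")
```

This gives 260s, 370s and 350s, i.e. iSCSI took about 42% and NFS about 35% longer than SMB.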

3) The reason why running a CrystalDiskMark loop is faster than a non-loop

In the "Disk Usage" graph I now also clearly saw why running CrystalDiskMark without a loop gives lower scores... It starts the read test immediately after "preparing" the benchmark (which means writing to the disk). In the "Disk Usage" graph, you can clearly see that ZFS is still writing while the read test is already starting. For a proper ZFS test, CrystalDiskMark should delay its read test by a couple of seconds, so that ZFS can finish writing to the disk...
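The delayed read test suggested above can be sketched as a tiny write-then-read benchmark. This is not how CrystalDiskMark works internally; the file size, chunk size and `settle_seconds` value are assumptions. An `fsync` on the client does not guarantee that ZFS on the server has finished flushing its own pending writes, which is why the sketch simply waits a fixed time. With a small file the read will also likely come from a cache, so the read figure only means something with a test file larger than RAM.

```python
import os
import tempfile
import time

MIB = 1024 * 1024


def write_then_read(path: str, size_mib: int = 64, chunk_mib: int = 1,
                    settle_seconds: float = 5.0) -> dict:
    """Write a test file, wait `settle_seconds`, then read it back sequentially."""
    chunk = os.urandom(chunk_mib * MIB)
    n_chunks = size_mib // chunk_mib
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(n_chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # client-side flush; the server may still be busy
    write_s = time.perf_counter() - start

    time.sleep(settle_seconds)  # hypothetical pause so background writes can finish

    bytes_read = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while buf := f.read(chunk_mib * MIB):
            bytes_read += len(buf)
    read_s = time.perf_counter() - start

    return {
        "write_MiBps": n_chunks * chunk_mib / max(write_s, 1e-9),
        "read_MiBps": bytes_read / MIB / max(read_s, 1e-9),
        "bytes_read": bytes_read,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_then_read(os.path.join(tmp, "settle_test.bin")))
```

Pointing `path` at a file on the SMB share, the iSCSI volume or the NFS mount (with a file larger than the server's RAM) would show whether the pause changes the read numbers.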

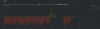

4) CPU usage comparison (this is a bit of guess / estimation work, based on looking at the graphs)

SMB: During preparation not much fluctuation, about 20% average, max 55%. During the read test about 3%. During the write test about 20% average, max 35%.

iSCSI: During preparation a lot of fluctuation, about 25% average, max almost 60%. During the read test about 4%. During the write test about 20% average, max almost 60%.

NFS: During preparation a lot of fluctuation, I think less than 10% average, max 20%. During the read test about 2%. During the write test about 10% average, max 20%.

Actually, the CPU usage during the read test is too small / short / polluted by the writes still going on in the background to be usable at all...

NFS uses less CPU, at least partly because it is slower, so I'm not sure how comparable this result is either.

iSCSI seems a little bit more CPU hungry than SMB, but not by much...

5) Disk usage comparison (this is a bit of guess / estimation work, based on looking at the graphs)

SMB: During preparation pretty consistent at about 700MB/sec. During the read test 1 peak of about 900MB/sec. During the write test about the same as during preparation.

iSCSI: During preparation, although the minimum seems similar to SMB, the peaks are A LOT higher (almost 2GB/sec), yet it still took longer to prepare. During the read test 1 huge peak of 2GB/sec. During the write test about the same as during preparation.

NFS: During preparation many short fluctuations, with maximums similar to SMB (730MB/sec) but much lower minimums (50MB/sec). During the read test 2 peaks of about 200MB/sec. During the write test slightly less fluctuation than during preparation, but a low average (400MB/sec).

As the iSCSI "Disk Usage" seems to reach unrealistically high peaks, I suspect that much of this transfer might be highly compressible metadata, because it is block storage or something.

The results don't seem very comparable...
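One way to make the CPU numbers a bit more comparable is to normalise them by throughput. Using the rough write-test estimates from points 4 and 5 (SMB about 700MB/sec at about 20% CPU, NFS about 400MB/sec at about 10% CPU), the sketch below computes disk throughput per percent of CPU. iSCSI is left out because its write-test disk usage has no single average figure, and disk throughput on a RAID-Z2 pool includes parity, so this is only a rough proxy for what the client sees.

```python
def mb_per_cpu_percent(disk_mb_per_s: float, cpu_percent: float) -> float:
    """Disk throughput delivered per percent of CPU used (higher = cheaper)."""
    if cpu_percent <= 0:
        raise ValueError("CPU usage must be positive")
    return disk_mb_per_s / cpu_percent


if __name__ == "__main__":
    # Rough write-test estimates read off the NetData graphs (points 4 and 5).
    estimates = {"SMB": (700, 20), "NFS": (400, 10)}
    for protocol, (mb_s, cpu) in estimates.items():
        print(f"{protocol}: {mb_per_cpu_percent(mb_s, cpu):.0f} MB/sec per % CPU")
```

This gives about 35 for SMB and 40 for NFS, which is consistent with the suspicion that NFS uses less CPU mostly because it moves less data.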

6) ARC hits comparison (looking only at the read tests)

SMB: Peaks of 40% / 50% during sequential and around 30% during random access

iSCSI: Peaks of 45% / 30% during sequential and around 30% during random access

NFS: Peaks of about 20% during both sequential and random access
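For reference, an ARC hit ratio over an interval is usually computed as hits / (hits + misses) from the deltas of two counter samples. The sketch below assumes the FreeBSD sysctl names `kstat.zfs.misc.arcstats.hits` and `kstat.zfs.misc.arcstats.misses` (check them on your own system); NetData's own formula for its ARC chart may differ.

```python
from __future__ import annotations

import subprocess
import time

ARC_OIDS = ("kstat.zfs.misc.arcstats.hits", "kstat.zfs.misc.arcstats.misses")


def hit_ratio(before: tuple[int, int], after: tuple[int, int]) -> float:
    """ARC hit ratio over an interval, from two (hits, misses) counter samples."""
    hits = after[0] - before[0]
    misses = after[1] - before[1]
    total = hits + misses
    return hits / total if total else 0.0


def read_arc_counters() -> tuple[int, int]:
    """Read cumulative ARC hit/miss counters via sysctl (FreeBSD/FreeNAS only)."""
    out = subprocess.run(["sysctl", "-n", *ARC_OIDS],
                         capture_output=True, text=True, check=True).stdout.split()
    return int(out[0]), int(out[1])


if __name__ == "__main__":
    first = read_arc_counters()
    time.sleep(10)  # sample window, e.g. while a read test is running
    second = read_arc_counters()
    print(f"ARC hit ratio over the last 10s: {hit_ratio(first, second):.0%}")
```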

7) NetData freezes during iSCSI benchmark

I also noticed that NetData refreshed nicely every second when benchmarking SMB and NFS, but repeatedly froze for about 3-5 seconds when benchmarking iSCSI.

I'm not sure whether iSCSI is also causing performance issues outside of the NetData plugin, but it is concerning...
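To put a number on the freezes, one could export the timestamps of the collected samples (how to export them from NetData is left open here) and look for gaps longer than the expected 1-second interval. The sample timestamps below are made up for illustration only.

```python
from __future__ import annotations


def find_gaps(timestamps: list[float], expected_interval: float = 1.0,
              tolerance: float = 0.5) -> list[tuple[float, float]]:
    """Return (start, length) for every gap longer than the expected interval."""
    gaps = []
    for prev, cur in zip(timestamps, timestamps[1:]):
        delta = cur - prev
        if delta > expected_interval + tolerance:
            gaps.append((prev, delta))
    return gaps


if __name__ == "__main__":
    # Made-up timestamps (seconds) containing two ~4s freezes.
    samples = [0, 1, 2, 6, 7, 8, 12, 13]
    for start, length in find_gaps(samples):
        print(f"no data for {length:.0f}s after t={start}s")
```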

As my signature isn't easy to find, below are the specs of my storage server:

OS: FreeNAS 11.3U3.2

Case: Fractal Design Define R6 USB-C

PSU: Fractal Design ION+ 660W Platinum

Mobo: ASRock Rack X470D4U2-2T

NIC: Intel X550-AT2 (onboard)

CPU: AMD Ryzen 5 3600

RAM: 32GB DDR4 ECC (2x Kingston KSM26ED8/16ME)

HBA: LSI SAS 9211-8i

HDDs: 8x WD Ultrastar DC HC510 10TB (RAID-Z2)

Boot disk: Intel Postville X25-M 160GB