I purchased a Samsung PM1733 7.68TB NVMe drive for a build I'm working on.

This morning I installed it and quickly threw Ubuntu 18.04 onto it to do some testing/benchmarking for the whole system.

Read speeds are WELL below the 7000 MB/s that Samsung advertises.

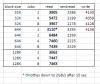

I found this thread about poor performance on the PM1735. The speeds reported there are similar to what I'm getting.

hdparm returns the following:

```
/dev/nvme0n1:
 Timing buffered disk reads: 4284 MB in 3.00 seconds = 1427.57 MB/sec
```

Is there anything I can do, or should I not even bother wasting my time with this drive and just get something from Intel?

I had a similar issue with another Samsung enterprise drive I bought last year, but I assumed it was just a bad drive. I'm regretting buying this one now.

Edit: Oddly enough, the write speed seems normal at around 4.4 GB/s (it's actually higher than the rated 3.8 GB/s!)
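The hdparm figure of 1427.57 MB/sec works out to roughly 20% of the advertised 7000 MB/s. `hdparm -t` times a single stream of sequential reads, and NVMe drives generally need several requests in flight to approach their rated sequential numbers, so a multi-threaded read is one way to cross-check the result (fio is the more common tool for this). Below is a minimal Python sketch under those assumptions: the function name `parallel_read_throughput`, the 1 MiB block size, and the 8-thread default are all illustrative choices, not anything from the drive documentation. It uses `os.pread` from several threads and reports MB/s with 1 MB = 10^6 bytes. Reads go through the page cache, so only an uncached run is meaningful.

```python
import os
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def parallel_read_throughput(path, total_bytes, block_size=1 << 20, workers=8):
    """Hypothetical helper: read the first `total_bytes` of `path` in
    `block_size` chunks using `workers` threads, so several reads are in
    flight at once.

    Returns (bytes_read, megabytes_per_second), where 1 MB = 10**6 bytes.
    Reads go through the page cache, so cached data inflates the result.
    """
    fd = os.open(path, os.O_RDONLY)
    try:
        def read_chunk(offset):
            # os.pread releases the GIL, so the threads can overlap their I/O.
            length = min(block_size, total_bytes - offset)
            return len(os.pread(fd, length, offset))

        offsets = range(0, total_bytes, block_size)
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=workers) as pool:
            bytes_read = sum(pool.map(read_chunk, offsets))
        # Guard against a zero interval for tiny or empty reads.
        elapsed = max(time.perf_counter() - start, 1e-9)
    finally:
        os.close(fd)
    return bytes_read, bytes_read / elapsed / 1e6


if __name__ == "__main__":
    # Hypothetical usage: python3 readbench.py /dev/nvme0n1 4096  (MiB to read)
    device = sys.argv[1]
    mib = int(sys.argv[2]) if len(sys.argv) > 2 else 4096
    n, rate = parallel_read_throughput(device, mib << 20)
    print(f"read {n} bytes at {rate:.0f} MB/s")
```

The size is passed explicitly because `os.path.getsize` reports 0 for a block device such as `/dev/nvme0n1`, and reading the raw device normally requires root. If a run like this also stays far below the rated figure, the hdparm number is less likely to be just a single-stream artifact.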
