So, I went to build a Backblaze Storage Pod 6.0 with a few twists: 256GB of RAM, 2 CPUs, 10G networking, and Windows Server 2016.

Open Source Storage Server: 60 Hard Drives 480TB Storage

As I was bringing this system online, I wanted to compare its performance to a similar Adaptec RAID 6 system, also with 24 drives.

I was horrified by the poor performance.

Here is what I get with Storage Spaces and parity:

```powershell
New-VirtualDisk -FriendlyName "datastore" -StoragePoolFriendlyName "datastore1" -UseMaximumSize -ProvisioningType Fixed -ResiliencySettingName Parity
```

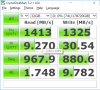

And here is what I get with Storage Spaces without parity (a simple space):

```powershell
New-VirtualDisk -FriendlyName "datastore" -StoragePoolFriendlyName "datastore1" -UseMaximumSize -ProvisioningType Fixed -ResiliencySettingName Simple
```

The drives are Seagate 8TB BarraCuda Pro SATA 6Gb/s 256MB Cache 3.5-Inch Internal Hard Drives (ST8000DM005), which do sequential writes at about 125 MB/s each.

Previous builds with 24 drives, an Adaptec 8805, and an Intel splitter have pushed past 2000 MB/s (when new).
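For a rough sense of scale, here is a back-of-the-envelope ceiling for large sequential writes across 24 of these drives at about 125 MB/s each. It assumes ideal striping with no controller, parity-computation, or bus overhead, so it is an idealized upper bound, not a measurement:

$$
\begin{aligned}
\text{Simple, all 24 drives hold data:}\quad & 24 \times 125\ \text{MB/s} = 3000\ \text{MB/s} \\
\text{Dual parity (RAID 6-like), } 24 - 2 = 22\ \text{data drives:}\quad & 22 \times 125\ \text{MB/s} = 2750\ \text{MB/s}
\end{aligned}
$$

Against that ceiling, 2000 MB/s from a hardware RAID 6 array is a plausible real-world figure, which is the baseline the Storage Spaces results are being compared with.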

How do I make these drives run faster on Windows while keeping some kind of parity/fault tolerance similar to RAID 6? And why is Storage Spaces so darn slow?
