The tl;dr is pretty much in the thread title: I'm trying to find three or four two-port NICs that connect to the mezzanine slot on the nodes of my C6220 II, communicate via Ethernet at no less than 10 Gbit/sec with my existing SFP+ infrastructure, are supported by Debian 11 (specifically, Proxmox), and include (or have available) the appropriate mounting bracket.

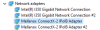

Some background: I'm using three nodes of a Dell PowerEdge C6220 II as a Proxmox cluster, complete with a Ceph pool on SATA SSDs on each node. Each node has a Chelsio T420-CR card, with one port for the main network and the other for a dedicated Ceph network. The SATA SSDs are becoming performance-limiting, so I want to replace them with NVMe SSDs. That means installing a card to connect the NVMe SSDs, which in turn means I need to do something else for the NICs. The obvious solution, IMO, is to use a mezzanine card for this.

Dell listed an Intel dual 10 GbE, SFP+ NIC for this server, and there are plenty to be had on eBay at reasonable prices. I bought a couple that came with brackets, only to realize yesterday morning when I was trying to install one that it was the wrong bracket. And now that I have a better idea of what the right bracket would look like, I don't see any (on eBay or otherwise) that have it.

So then some poking around on eBay led me to these:

Dell CloudEdge C6220 Mellanox ConnectX-2 Dual-Port 40GBs QDR Network Card XXM5F | eBay (www.ebay.com)

Part Number - XXM5F. DELL POWEREDGE C6220 NETWORK ADAPTER w/INTERPOSER.

...and I'm interested--I don't need 40 Gbit/sec speeds, but they're available, they're cheap, they have the right bracket, and I can buy QSFP+ optics from fs.com (not as inexpensively as SFP+ optics, but they're still available). So, three NICs, six optics, a handful of fiber patch cables (which I already have), a suitable 40G switch (the wallet is wincing at this point), and I can patch that into my existing 10G infrastructure somehow, I think. A suitable switch (8+ QSFP+, plus at least one SFP+) sounds like an expensive proposition, though, even used.

So a little more research leads me to the idea of QSFP+-to-SFP+ adapters--plug one into the NIC, plug an SFP+ optic into it, and Bob's your proverbial uncle. But then I read a post questioning whether these NICs would support such an adapter. And I also see some discussion suggesting some Mellanox cards don't support Ethernet, and I can't find any definitive specs on these particular NICs to tell whether they're among them.

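So I'm seeing three ways to get where I need to go (find the right bracket for the Intel NICs, go 40G with a new switch, or use the Mellanox cards with QSFP+-to-SFP+ adapters), but I'm seeing problems (or at least unknowns that I don't know how to address) with each of them. Any help narrowing them down would be appreciated.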