Multi-NVMe (m.2, u.2) adapters that do not require bifurcation

- Thread starter andrewbedia

I had to search a lot to find something that doesn't break the bank at $30-$40+ per NVMe disk... Will this adapter work? https://www.aliexpress.com/i/3256804271282346.html?gatewayAdapt=Msite2Pc4itemAdapt

Ideally:

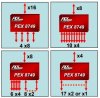

- Use an aforementioned PEX 8748 or 8749 card to break a single PCIe x16, x8, or even M.2 x4 slot out into 8 ports of U.2 in SFF-8643 format

- Get 4 of the above linked el cheapo M.2 M-key adapters

- Use only 4 SATA power connectors, one for each

- Host 8 NVMe disks

???

If this is how it works this is a phenomenal expandability option for consumer platforms.

Edit: Based on the immediately preceding post, it seems like x4 on the host would drop the child devices to x2. Though that's the diagram for the 8749, which I suspect may not tell the whole picture ... the 8748's diagram does show x4 switching!

So, https://www.aliexpress.us/item/2255800570129198.html is the card, seems like a lot of capability that may be afforded by this $100 card.

It's not clear, however, whether SFF-8643 cables (rated 12Gbit) meant for SATA/SAS are suitable for carrying PCIe x4, as that would be (for gen 3) 32Gbit...

"Will this adapter work? https://www.aliexpress.com/i/3256804271282346.html?gatewayAdapt=Msite2Pc4itemAdapt"

Nice find! Yes, it should work. Devising a mounting location/method is "user's choice".

I've been putting my (switch-connected) M.2s into these [Link] (excellent product; note: 2280 only, despite the description). I will buy a couple of the ones you linked also. Compared to 8643-to-8639 cables, 8643-to-8643 cables are less expensive and come in more length options.

"Based on the immediately preceding post, it seems like x4 on host would drop the child devices to x2. Though thats the diagram for 8749, which I suspect may not tell the whole picture ... 8748's diagram does show 4x switching!"

No worries; those diagrams in the Product Briefs are just examples.

The width (<= x16) and gen (<= 3) of the host connection determine the bandwidth available. The switch allocates that bandwidth dynamically, and "fairly", to the targets, on demand.
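To put rough numbers on the width/gen point, here is a minimal sketch that estimates the ceiling of the host link. The per-lane rates and line encodings are the standard PCIe figures (2.5 and 5 GT/s with 8b/10b for gen 1 and 2, 8 GT/s with 128b/130b for gen 3). The helper name is made up for illustration, and packet overhead is ignored, so real throughput will be somewhat lower.

```python
# Approximate one-direction bandwidth of a PCIe host link.
# Only line encoding is accounted for; packet/protocol overhead is ignored.
PCIE_GENS = {
    1: (2.5, 8 / 10),     # GT/s per lane, 8b/10b encoding
    2: (5.0, 8 / 10),     # GT/s per lane, 8b/10b encoding
    3: (8.0, 128 / 130),  # GT/s per lane, 128b/130b encoding
}


def host_link_gbytes_per_s(gen: int, width: int) -> float:
    """Hypothetical helper: approximate link bandwidth in GB/s."""
    rate_gt, efficiency = PCIE_GENS[gen]
    return rate_gt * efficiency * width / 8  # bits -> bytes


if __name__ == "__main__":
    for width in (4, 8, 16):
        print(f"gen3 x{width}: {host_link_gbytes_per_s(3, width):.2f} GB/s")
```

Whatever this ceiling works out to for a given slot is what the switch has available to hand out to the drives behind it.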

"So, https://www.aliexpress.us/item/2255800570129198.html is the card, seems like a lot of capability that may be afforded by this $100 card."

I've had 3 of those for 2+ years. I like them.

"It's not clear whether the SFF 8643 cables (rated 12Gbit) meant for SATA/SAS are suitable for this use case of connecting PCIe x4, however, as that would be (for gen 3) 32Gbit..."

Again, no worries. In SAS3 usage, the signal speed on the cable is 12Gbps per lane (x4 = 48G); whereas, in PCIe3 usage, the signal speed is 8Gbps per lane (x4 = 32G). So the cable's per-lane rating is above what PCIe3 asks of it.
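The arithmetic in that reply can be written out as a small sketch. The constants are the raw per-lane signaling rates quoted above, and the function names are made up for illustration. This only compares ratings; it says nothing about cable length or build quality, which can still matter in practice.

```python
# Raw per-lane signaling rates in Gbit/s, as quoted in the thread.
SAS3_PER_LANE_GBPS = 12   # what SFF-8643 cables sold for SAS3 are rated for
PCIE3_PER_LANE_GBPS = 8   # PCIe gen 3 signals at 8 GT/s per lane
LANES = 4                 # one SFF-8643 connector carries four lanes


def aggregate_gbps(per_lane: float, lanes: int = LANES) -> float:
    """Total raw rate across all lanes of one connector."""
    return per_lane * lanes


def cable_has_headroom(cable_per_lane: float, protocol_per_lane: float) -> bool:
    """True if the cable's per-lane rating meets or exceeds the protocol's rate."""
    return cable_per_lane >= protocol_per_lane


if __name__ == "__main__":
    print("SAS3 x4 raw:", aggregate_gbps(SAS3_PER_LANE_GBPS), "Gbit/s")
    print("PCIe3 x4 raw:", aggregate_gbps(PCIE3_PER_LANE_GBPS), "Gbit/s")
    print("Headroom:", cable_has_headroom(SAS3_PER_LANE_GBPS, PCIE3_PER_LANE_GBPS))
```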

I have a Dell Optiplex 5070 running Proxmox. I thought I'd try a dual PCIe NVMe adapter (ULANSeN M.2 NVMe PCIe Adapter using the ASM2812 chipset, https://www.amazon.com/dp/B08ZHNTWNG?ref=ppx_yo2ov_dt_b_product_details&th=1) in the only x16 slot.

lspci shows:

01:00.0 PCI bridge: ASMedia Technology Inc. Device 2812 (rev 01)

02:00.0 PCI bridge: ASMedia Technology Inc. Device 2812 (rev 01)

02:08.0 PCI bridge: ASMedia Technology Inc. Device 2812 (rev 01)

03:00.0 Non-Volatile memory controller: Realtek Semiconductor Co., Ltd. Device 5765 (rev 01)

04:00.0 Non-Volatile memory controller: Realtek Semiconductor Co., Ltd. Device 5765 (rev 01)

05:00.0 Non-Volatile memory controller: Sandisk Corp WD Blue SN570 NVMe SSD 1TB <----- this is the nvme boot drive on the mb

But fdisk -l only sees one of the two nvme drives on the adapter:

root@pve:~# fdisk -l

Disk /dev/nvme2n1: 931.51 GiB, 1000204886016 bytes, 1953525168 sectors

Disk model: WD Blue SN570 1TB

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 0A3C6157-EA95-4FC5-AFE1-83916573B63A

Device Start End Sectors Size Type

/dev/nvme2n1p1 34 2047 2014 1007K BIOS boot

/dev/nvme2n1p2 2048 2099199 2097152 1G EFI System

/dev/nvme2n1p3 2099200 1953525134 1951425935 930.5G Linux LVM

Disk /dev/mapper/pve-swap: 8 GiB, 8589934592 bytes, 16777216 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/pve-root: 96 GiB, 103079215104 bytes, 201326592 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/nvme1n1: 3.73 TiB, 4096805658624 bytes, 8001573552 sectors

Disk model: JAJP600M4TB

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x01aa5354

Is there any way to get proxmox / debian to see the second drive?

Suspicious that you have nvme1 and nvme2, but no nvme0. Maybe it was there but dropped off the bus? I assume you're expecting to see another 3.73TB drive?
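The check described here (three NVMe controllers on the bus, but only two disks listed) can be sketched as a small script. This is a minimal illustration that parses text shaped like the lspci and fdisk output posted above. The function names are made up, and it assumes controller indices are numbered 0..N-1, which is typical but not guaranteed.

```python
import re

# Trimmed sample taken from the lspci output in the post above.
LSPCI_SAMPLE = "\n".join([
    "01:00.0 PCI bridge: ASMedia Technology Inc. Device 2812 (rev 01)",
    "03:00.0 Non-Volatile memory controller: Realtek Semiconductor Co., Ltd. Device 5765 (rev 01)",
    "04:00.0 Non-Volatile memory controller: Realtek Semiconductor Co., Ltd. Device 5765 (rev 01)",
    "05:00.0 Non-Volatile memory controller: Sandisk Corp WD Blue SN570 NVMe SSD 1TB",
])

# Disk names that fdisk -l reported in the post.
FDISK_DISKS = ["/dev/nvme2n1", "/dev/nvme1n1"]


def nvme_controllers_from_lspci(lspci_text: str) -> list[str]:
    """Return PCI addresses of lines that lspci labels as NVMe controllers."""
    pattern = re.compile(r"^(\S+)\s+Non-Volatile memory controller", re.MULTILINE)
    return pattern.findall(lspci_text)


def missing_nvme_indices(disk_names: list[str], controller_count: int) -> list[int]:
    """Return controller indices in 0..controller_count-1 with no disk listed.

    Assumes indices run 0..N-1 (an assumption, not a guarantee).
    """
    seen = set()
    for name in disk_names:
        match = re.fullmatch(r"/dev/nvme(\d+)n\d+", name)
        if match:
            seen.add(int(match.group(1)))
    return [i for i in range(controller_count) if i not in seen]


if __name__ == "__main__":
    controllers = nvme_controllers_from_lspci(LSPCI_SAMPLE)
    print("NVMe controllers on the bus:", controllers)
    print("Indices with no disk:", missing_nvme_indices(FDISK_DISKS, len(controllers)))
```

With the posted output this flags index 0, which matches the observation that nvme0 is absent. On a live system the kernel log may be worth checking for messages about that controller.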

That was the hope.

I have a Dell Optiplex 5080, so I'll be interested to see if you have any luck resolving your issue, as I'm assuming I would have the same.

Excuse me if I missed the posts that answer my follow-up here. I did attempt to read through the 5 pages.

Are we saying these linked cards allow "desktop" model PCs, which typically do not support bifurcation, to take these PCIe cards, and that the cards somehow provide bifurcation for the M.2 drives on them?

Because when I checked a couple of the provided links, none stated they did that.

The OP mentioned these cards negate the need to step up to HEDT for bifurcation, which is the issue I am faced with: weighing the cost of more PCIe lanes and bifurcation to add HDDs and M.2s.

I want to add multiple M.2 SSDs to my very limited PCIe slots and see each SSD as its own drive, not one large pool/array.

Thank you

Mike

Well, guess why the title of the thread says:

Multi-NVMe (m.2, u.2) adapters that do not require bifurcation

So it's all about adapters with a built-in PCIe switch, which does the "bifurcation" by itself and thus allows attaching more than one NVMe drive (M.2 or U.2) to one x16/x8 PCIe slot, even if that slot doesn't offer lane splitting in the BIOS.

OK, thank you for the clarification.

I read it to mean "the cards were somehow doing on-card bifurcation so you did not have to", and with confirmation bias I read the following to align with that train of thought:

"This is in lieu of moving up to HEDT (Ryzen Threadripper, Haswell-E[P]/Broadwell-E[P]/Skylake-E[P]/etc) and using a card like ASUS Hyper M.2 with bifurcation enabled."

Thus my question for clarification.

So I did read the title and the 5 pages, just misunderstood the comms.

Thank you again. Appreciate the time to clarify.

Bifurcation != switching. These cards switch PCIe much like a network switch does for ethernet. This means you could get full bandwidth from 1 or 2 drives or partial bandwidth across 8 depending on what's available to the card and how much traffic you're driving against the attached drives.
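The "full bandwidth from 1 or 2 drives or partial bandwidth across 8" behavior can be modeled with a max-min fair allocation. This is only a sketch of the idea: the real arbitration inside a given switch chip is not documented in this thread, the function name is made up, and the figures below use raw gen 3 signaling rates (32 Gbit/s per x4 drive, 64 Gbit/s for an x8 uplink) as an assumption.

```python
def fair_share(uplink_gbps: float, demands: list[float]) -> list[float]:
    """Max-min fair split of uplink bandwidth across per-drive demands.

    A simplified model, not the actual arbitration of any specific switch.
    """
    allocation = [0.0] * len(demands)
    remaining = uplink_gbps
    active = [i for i, d in enumerate(demands) if d > 0]
    while active and remaining > 1e-9:
        share = remaining / len(active)
        # Drives whose outstanding demand fits inside an equal share.
        satisfied = [i for i in active if demands[i] - allocation[i] <= share]
        if not satisfied:
            # Nobody can be fully served: split what is left equally.
            for i in active:
                allocation[i] += share
            break
        for i in satisfied:
            remaining -= demands[i] - allocation[i]
            allocation[i] = demands[i]
        active = [i for i in active if i not in satisfied]
    return allocation


if __name__ == "__main__":
    uplink = 64.0  # assumed: gen 3 x8 host link, raw Gbit/s
    print(fair_share(uplink, [32.0, 32.0]))   # two busy drives
    print(fair_share(uplink, [32.0] * 8))     # eight busy drives
```

With bifurcation, by contrast, each drive would be pinned to its own fixed slice of lanes regardless of what the other drives are doing.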

Has anyone encountered a Fujitsu D3262-A12 (x16, 4-port U.2)?

I grabbed one off eBay (there's one left currently) because it looked right and the price seemed reasonable, but then I figured I would check this thread, and the PLX8732 on the card doesn't seem to be mentioned here. I'm mildly concerned that it's an 8-port PLX (as opposed to 5 ports for the 4-port chips I looked up in the original post) and could thus be 4x4 on the host side, which would make the whole thing pointless because it would require bifurcation. I know OEMs do weird stuff sometimes. Did I get one of those useless devices, is it fine, or does nobody know until I plug it in?

"I have a Dell Optiplex 5080 so I'll be interested to see if you have any luck resolving your issue as I'm assuming I would have the same."

No luck. I gave up and went another direction. I could probably have played around with the BIOS settings some more, but I didn't want to take that much time.

Figures, I just purchased this one from Newegg, as it's a bit cheaper than the one posted on AliExpress by the OP. It's being shipped from China, though, so hopefully I receive it.

Linkreal PCIe 3.0 x16 to 4 Port M.2 NVMe SSD Swtich Adapter Card - Newegg.com

I didn't see your reply before purchasing and suppose I could cancel the order, but I have another SFF PC that I know these "no bifurcation" cards work in, so I may move it to that system if needed. The card I currently have in that PC is the following, and it has been working well for my needs.

Amazon.com: RIITOP Dual NVMe PCIe Adapter, M.2 NVMe SSD to PCI-e 3.1 x8/x16 Card Support M.2 (M Key) NVMe SSD 22110/2280/2260/2242/2230 : Electronics

"Yes, it should work."

Thank you for confirming! This is enough for me to feel comfortable ordering a whole bunch of stuff from AliExpress. It's very interesting: now I also have to decide whether I want to just grab a card like this https://www.aliexpress.us/item/3256801158360351.html instead, as that would be one card that can sit on a PCIe x4 hanging off an M.2 slot and itself host up to 4 M.2 NVMe SSDs.

I need to think about it some more to see what my configurations are going to be once I get this stuff. The built-in compactness of the M.2 form factor makes it a much more attractive expansion architecture than SFF-8643 if NVMe drives are the primary use case. SFF-8643 cables at $5 or $10 a pop are a bit more affordable than M.2 risers, but an SFF-8643 to PCIe form factor adapter would still be elusive. I'm inclined to say that 4 M.2 slots on the card may be superior in every way to 8 SFF-8643 ports on the card for form factor reasons, as the most reasonable way to adapt out of 8643 so far seems to be via M.2 risers!

Thanks for this post; I was looking to add a bunch of NVMe drives to my Dell T620.

Gonna get the card at "https://a.aliexpress.com/_mq1kwvg" based on recommendations.

Quick update: I'm ordering an LRNV9547L card instead, since it is a low-profile card and still hosts 4 M.2 slots (just two on each side). It still seems obtainable at Newegg for around $140. Edit: I ordered, but they told me they were actually out of stock and refunded me. I think I am changing my mind again and getting an 8-port breakout board instead. Should get interesting. Edit 2: Nah, the 8-port ones just don't make sense for me.

"quick update is I'm ordering a LRNV9547L card instead, since this is a low profile card and still hosts 4 M.2 slots (just two on each side). Seems still obtainable at Newegg for around $140."

Of course, M.2 is slow, and after a few seconds you mostly end up at SATA speed. Having full PCIe lanes to feed a U3 disk is the best use of them, and that is where you get all the performance.

Well, it's all about expectations really. High-end M.2 devices can absolutely (especially today) saturate a full 4 lanes of PCIe 3.0 at 3GB/s in sustained loads. The really nice thing about a PLX card is that it enhances the flexibility of I/O. It lets you take the x4 slot in a consumer platform motherboard and turn it into 16 more lanes' worth of shared connectivity. If I had a non-bus-bandwidth-sensitive GPU application, cards like this would allow me to run 6 or 10 GPUs off my 5950X system instead of just 3 (or I guess 4 if we also count the x1 slot... it's also possible to get creative and add 2 more via M.2, but then we're booting off SATA only). In my case, without a card like this I would have had to make some tough choices about which stuff I would not be able to host in the system, since I'm expanding the capabilities.

- dual 3090s

- Mellanox 40Gbit card

- HBA for 8x SATA

- boot disk NVMe

There's not enough connectivity to go around without a PCIe switch card. With a PCIe switch card, it's a walk in the park, I can shove all storage through that card connected on 4 lanes, and I can fill the remaining 2 slots on there with more NVMe storage. If I want to trade with the GPU to get 8 lanes of storage connectivity with it instead, that'd be easy to do. (Edit: Actually, adding the second GPU and making them split down to x8x8 does not change anything else in the equation... but anyhow, I got down this path because I wanted to add more NVMe for ZFS slog/special vdev/etc.)

I dunno what a "U3 disk" is, maybe what you're referring to is U.2 enterprise SSDs.
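For the "x4 slot turned into 16 lanes of shared connectivity" case, a quick sketch of the oversubscription involved. It assumes four x4 M.2 slots behind a gen 3 x4 uplink and ignores overhead beyond 128b/130b line encoding; the function names are made up for illustration.

```python
# Approximate gen 3 x4 uplink: 8 GT/s per lane, 128b/130b encoding, 4 lanes.
GEN3_X4_GBYTES_PER_S = 8 * (128 / 130) * 4 / 8  # roughly 3.94 GB/s


def oversubscription_ratio(uplink_lanes: int, downstream_lanes: list[int]) -> float:
    """Total downstream lanes divided by uplink lanes."""
    return sum(downstream_lanes) / uplink_lanes


def worst_case_share(uplink_gbytes_per_s: float, active_drives: int) -> float:
    """Per-drive bandwidth if every active drive is busy at the same time."""
    return uplink_gbytes_per_s / active_drives


if __name__ == "__main__":
    slots = [4, 4, 4, 4]  # assumed: four x4 M.2 slots on the card
    print("Oversubscription:", oversubscription_ratio(4, slots))
    print(f"All four busy: {worst_case_share(GEN3_X4_GBYTES_PER_S, 4):.2f} GB/s each")
    print(f"One busy: {worst_case_share(GEN3_X4_GBYTES_PER_S, 1):.2f} GB/s")
```

A single busy drive still sees the whole uplink, which is why this trade-off suits workloads where the drives are rarely all active at once.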
