Now that I know how to enable qemu-guest-agent in the VM (see my previous post above), the next question is: which virtual disk controller should I use in Proxmox?

When I import an ESXi guest VM (Linux in this case), the disk controller is VMware PVSCSI. That would still work once the VM is imported and converted into Proxmox, but performance is not good with VMware PVSCSI because Proxmox doesn't optimize for it. We want to change the SCSI controller from "VMware PVSCSI" to "VirtIO SCSI".
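For reference, the controller type lives in the `scsihw` line of the VM's config file. Here is a minimal sketch for previewing that change, assuming a config without snapshot sections; `show_with_scsihw` is a hypothetical helper name and it only prints the edited config, it does not write anything. The `qm set` line in the comments is the Proxmox CLI way to actually apply it.

```shell
#!/usr/bin/env bash
# Print a VM config with its scsihw line replaced (or appended if missing).
# show_with_scsihw is a hypothetical helper; the file itself is not modified.
# Assumption: the config has no [snapshot] sections with their own scsihw lines.
show_with_scsihw() {
    local conf="$1" hw="$2"
    if grep -q '^scsihw:' "$conf"; then
        sed "s/^scsihw:.*/scsihw: ${hw}/" "$conf"
    else
        cat "$conf"
        printf 'scsihw: %s\n' "$hw"
    fi
}

# Preview the change for VM 102:
#   show_with_scsihw /etc/pve/qemu-server/102.conf virtio-scsi-pci
# Apply it with the Proxmox CLI rather than editing by hand:
#   qm set 102 --scsihw virtio-scsi-pci
```

Printing instead of editing in place keeps the original config intact until the result has been reviewed.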

* Please refer to JLAURO's post (scroll down in the linked thread) showing his IOPS testing, comparing VirtIO SCSI vs VMware PVSCSI in a Proxmox guest:

Worth swapping vmware pvscsi to virtio scsi?

However, if we import the guest VM into Proxmox and set it up to use VirtIO SCSI, the Linux guest won't boot at all (Windows guests don't seem to have that problem). To get the Linux guest to boot with the VirtIO SCSI controller, I'd have to change the line containing scsi0 to reference sata0 instead, which is weird, right?

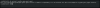

cd /etc/pve/qemu-server/

vi 102.conf #<-- my newly imported guest vm#102 config file

scsi0: miniprox1_thinpool001:vm-102-disk-1,size=100G

change to

sata0: miniprox1_thinpool001:vm-102-disk-1,size=100G

But I definitely don't want to do that. Switching the device from scsi0 to sata0 is a bad workaround: it would allow the guest to boot, but with even worse performance than if we stayed with VMware PVSCSI. So, don't change from scsi0 to sata0. I'll keep that .conf file as it was after import (VMware SCSI and scsi0, etc.):

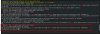

root@miniprox1:/etc/pve/qemu-server# cat 102.conf

agent: 1

bios: ovmf

boot: order=sata0;scsi0

cores: 4

cpu: x86-64-v2-AES

efidisk0: miniprox1_thinpool001:vm-102-disk-0,size=4M

memory: 10240

meta: creation-qemu=9.0.2,ctime=1734709623

name: BRTAT20c

net0: virtio=BC:24:11:?F:1B:11,bridge=vmbr0

net1: virtio=BC:24:11:29:EE:16,bridge=vmbr0

ostype: l26

sata0: none,media=cdrom

scsi0: miniprox1_thinpool001:vm-102-disk-1,size=100G

scsihw: virtio-scsi-pci

smbios1: uuid=4231016d-8b18-8bd2-6e22-39429ef526ec

sockets: 1

vmgenid: 339a0797-63ce-4e7c-9057-52b416c86ad7

root@miniprox1:/etc/pve/qemu-server#
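The combination I'm after in that config (VirtIO SCSI controller plus a disk still attached as scsi0) can be checked with a small sketch like the one below. `check_virtio_scsi` is a hypothetical helper name, and it only looks at the two lines discussed here.

```shell
#!/usr/bin/env bash
# Check that a VM config pairs a VirtIO SCSI controller with a scsi0 disk.
# check_virtio_scsi is a hypothetical helper name, not a Proxmox command.
check_virtio_scsi() {
    local conf="$1"
    # The controller must be one of the VirtIO SCSI variants.
    grep -Eq '^scsihw: virtio-scsi-(pci|single)$' "$conf" || {
        echo "scsihw is not VirtIO SCSI"; return 1; }
    # The disk must still be attached as scsi0 (not moved to sata0).
    grep -q '^scsi0: ' "$conf" || {
        echo "no scsi0 disk found"; return 1; }
    echo "ok"
}

# Example on the Proxmox host:
#   check_virtio_scsi /etc/pve/qemu-server/102.conf
```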

So how do we boot if the guest won't boot with scsi0 and the VirtIO SCSI driver? The solution I've found is to use controller=VirtIO SCSI (shown in the .conf above as scsihw: virtio-scsi-pci) while still using scsi0 disk devices, and then boot into the RESCUE kernel to rebuild/reinstall the main Linux kernel. Not sure why that is needed, but that's my workaround for now:

I boot into the guest VM's Linux rescue kernel by selecting it from the GRUB menu (because the top-listed kernel doesn't boot, I must boot into "rescue" and recreate or reinstall a workable kernel):
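A possible explanation for why the regular kernel fails (an assumption, not something verified on this VM): its initramfs was built on ESXi in dracut's host-only mode, so it may not contain the virtio_scsi module, while the rescue initramfs is generic. Once in the rescue kernel, a sketch like this can check for the module. `has_virtio_scsi` is a hypothetical helper that reads `lsinitrd` output on stdin; it won't detect a driver compiled directly into the kernel.

```shell
#!/usr/bin/env bash
# Reads `lsinitrd` output on stdin and succeeds if a virtio_scsi module
# file (e.g. virtio_scsi.ko or virtio_scsi.ko.xz) is listed.
# has_virtio_scsi is a hypothetical helper name.
has_virtio_scsi() {
    grep -Eq '/virtio_scsi\.ko(\.[a-z0-9]+)?$'
}

# Inside the guest, replace <kver> with the kernel version that fails to boot:
#   lsinitrd /boot/initramfs-<kver>.img | has_virtio_scsi && echo "present"
# If it is missing, rebuilding that initramfs is an alternative to
# reinstalling the kernel:
#   dracut -f --add-drivers "virtio_scsi virtio_pci" /boot/initramfs-<kver>.img <kver>
```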

I wanted to use an el8uek kernel (UEK = Oracle's Unbreakable Enterprise Kernel), so once I boot into the rescue kernel I install the latest UEK kernel:

Then I add that new kernel so that it appears and defaults in the grub menu:
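The install and set-default steps can be sketched roughly as follows, assuming Oracle Linux 8 with `dnf` and `grubby` available. `newest_uek` is a hypothetical helper that picks the highest-versioned UEK image from a list of kernel paths; the real commands are left as comments because they change the system.

```shell
#!/usr/bin/env bash
# Reads kernel image paths on stdin (one per line) and prints the
# highest-versioned one whose name contains "uek".
# newest_uek is a hypothetical helper name; sort -V needs GNU coreutils.
newest_uek() {
    grep 'uek' | sort -V | tail -n 1
}

# Inside the guest, booted into the rescue kernel:
#   dnf install -y kernel-uek
#   grubby --set-default "$(printf '%s\n' /boot/vmlinuz-* | newest_uek)"
#   grubby --default-kernel    # confirm which kernel is now the default
```

Installing a kernel package often makes it the default already, so the `grubby --set-default` step may turn out to be a no-op.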

Then I can boot into the new kernel:

and the qemu-guest-agent service is running too:

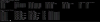

Double-checking that the virtio drivers are loaded and in use:
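A minimal way to do that check from inside the guest is to filter `lsmod` for virtio modules. `list_virtio_modules` below is a hypothetical helper that reads `lsmod` output on stdin.

```shell
#!/usr/bin/env bash
# Reads `lsmod` output on stdin and prints the names of loaded modules
# that start with "virtio", sorted alphabetically.
# list_virtio_modules is a hypothetical helper name.
list_virtio_modules() {
    awk '$1 ~ /^virtio/ { print $1 }' | sort
}

# Inside the guest:
#   lsmod | list_virtio_modules
```

Seeing virtio_scsi in that list, with a nonzero "Used by" count in the raw `lsmod` output, suggests the disk really is going through the VirtIO SCSI controller.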

And because I was curious and wanted to know what those are, here is ChatGPT-4o's response on what the various VirtIO drivers are for:

The VirtIO drivers you see in the output each serve a different purpose, particularly when it comes to optimizing I/O performance and memory management in virtualized environments. Here's a breakdown of what each module represents:

1. virtio_balloon

- Purpose: This module is responsible for dynamic memory management. The balloon driver allows the hypervisor (in this case, Proxmox running KVM/QEMU; Oracle Linux is the guest) to dynamically adjust the memory allocated to a virtual machine. It does this by "inflating" and "deflating" the balloon, which mimics memory usage inside the guest and helps the hypervisor redistribute physical memory between guests.

- Use case: It helps manage overcommitment of memory by adjusting the memory size available to VMs without requiring a reboot. If memory is tight on the host, the balloon driver can release memory from the guest to the host.

2. virtio_net

- Purpose: This module provides the VirtIO driver for network devices. VirtIO networking enables high-performance network I/O for virtual machines (VMs) by providing a paravirtualized network device.

- Use case: It offers significantly better performance than emulated network devices (such as the e1000), making it ideal for high-performance networking in VMs.

3. virtio_console

- Purpose: The VirtIO console driver provides a paravirtualized serial console interface to the guest.

- Use case: This is used to manage serial devices in the guest (such as virtual serial ports for debugging or logging). It also drives the virtio-serial channel that qemu-guest-agent uses to talk to the host. It's typically used for management purposes and does not directly impact normal network or disk I/O.

4. virtio_scsi

- Purpose: This module provides a paravirtualized SCSI driver for block storage devices.

- Use case: It allows high-performance SCSI devices (such as virtual disks) to be accessed by virtual machines. It is used to provide better I/O performance for VM disk devices compared to the emulated IDE or SATA controllers.

5. virtio_pci

- Purpose: This driver enables communication between the guest and VirtIO devices over PCI (Peripheral Component Interconnect). It acts as a bridge between the virtual machine and VirtIO devices, including network, block, and other devices.

- Use case: This is the core module that facilitates communication for other VirtIO drivers (like virtio_net, virtio_blk, etc.) by registering the VirtIO devices on the PCI bus.

6. virtio_pci_legacy_dev

- Purpose: This is a helper module for VirtIO PCI devices that speak the legacy (pre-1.0) VirtIO interface; virtio_pci uses it to access such devices.

- Use case: It's used for backward compatibility with hypervisors or device models that only offer the older interface. It's generally not exercised when devices use the modern interface, but it is loaded for compatibility.

7. virtio_pci_modern_dev

- Purpose: This is the counterpart helper module for devices that implement the modern (VirtIO 1.0 and later) PCI interface. It is not a newer replacement for virtio_pci; virtio_pci uses it alongside the legacy helper (virtio_pci_legacy_dev).

- Use case: It is used to enable communication between the guest and VirtIO devices through the current interface and the features that interface adds.

Summary of Key Concepts:

- Ballooning: The virtio_balloon driver allows dynamic memory management for virtual machines, enabling the host to reclaim memory from the guest when needed.

- Legacy vs. Modern: The virtio_pci_legacy_dev and virtio_pci_modern_dev modules are related to compatibility. The legacy one handles devices using the older (pre-1.0) VirtIO interface, while the modern one handles VirtIO 1.0 and later devices.

- Networking and Block Storage: The virtio_net and virtio_scsi drivers are used for network and storage devices, respectively, and provide efficient I/O by avoiding the overhead of emulated devices.

Clear as mud?

I'll probably spend a lot of my vacation next week importing and converting most of my ESXi VMs into Proxmox. Cool stuff