Fun with an MD1200/MD1220 & SC200/SC220

- Thread starter fohdeesha


I have figured out how to get the SES EncServ bit to present as enabled (at least on an enclosure programmed as an MD1200), so that /sys/class/enclosure gets populated by the Linux kernel.

Short version:

One bit in the MD12_106.bin firmware image that @fohdeesha shared in post #7 needs editing: change the byte at location 0002B7DF from hex 90 to D0, then flash the modified image and make it active.

Long version of how I figured it out:

I'm sharing the details in case others see any issues with the approach, want to try the same method on other firmware versions, or just to document it fully.

As @neonclash mentioned in post #111, the ENCSERV bit is 0 when doing an inquiry of the enclosure. This frustrated me too, because /sys/class/enclosure was not populated even though the sg3-utils sg_inq command lists SES-2 among the supported version descriptors.

I also dumped the raw hex values for the same inquiry (sg_inq -d -H).

I took my motivation from this bug report: "Dell 1220 and /sys/class/enclosure · Issue #31 · ewwhite/zfs-ha" (github.com).

There, the user had recompiled the kernel with a modified ses.c to "force" an override for these enclosures based on a string match for MD1200 or MD1220, and /sys/class/enclosure worked (at least partially) for them.

I also used this document:

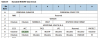

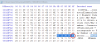

It includes this figure on page 94:

Notice that the ENCSERV bit is bit 6 of byte 6 of the standard INQUIRY data.

In the hex inquiry output, byte 6 has the value 90 (binary 10010000), which makes the ENCSERV bit 0. I decided to try to find where this data is stored in the enclosure and change it to D0 (binary 11010000), so that only the ENCSERV bit would be different.
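To make the bit arithmetic concrete, here is a small Python sketch that rebuilds the raw bytes from the `sg_inq -d -H` hex dump and names the flag bits in byte 6. The bit names assume the SPC-3 standard INQUIRY layout (BQUE, ENCSERV, VS, MULTIP, MCHNGR, ADDR16), which matches the flags sg_inq prints for this enclosure; the helper names are made up for illustration.

```python
import re

# Named flags in standard INQUIRY byte 6 (assumed SPC-3 layout; bits 2 and 1 are obsolete).
BYTE6_FLAGS = {7: "BQUE", 6: "ENCSERV", 5: "VS", 4: "MULTIP", 3: "MCHNGR", 0: "ADDR16"}
ENCSERV_MASK = 1 << 6  # 0x40

HEX_BYTE = re.compile(r"[0-9a-fA-F]{2}")

# `sg_inq -d -H /dev/sg0` output captured before the patch.
BEFORE_DUMP = """\
00 0d 00 05 12 3d 00 90 00 44 45 4c 4c 20 20 20 20 ....=...DELL
10 4d 44 31 32 30 30 20 20 20 20 20 20 20 20 20 20 MD1200
20 31 2e 30 36 33 59 31 50 51 57 31 20 00 00 00 00 1.063Y1PQW1 ....
30 00 00 00 00 00 00 00 00 00 00 00 60 0c 00 03 00 ...........`....
40 03 e0 ..
"""


def parse_hex_dump(text: str) -> bytes:
    """Rebuild raw bytes from hex-dump lines: offset, up to 16 hex bytes, then ASCII."""
    out = bytearray()
    for line in text.splitlines():
        for token in line.split()[1:17]:      # skip the offset column
            if not HEX_BYTE.fullmatch(token):  # the ASCII column has started
                break
            out.append(int(token, 16))
    return bytes(out)


def decode_byte6(value: int) -> dict:
    """Return each named flag of INQUIRY byte 6 as 0 or 1."""
    return {name: (value >> bit) & 1 for bit, name in BYTE6_FLAGS.items()}


def changed_flags(old: int, new: int) -> list:
    """Names of the byte-6 flags that differ between two values."""
    diff = old ^ new
    return [name for bit, name in BYTE6_FLAGS.items() if diff & (1 << bit)]


if __name__ == "__main__":
    inquiry = parse_hex_dump(BEFORE_DUMP)
    print(len(inquiry), hex(inquiry[6]))  # 66 0x90
    print(decode_byte6(inquiry[6]))       # {'BQUE': 1, 'ENCSERV': 0, 'VS': 0, 'MULTIP': 1, 'MCHNGR': 0, 'ADDR16': 0}
    print(changed_flags(0x90, 0xD0))      # ['ENCSERV']
    print(hex(0x90 | ENCSERV_MASK))       # 0xd0
```

The decoded flags line up with what sg_inq printed (BQue=1, MultiP=1, EncServ=0), and OR-ing in 0x40 is exactly the 90 to D0 change.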

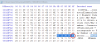

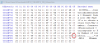

I had previously captured some "_flashdump 0 100000" output to try to map the different regions. I searched it for part of the inquiry hex data, "05 12 3d 00 90", and found it in the line at offset 1402B7D0, which is part of EMM image region 0:

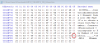

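So I opened the MD12_106.bin file that @fohdeesha made available in post #7 in the HxD editor and searched for the same hex data. I found it in the line at offset 0002B7D0 (region 0 starts at flash address 0x14000000, so flash offset 1402B7D0 corresponds to file offset 0002B7D0). The specific byte I was looking for is at location 0002B7DF: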

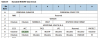

I made a copy of the firmware and changed that location's data from 90 to D0 using the HxD editor.
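The same one-byte edit can be scripted instead of done by hand in HxD. This is a minimal Python sketch, assuming the MD12_106.bin from post #7 and the region layout that _ver reports on this EMM (region 0 at 0x14000000, 0x80000 bytes per region). It refuses to write anything unless the five bytes ending at the target match the "05 12 3d 00 90" pattern found above. The function names and output file name are hypothetical.

```python
from pathlib import Path
from typing import Tuple

# Layout reported by `_ver` on this EMM (assumed to be the same on other units).
FLASH_BASE = 0x14000000   # Image Address of region 0
REGION_SIZE = 0x80000     # RegionSize of each image region

PATCH_OFFSET = 0x0002B7DF                   # file offset of INQUIRY byte 6 in MD12_106.bin
EXPECTED = bytes.fromhex("05 12 3d 00 90")  # INQUIRY bytes 2-6, ending at the target byte
NEW_BYTE = 0xD0                             # 0x90 with ENCSERV (bit 6) set


def flash_to_image_offset(addr: int) -> Tuple[int, int]:
    """Map a `_flashdump` address to (region number, offset inside that region's image)."""
    rel = addr - FLASH_BASE
    if rel < 0:
        raise ValueError(f"{addr:#x} is below the firmware image area")
    return rel // REGION_SIZE, rel % REGION_SIZE


def patch_encserv(src: Path, dst: Path, offset: int = PATCH_OFFSET) -> None:
    """Write a copy of src to dst with the byte at `offset` changed from 0x90 to 0xD0."""
    data = bytearray(src.read_bytes())
    start = offset - (len(EXPECTED) - 1)
    found = bytes(data[start:offset + 1])
    if found != EXPECTED:
        raise ValueError(f"unexpected bytes at {start:#x}: {found.hex(' ')}")
    data[offset] = NEW_BYTE
    dst.write_bytes(data)


if __name__ == "__main__":
    region, offset = flash_to_image_offset(0x1402B7DF)  # address from the _flashdump search
    print(region, hex(offset))                          # 0 0x2b7df
    # Hypothetical file names:
    # patch_encserv(Path("MD12_106.bin"), Path("MD12_106_mod.bin"))
```

Checking the surrounding bytes first means an already-patched or different firmware version fails loudly instead of being silently corrupted.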

Then I flashed the modified firmware with the _boot command, so that all other EMM firmware regions would be wiped and there would be no conflict from multiple 1.06 images with different data sitting in different regions.

After restarting the enclosure, it seems to work: the new inquiry shows "EncServ=1" in the reply,

and byte 6 now has the hex value D0 instead of 90 like before.

And /sys/class/enclosure is now populated and seems to be working for me so far. I can query the device in a slot and set and clear the slot's fault/locate LEDs (which was my main goal). The encled script from amarao/sdled on GitHub ("Control fault/locate indicators in disk slots in enclosures (SES devices)") now works as well.
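With /sys/class/enclosure populated, the slot LEDs can also be driven straight from sysfs. A minimal Python sketch, assuming the kernel enclosure class layout (one directory per enclosure named H:C:T:L, one directory per component with a writable `locate` attribute and, when a disk is present, a `device` link whose `block/` subdirectory names the disk). Slot directory names vary by enclosure, writing requires root, and the helper names are made up.

```python
from pathlib import Path
from typing import Dict, Optional

ENCLOSURE_ROOT = Path("/sys/class/enclosure")


def list_slots(root: Path = ENCLOSURE_ROOT) -> Dict[str, Optional[str]]:
    """Map 'H:C:T:L/<slot>' to the block device name in that slot, or None if empty."""
    slots: Dict[str, Optional[str]] = {}
    for enc in sorted(root.iterdir()):
        for comp in sorted(enc.iterdir()):
            if not (comp / "locate").is_file():  # skip entries that are not components
                continue
            block = comp / "device" / "block"
            disks = sorted(p.name for p in block.iterdir()) if block.is_dir() else []
            slots[f"{enc.name}/{comp.name}"] = disks[0] if disks else None
    return slots


def set_locate(enc: str, slot: str, on: bool, root: Path = ENCLOSURE_ROOT) -> None:
    """Turn a slot's locate LED on or off by writing 1/0 to its locate attribute."""
    (root / enc / slot / "locate").write_text("1" if on else "0")


def locate_disk(disk: str, on: bool = True, root: Path = ENCLOSURE_ROOT) -> bool:
    """Find the slot holding e.g. 'sdf' and set its locate LED; False if not found."""
    for key, name in list_slots(root).items():
        if name == disk:
            enc, slot = key.split("/", 1)
            set_locate(enc, slot, on, root)
            return True
    return False


if __name__ == "__main__" and ENCLOSURE_ROOT.is_dir():
    for key, disk in list_slots().items():
        print(f"{key:30} {disk or '-'}")
```

Usage would be something like `locate_disk("sdf")` to light the LED and `locate_disk("sdf", False)` to clear it, which is the sysfs counterpart of the sg_ses approach.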

One issue I'm still facing: a few of my disks suffer from the problem mentioned in the zfs-ha bug report. The device tree for the disk is present in /sys/class/enclosure/H:C:T:L/Slot X/device when the enclosure first starts. When I unplug a disk, the device tree disappears as expected, but when I hot-plug it again, the "device" tree is not re-populated. Other drives work fine. The problem drives also log some kernel messages when hot-plugged, so I think it is a drive problem rather than an enclosure problem. They are IBM-branded Seagate drives, and I am attempting to format them with SeaTools as mentioned in this other STH thread:

A further disclosure: I only have a basic setup with one enclosure in unified mode and a single EMM installed, so I have not tested multiple enclosures, multipath, split mode, etc. I did the testing on a Proxmox-based system with kernel 5.15.83-1-pve, an LSI 9207-8e HBA and the mpt3sas driver.

I hope some others are able to test this and I hope that it works for them as well.

Command output from the steps above. Before the change, sg_inq reported EncServ=0:

Code:

```
# sg_inq -d /dev/sg0
standard INQUIRY:
PQual=0 Device_type=13 RMB=0 LU_CONG=0 version=0x05 [SPC-3]
[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=1 Resp_data_format=2
SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=1]
EncServ=0 MultiP=1 (VS=0) [MChngr=0] [ACKREQQ=0] Addr16=0
[RelAdr=0] WBus16=0 Sync=0 [Linked=0] [TranDis=0] CmdQue=0
[SPI: Clocking=0x0 QAS=0 IUS=0]
length=66 (0x42) Peripheral device type: enclosure services device
Vendor identification: DELL
Product identification: MD1200
Product revision level: 1.06
Version descriptors:
SAM-3 (no version claimed)
SAS-1.1 (no version claimed)
SPC-3 (no version claimed)
SES-2 (no version claimed)
```

Hex dump of the same inquiry (byte 6 is 90):

Code:

```
# sg_inq -d -H /dev/sg0
00 0d 00 05 12 3d 00 90 00 44 45 4c 4c 20 20 20 20 ....=...DELL
10 4d 44 31 32 30 30 20 20 20 20 20 20 20 20 20 20 MD1200
20 31 2e 30 36 33 59 31 50 51 57 31 20 00 00 00 00 1.063Y1PQW1 ....
30 00 00 00 00 00 00 00 00 00 00 00 60 0c 00 03 00 ...........`....
40 03 e0 ..
```

After flashing the modified image and restarting the enclosure, sg_inq reports EncServ=1:

Code:

```
# sg_inq -d /dev/sg0
standard INQUIRY:
PQual=0 Device_type=13 RMB=0 LU_CONG=0 version=0x05 [SPC-3]
[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=1 Resp_data_format=2
SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=1]
EncServ=1 MultiP=1 (VS=0) [MChngr=0] [ACKREQQ=0] Addr16=0
[RelAdr=0] WBus16=0 Sync=0 [Linked=0] [TranDis=0] CmdQue=0
[SPI: Clocking=0x0 QAS=0 IUS=0]
length=66 (0x42) Peripheral device type: enclosure services device
Vendor identification: DELL
Product identification: MD1200
Product revision level: 1.06
Version descriptors:
SAM-3 (no version claimed)
SAS-1.1 (no version claimed)
SPC-3 (no version claimed)
SES-2 (no version claimed)
```

Hex dump after the change (byte 6 is now d0):

Code:

```
# sg_inq -d -H /dev/sg0
00 0d 00 05 12 3d 00 d0 00 44 45 4c 4c 20 20 20 20 ....=...DELL
10 4d 44 31 32 30 30 20 20 20 20 20 20 20 20 20 20 MD1200
20 31 2e 30 36 33 59 31 50 51 57 31 20 00 00 00 00 1.063Y1PQW1 ....
30 00 00 00 00 00 00 00 00 00 00 00 60 0c 00 03 00 ...........`....
40 03 e0 ..
```

That's a pretty awesome hack, @wavejumper, thanks for sharing!

I was considering my options for this exact problem recently, but didn't think of patching the firmware.

I ended up doing this:

Bash:

```bash
# Locate the /dev/sg? enclosure device
lsscsi -g | grep enclosu

# Blink the locate LED of a particular device (sdf in the case below)
sg_ses --sas-addr="$(cat /sys/block/sdf/device/sas_address)" --set=locate /dev/sg5

# Clear it
sg_ses --sas-addr="$(cat /sys/block/sdf/device/sas_address)" --clear=locate /dev/sg5
```

This worked well enough for me when I needed to locate a particular disk, but your approach is way cooler.

Great work! I'm surprised there was no checksum byte that needed updating after changing the firmware contents, or perhaps there is one but the raw xmodem flashing routine doesn't check it. Did you try flashing with sg3_utils as well?


I thought about a possible checksum issue when editing the firmware, so I only tried the xmodem-based approach with the _boot command and the serial cable, using ExtraPuTTY.

That was mainly because it had been mentioned earlier that the xmodem method seemed to skip any checking, and I wanted a proof of concept of whether it would work or not. I was so excited when it worked that I posted about it before trying the other method.

I will try sg3_utils tomorrow and report back on whether it works. The system and enclosure are tied up at the moment.

The sg_write_buffer method does not seem to work for loading the modified firmware. It returns 0, as if the command completed successfully, but after restarting the enclosure the image isn't there and the enclosure reverts to what was already present.

Before restarting the enclosure, though, the image appears to be there in the _ver output. It's as if the enclosure downloaded it but couldn't actually save or activate it.

Verbose details below.

I started with _boot and an xmodem transfer of the original MD12_106.bin file over the serial cable, then restarted the enclosure. _ver showed what I expected (only region 0 populated and active), and sg_inq showed EncServ=0, also as expected.

Then I wrote the modified firmware with sg_write_buffer from sg3_utils.

Before restarting the enclosure, _ver made it look like the file had been taken and put in region 2, since that region was no longer empty.

However, after restarting the enclosure, the region 2 image was empty again according to _ver, and region 0 was still the active one.

And sg_inq still showed EncServ=0, because there was still only one image to boot from (the original, unmodified version).

If I reverse the procedure:

1. start with the modified firmware via _boot and an xmodem transfer into region 0 (regions 1 and 2 are empty)

2. sg_write_buffer the unmodified firmware

3. restart the enclosure

then the unmodified firmware is saved in region 2 and is activated after the restart, and EncServ goes from 1 to 0.

So I guess this single-bit firmware hack will only work with xmodem-based transfers, unless someone knows which checksum methods are involved and can help me change the appropriate locations in the modified firmware file.
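For anyone who wants to chase the checksum question, a first step is confirming exactly which bytes differ between the stock and modified images. A minimal Python sketch; the script name in the usage comment is hypothetical, and nothing here knows the EMM's actual checksum scheme (if there is one), which is still unknown.

```python
import sys
from pathlib import Path
from typing import List, Tuple


def diff_images(a: bytes, b: bytes) -> List[Tuple[int, int, int]]:
    """Return (offset, byte_in_a, byte_in_b) for every offset where the two images differ."""
    if len(a) != len(b):
        raise ValueError(f"size mismatch: {len(a)} vs {len(b)} bytes")
    return [(i, x, y) for i, (x, y) in enumerate(zip(a, b)) if x != y]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical script name): python3 fwdiff.py MD12_106.bin MD12_106_JUmod.bin
    original, modified = (Path(p).read_bytes() for p in sys.argv[1:])
    for offset, old, new in diff_images(original, modified):
        print(f"{offset:08X}: {old:02X} -> {new:02X}")
```

If only the ENCSERV byte was touched, this should list a single line at 0002B7DF (90 -> D0); any checksum that sg_write_buffer validates would then have to be stored somewhere that line does not cover.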

_ver after the _boot and xmodem transfer of the original MD12_106.bin (only region 0 is populated and active):

Code:

```
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
BlueDress.106.000 >_ver
********************* Bench Build **********************
Build Owner : GitHub
Build Number : 000
Build Version : 106
Build Date : Mon Aug 24 12:28:59 2015
FPGA Revision : A9
Board Revision : 8
CPLD Revision : 7
**************Firmware Image Information****************
Active--->Image Region 0 (Always Boot)
Revision : 0.0.63.0
Dell Version : 1.06
Total Image Size : 000414fc [267516]
Fast Boot : Yes
Image Address : 0x14000000
RegionOffset : 0x00000000
RegionSize : 0x00080000
RegionType : 0x00000000
Image Region 1
Revision : 255.255.255.255
Dell Version : ▒▒▒▒
Total Image Size : ffffffff [-1]
Fast Boot : No
Image Address : 0x14080000
RegionOffset : 0x00080000
RegionSize : 0x00080000
RegionType : 0x00000001
Image Region 2
Revision : 255.255.255.255
Dell Version : ▒▒▒▒
Total Image Size : ffffffff [-1]
Fast Boot : No
Image Address : 0x14100000
RegionOffset : 0x00100000
RegionSize : 0x00080000
RegionType : 0x00000002
********************************************************
```

Then writing the modified firmware with sg3_utils:

Code:

```
# lsscsi -g |grep MD
[10:0:11:0] enclosu DELL MD1200 1.06 - /dev/sg8
# sg_write_buffer -b 4k -m dmc_offs_save -I MD12_106_JUmod.bin /dev/sg8
# echo $?
0
```

_ver before restarting the enclosure (region 2 is no longer empty):

Code:

```
BlueDress.106.000 >_ver
********************* Bench Build **********************
Build Owner : GitHub
Build Number : 000
Build Version : 106
Build Date : Mon Aug 24 12:28:59 2015
FPGA Revision : A9
Board Revision : 8
CPLD Revision : 7
**************Firmware Image Information****************
Active--->Image Region 0 (Always Boot)
Revision : 0.0.63.0
Dell Version : 1.06
Total Image Size : 000414fc [267516]
Fast Boot : Yes
Image Address : 0x14000000
RegionOffset : 0x00000000
RegionSize : 0x00080000
RegionType : 0x00000000
Image Region 1
Revision : 255.255.255.255
Dell Version : ▒▒▒▒
Total Image Size : ffffffff [-1]
Fast Boot : No
Image Address : 0x14080000
RegionOffset : 0x00080000
RegionSize : 0x00080000
RegionType : 0x00000001
Image Region 2
Revision : 0.0.63.0
Dell Version : 1.06
Total Image Size : 000414fc [267516]
Fast Boot : Yes
Image Address : 0x14100000
RegionOffset : 0x00100000
RegionSize : 0x00080000
RegionType : 0x00000002
********************************************************
```

_ver after restarting the enclosure (region 2 is empty again and region 0 is still active):

Code:

```
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
BlueDress.106.000 >_ver
********************* Bench Build **********************
Build Owner : GitHub
Build Number : 000
Build Version : 106
Build Date : Mon Aug 24 12:28:59 2015
FPGA Revision : A9
Board Revision : 8
CPLD Revision : 7
**************Firmware Image Information****************
Active--->Image Region 0 (Always Boot)
Revision : 0.0.63.0
Dell Version : 1.06
Total Image Size : 000414fc [267516]
Fast Boot : Yes
Image Address : 0x14000000
RegionOffset : 0x00000000
RegionSize : 0x00080000
RegionType : 0x00000000
Image Region 1
Revision : 255.255.255.255
Dell Version : ▒▒▒▒
Total Image Size : ffffffff [-1]
Fast Boot : No
Image Address : 0x14080000
RegionOffset : 0x00080000
RegionSize : 0x00080000
RegionType : 0x00000001
Image Region 2
Revision : 255.255.255.255
Dell Version : ▒▒▒▒
Total Image Size : ffffffff [-1]
Fast Boot : No
Image Address : 0x14100000
RegionOffset : 0x00100000
RegionSize : 0x00080000
RegionType : 0x00000002
********************************************************
```

As a follow-up to the issue I was facing with the "device" symlink not being created and the kernel messages, here are two things I have figured out so far that I wanted to share.

The drives with problems are specifically IBM-branded Seagate ST10000NM0226 10TB SAS self-encrypting drives with firmware ECG6. They are 4Kn drives and were formatted with type 2 protection, per smartctl:

Code:

```
=== START OF INFORMATION SECTION ===
Vendor: IBM-ESXS
Product: ST10000NM0226 E
Revision: ECG6
Compliance: SPC-4
User Capacity: 9,931,038,130,176 bytes [9.93 TB]
Logical block size: 4096 bytes
Formatted with type 2 protection
8 bytes of protection information per logical block
LU is fully provisioned
Rotation Rate: 7200 rpm
Form Factor: 3.5 inches
Logical Unit id: 0xXXXXXXXXXXXXXXXX
Serial number: XXXXXXXXXXXXXXXXXXXX
Device type: disk
Transport protocol: SAS (SPL-3)
```

Example kernel messages from one of these drives:

Code:

```
Feb 2 15:44:24 asrock kernel: [ 2.525106] sd 4:0:0:0: [sda] tag#2924 FAILED Result: hostbyte=DID_ABORT driverbyte=DRIVER_OK cmd_age=0s
Feb 2 15:44:24 asrock kernel: [ 2.525110] sd 4:0:0:0: [sda] tag#2924 Sense Key : Illegal Request [current]
Feb 2 15:44:24 asrock kernel: [ 2.525112] sd 4:0:0:0: [sda] tag#2924 Add. Sense: Logical block reference tag check failed
Feb 2 15:44:24 asrock kernel: [ 2.525114] sd 4:0:0:0: [sda] tag#2924 CDB: Read(32)
Feb 2 15:44:24 asrock kernel: [ 2.525116] sd 4:0:0:0: [sda] tag#2924 CDB[00]: 7f 00 00 00 00 00 00 18 00 09 20 00 00 00 00 00
Feb 2 15:44:24 asrock kernel: [ 2.525118] sd 4:0:0:0: [sda] tag#2924 CDB[10]: 90 83 b7 f0 90 83 b7 f0 00 00 00 00 00 00 00 01
```

I ended up removing the type 2 protection with sg_format to try to get past the issue I was having. I know this disables the SED feature, but for now I am OK with that. This change cleaned up the kernel messages I saw, but it did not resolve the device symlink creation issue.
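To see what the failing command in that log was asking for, the READ(32) CDB can be decoded by hand. A minimal Python sketch, assuming the SBC-3 READ(32) layout (opcode 7Fh, service action 0009h in bytes 8-9, RDPROTECT in the top bits of byte 10, LBA in bytes 12-19, expected initial reference tag in bytes 20-23); the function name is made up. Decoding the logged CDB gives RDPROTECT=1, a one-block read, and an expected reference tag equal to the low 32 bits of the LBA (0x9083B7F0), the check the drive rejected with "Logical block reference tag check failed".

```python
from typing import Dict

# CDB[00] and CDB[10] lines from the kernel log above, joined into one 32-byte CDB.
LOGGED_CDB = bytes.fromhex(
    "7f 00 00 00 00 00 00 18 00 09 20 00 00 00 00 00 "
    "90 83 b7 f0 90 83 b7 f0 00 00 00 00 00 00 00 01"
)


def decode_read32(cdb: bytes) -> Dict[str, int]:
    """Decode the fields of a READ(32) CDB (variable-length opcode 7Fh, service action 0009h)."""
    if len(cdb) != 32 or cdb[0] != 0x7F or int.from_bytes(cdb[8:10], "big") != 0x0009:
        raise ValueError("not a READ(32) CDB")
    return {
        "rdprotect": cdb[10] >> 5,                              # bits 7-5 of byte 10
        "lba": int.from_bytes(cdb[12:20], "big"),
        "expected_ref_tag": int.from_bytes(cdb[20:24], "big"),  # compared with the block's PI ref tag
        "expected_app_tag": int.from_bytes(cdb[24:26], "big"),
        "app_tag_mask": int.from_bytes(cdb[26:28], "big"),
        "transfer_length": int.from_bytes(cdb[28:32], "big"),   # in logical blocks
    }


if __name__ == "__main__":
    for name, value in decode_read32(LOGGED_CDB).items():
        print(f"{name:18} {value:#x}")
```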

Then I did further digging with udevadm monitoring and kernel tracing to figure out why the device symlink didn't get created in sysfs. I noticed that these drives take up to 17-18 seconds to come online, and, compared with drives that came online in 5 seconds or so and worked properly, the ses.c enclosure query was happening too soon.

I added printk statements to drivers/scsi/ses.c and found where the failure occurred. Then I added a delay before the enclosure is queried, to give the drive more time to come online. It worked: these drives now also seem to work fine with hotplug, and the device symlink is created on hotplug as well. So there must be a race or some other timing-related issue with drives that take a long time to come online. I have four of them and all behave similarly.

The delay I added is before the enclosure query in drivers/scsi/ses.c: inside the ses_match_to_enclosure function, right before the call to ses_enclosure_data_process.

Original code:

Code:

```c
if (refresh)
	ses_enclosure_data_process(edev, edev_sdev, 0);
```

Modified code:

Code:

```c
/*
 * Proof of concept only: wait 20 s so slow drives can come online
 * before the enclosure is queried. mdelay() busy-waits the CPU;
 * msleep() would sleep instead of spinning.
 */
mdelay(20000);

if (refresh)
	ses_enclosure_data_process(edev, edev_sdev, 0);
```

Plus the include it needs at the top of ses.c:

Code:

```c
#include <linux/delay.h>	/* mdelay() */
```

This was all done on Proxmox 7.3 using a git clone of the Proxmox kernel from a few days ago (pve-kernel_5.19.17-2_amd64). I have not done the same with an unmodified kernel.org kernel, but I will do so on a fresh Debian install. Originally, I did try several distributions and kernel versions on several systems to make sure it wasn't related to one system, kernel or distribution.

Now I need to figure out how to file a SCSI driver kernel bug to try to get this addressed. I'm sure a hard-coded delay is not appropriate for the real kernel, but I think it proves that the enclosure is not really at fault; it was just a proof of concept for me, and I wanted to share it.

If anyone knows the workings of scsi/ses.c and can help me find a better workaround than a hard-coded delay, it would be appreciated.

Hey all, I was wondering if anyone could give some details on upgrading the firmware of the SC220s? I got one from work today, but it's still running version 1.00 and I wanted to upgrade it to 1.03. I used ExtraPuTTY to get into it and used _download 2 0 y to send the file from post #18, but as soon as I send it, the screen just starts spamming 3s and I see an 'error not found!'.

I don't have access to a Dell server and hoped to just connect this up to my TrueNAS server to expand my storage.

Was moving some drives from one SC200 to another and could not get the SATA drives to show back up in the OS. Eventually realized it was because the single EMM was in the bottom slot. Once I moved it to the top slot all the SATA drives started working.

Thanks for the tips. Love the _shutup command; you can tell it must have been a great dev team!

I keep getting the following in the terminal. It's a little concerning, since it seems to be rebooting all the time, but the disks don't drop. I can see I'm already on the latest firmware. The "Devil Startup Complete" also seems linked to the fans ramping back up.

Code:

```
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
BlueDress.106.000 >
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
BlueDress.106.000 >
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
BlueDress.106.000 >
```

Maybe the older Xyratex manual helps.

Regarding "I ended up removing the type 2 protection with sg_format to try and get past the issue I was having", try this:

Code:

```
sg_format -v --format --fmtpinfo=0 --pfu=0 --size=4096 /dev/da$
```

It should kill problem #1 ("Formatted with type 2 protection") and #2 ("8 bytes of protection information per logical block"). Page 2 in the attachment has info.

So this is fun... I bought a Dell EqualLogic PS6100 without controllers, bought two "03DJRJ Dell PowerVault MD1200 MD1220 6Gbps SAS 512MB Cache Controller" modules and slapped them in, connected a Dell password reset cable to a USB-RS232 adapter, and on reboot I'm greeted with:

```
**** Devil Startup Complete (Based on vendor drop 00.00.63.00) ****
GreenDress.101.0 >
```

But it just hangs here and I can't send any commands.

Uhh, so... I may have a weird development firmware build on my controllers. Running _ver shows the Build Owner not as GitHub but as a person's name, who is a principal dev at Dell. The build date is 2012, but the Dell Version is 1.05 and the Revision is 0.0.63.0.

Just to add some more info, here's the output of my "fru_read 2" on Pastebin.com ("00000000: 01 0a 19 01 00 00 20 bb 01 09 00 81 88 82 83 64 ...............d000").

Note that it says "MD1240-EQL", which is funny.

Hi,

I'm new to this stuff, and I want to convert an MD3220 controller to accept all drives. Here are my problems:

1) I have two MD3220 controllers that only get an IP address; I can't see them in MDSM. I don't know if that's because they're in an MD3220i, since the original MDxxxxi controllers (latest firmware) work perfectly in the same shelf.

2) Can I still do something about that? Is there any command to reset an MD3220 to work in an MD3220i?

3) If not, can I still use a modified firmware, pushed with the correct tool, so the MD3220 accepts the shelf and SATA drives?

4) Can you point me to where I can download all the tools and firmware for doing this?

All the info here is great, but my knowledge right now is monkey see, monkey do.

Thanks for your future help.

Thank you very much for this tip. I use a cron job and my MD1200 is finally quieter!

For information, if you are unable to get input through the serial port of your server (an R710, for example), it is because you must deactivate the serial port in your BIOS. By default the BIOS enables the serial port for remote management with Dell tools, so the port is "blocked" for Dell monitoring use. Disabling it in the BIOS frees it up; your OS then has full access to the serial port, and minicom, cu, etc. will get working input.

This trick for the fans gave me another idea: maybe it is possible to automatically turn the MD1200 off and on depending on the state of the host (UPS event, manual shutdown, etc.) with a script run at boot and at shutdown?

For the shutdown, just run the "_shutdown 2" command and the MD1200 will shut down.

However, how do you restart the MD1200 afterwards? I don't see any command to do this, so is manual intervention required?

In that case, a networked power strip that allows full control via script might be the better route...

Has anyone here tried this remote stop/start trick?
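For the shutdown half, a host-side hook could write "_shutdown 2" to the EMM serial console. This is a minimal sketch using only the Python standard library on Linux/BSD, under several assumptions: the console runs at 38400 8N1, commands are terminated with a carriage return, the command needs no confirmation, and the port defaults to a hypothetical /dev/ttyS0. It does not address restarting the shelf, and it should only run after the filesystems on the shelf are unmounted.

```python
import os
import sys
import termios

BAUD = termios.B38400  # assumption: EMM console speed


def frame(command: str) -> bytes:
    """Encode a console command, assuming a carriage-return terminator."""
    return command.encode("ascii") + b"\r"


def configure_raw(fd: int) -> None:
    """Put the port into raw 8N1 mode at BAUD (no echo, no line processing)."""
    attrs = termios.tcgetattr(fd)  # [iflag, oflag, cflag, lflag, ispeed, ospeed, cc]
    attrs[0] = 0                                              # no input translation
    attrs[1] = 0                                              # raw output
    attrs[2] = termios.CS8 | termios.CREAD | termios.CLOCAL   # 8 bits, no parity, 1 stop
    attrs[3] = 0                                              # non-canonical, no echo
    attrs[4] = BAUD
    attrs[5] = BAUD
    termios.tcsetattr(fd, termios.TCSANOW, attrs)


def send(port_path: str, command: str) -> None:
    """Open the serial port, send one command, and wait until it is transmitted."""
    fd = os.open(port_path, os.O_RDWR | os.O_NOCTTY)
    try:
        configure_raw(fd)
        os.write(fd, frame(command))
        termios.tcdrain(fd)
    finally:
        os.close(fd)


if __name__ == "__main__":
    port = sys.argv[1] if len(sys.argv) > 1 else "/dev/ttyS0"  # hypothetical default
    send(port, "_shutdown 2")
```

Hooking it into whatever runs late in the host's shutdown sequence (a systemd unit, a UPS daemon's shutdown command, etc.) is left to the local setup.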

Adding this in case others run into the same problem.

On an MD1200 connected to an R730, I ran into the same problem where I couldn't get a connection through the serial port on the R730. I'm sure it's user error, but using a USB-to-RS232 serial adapter that I had lying around, I got everything working fine. In Proxmox I just passed the USB device ID through to my TrueNAS VM and it worked. I originally tried passing the serial port through to my TrueNAS VM, but for whatever reason that wasn't working.

Not a cable problem; just disable the serial port in the BIOS to free it up and give control of the port to the OS.

@fohdeesha, I bought this cable, but unfortunately I have exactly the same problem. I see spew on the terminal during power on and then nothing else happens. I cannot send any characters.

My MD1200 also does not work with SATA drives. I put a single SATA drive in the shelf but nothing happens. These shelves are supposed to work with SATA drives, so I don't quite understand why I am having so much trouble with them. Is it because of old firmware? Mine says

BlueDress.105.100

I remember that I can update the firmware over a regular SAS connection. Should I give that a try?
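Judging by the prompts posted in this thread (BlueDress.105.100, BlueDress.106.000, and GreenDress.101.0 on the EqualLogic unit), the console prompt appears to carry the firmware revision. This is a minimal sketch, assuming that `<Name>.<major>.<minor> >` pattern holds, that parses a prompt and compares it against a target revision; treating 106 as current is based only on another user in this thread reporting BlueDress.106.000 as the latest.

```python
import re

# Assumed prompt shape, inferred from the prompts quoted in this thread.
PROMPT = re.compile(r"^(?P<name>[A-Za-z]+)\.(?P<major>\d+)\.(?P<minor>\d+)\s*>?$")


def parse_prompt(prompt: str):
    """Split a prompt such as 'BlueDress.105.100 >' into (name, major, minor)."""
    match = PROMPT.match(prompt.strip())
    if not match:
        raise ValueError(f"unrecognised prompt: {prompt!r}")
    return match["name"], int(match["major"]), int(match["minor"])


def is_older_than(prompt: str, major: int, minor: int = 0) -> bool:
    """True if the prompt's revision sorts before major.minor."""
    _, have_major, have_minor = parse_prompt(prompt)
    return (have_major, have_minor) < (major, minor)


if __name__ == "__main__":
    print(is_older_than("BlueDress.105.100 >", 106))
```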

Hi, I had two SC220 units with drives in them and I wanted to convert them into SAN controller units, so I ordered four SC4020 FC 16Gb controllers (2 type A and 2 type B, just in case, because I did not know the difference between type A and B). Before ordering I checked pictures of the backplanes and it seemed fine: I can insert the FC controllers instead of the SAS modules, and it powers on... but it does not work. What I have noticed is that in the SC220 enclosures the backplane is the same for the top and bottom controller, but in pictures of the SC4020 the top and bottom are inverted. That makes me suspect that the enclosures for controller and expansion are different? I used to do this conversion with many other storage systems (like HP 3PAR, Lenovo V5000/V7000, NetApp) where the enclosure is the same for all versions; you just swap the SAN controllers for the IOM expansions and it works fine.

Can someone confirm that a Compellent SC220 can be converted by swapping the SAS IO module for an SC controller?

Thanks in advance.

The SC200/SC220 are rebranded PowerVault MD1200/MD1220s, which were a Dell designed and built product. The only other products that look like they use the same enclosure/backplane part number are some of the EqualLogic products, like the PS4100. I've never tried this swap, and if it does work, then you are limited by the licensing and software of the EQL product. This design very much follows the KISS principle, which I am a huge fan of for its simplicity and for being very reliable.

The SC4020 (Type A signifies it's an SC4020 controller; Type B is actually an SCv20x0 controller, with less RAM, a lower-spec IO card and more software limitations on the hardware) is built by Xyratex (now owned by Seagate) for Dell. I'm actually a little surprised you even got it to fit and power on; I never thought they were pin compatible. If you do get a compatible enclosure, the same concept applies: you are then limited by the software and licensing of Storage Center. The Xyratex design is more complicated and you get way more logging and sensor data, but because of this I find it a bit more finicky.

Hope that helps.
