Doing some benchmarking on reducing latency in an AIO (all-in-one) setup: ESXi 6.5 to an OmniOS ZFS-backed NFS datastore.

The hardware is:

- Supermicro X10DRi-T

- 2 x Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40GHz

- 128 GB DDR4 RAM (@ 1866 MHz - CPU-limited from 2133 MHz)

- 3 x 9211-8i in HBA IT mode w/ P20.0.0.7 firmware

- 1 x AOC-SLG3-2E4 (non R) HBA card to connect NVMe Optane

- Updated to Version 3.1

- Bifurcation enabled and setup in Slot 5 to 4x4

- Slot 5 EFI Oprom -> Legacy

- Power Settings

  - Custom -> C State Control

    - C6 (Retention) State, CPU C6 Report Enable, C1E Enable

  - Custom -> P State Control

    - EIST (P-States) Enable, Turbo Mode Enable, P-state Coord – HW_ALL, Boost perf mode Max performance

  - Custom -> T State Control

    - Enable

- Custom -> C State Control

- USB 3.0 Support -> Enabled (to support USB 3.0 key booting)

- 4 x CPUs

- 59392 MB RAM (All reserved)

- 3 x 9211-8i in passthrough

- 4 x Intel S4600 – 960GB

- 4 x WD Gold 6TB

- 1 x Intel S3700 - 200GB (slog)

- 1 x Intel Optane P4800X - 375GB (slog)

- OmniOS VM with VMXNET3 adapter attached to Port group "storagenet"

- ESXi VMkernel NIC vmk2 for NFS storage attached to own Port Group "storagevmk"

- Both portgroups "storagenet" and "storagevmk" attached to separate vSwitch2

- Not attached to any Physical NIC

- vSwitch2 set to 9000MTU

- OmniOS VM VMXNET3 adapter set to 9000MTU

- omnios-r151028

- Intel Optane P4800X - 375GB (slog) - added as 30 GB VMDK on local Optane backed datastore.

- NUMA affinity set to numa.nodeAffinity=1

- To match Optane in Slot5 (CPU2) of Dual CPU MB

- Sequential results were about 300 MB/s slower if ESXi flipped the OmniOS VM onto NUMA node 0 (CPU1)
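As a sketch, the affinity is a single advanced configuration parameter on the OmniOS VM. The .vmx form below assumes node 1 maps to CPU2, as it does on this board; on other hardware the node numbering needs checking first.

```text
numa.nodeAffinity = "1"
```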

- /etc/system modified:

Code:

* Thanks Gea!

* napp-it_tuning_begin:

* enable sata hotplug

set sata:sata_auto_online=1

* set disk timeout 15s (default 60s=0x3c)

set sd:sd_io_time=0xF

* increase NFS number of threads

set nfs:nfs3_max_threads=64

set nfs:nfs4_max_threads=64

* increase NFS read ahead count

set nfs:nfs3_nra=32

set nfs:nfs4_nra=32

* increase NFS maximum transfer size

set nfs3:max_transfer_size=1048576

set nfs4:max_transfer_size=1048576

* increase NFS logical block size

set nfs:nfs3_bsize=1048576

set nfs:nfs4_bsize=1048576

* tuning_end:

- sd.conf modified:

Code:

# DISK tuning

# Set correct physical-block-size and non-volatile settings for SSDs

# S3500 - 480 GB

# S3700 - 100 + 200 + 400 GB

# S4600 - 480 + 960 GB

# Set fake physical-block-size for WD RE drives to set pools to ashift12 (4k) so drives are replaceable by larger disks.

# WD RE4

# WD RE gold

sd-config-list=

"ATA INTEL SSDSC2BB48", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDSC2BA10", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDSC2BA20", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDSC2BA40", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDSC2KG48", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDSC2KG96", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA INTEL SSDPE21K37", "physical-block-size:4096, cache-nonvolatile:true, throttle-max:32, disksort:false",

"ATA WDC WD2000FYYZ-0", "physical-block-size:4096",

"ATA WDC WD2005FBYZ-0", "physical-block-size:4096";

Now onto the benchmarks:

Testing out latency reduction from vSphere 6.5 best practices:

https://www.vmware.com/content/dam/...performance/Perf_Best_Practices_vSphere65.pdf

p.43

OmniOS VM ESXi advanced param ethernet2.coalescingScheme:

(for "storagenet" VMXNet3 adapter ethernet2)
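For reference, a sketch of how this looks as a line in the OmniOS VM's .vmx (the same key/value pair can be added as an advanced configuration parameter in the client). The adapter index `ethernet2` is specific to this VM. The value spelling is an assumption: as far as I recall the best practices guide gives it as `disabled`, while the labels in this post write `disable`, so check it against p.43 before relying on it.

```text
ethernet2.coalescingScheme = "disabled"
```

With the parameter absent, coalescing stays enabled, which is the default.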

Default setup (param not present - coalescing enabled by default)

4 x WD GLD in 2 stripe x 2 mirror - with S3700 slog:

ethernet2.coalescingScheme : disable

4 x WD GLD in 2 stripe x 2 mirror - with P4800X Optane slog:

ethernet2.coalescingScheme - not present

ethernet2.coalescingScheme : disable

OmniOS VM set to Latency Sensitivity - High (from Normal)

- Side effect: all CPU cores assigned to the VM are reserved exclusively for it.
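As a sketch, the equivalent .vmx entry should be the following. The key name is from memory rather than copied from this setup, so treat it as an assumption and verify it against the VM's own .vmx after changing the setting in the client.

```text
sched.cpu.latencySensitivity = "high"
```

The High setting also expects the VM's memory to be fully reserved, which is already the case here (59392 MB, all reserved).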

S3700 200GB Slog

P4800X 375GB Optane Slog (as 30GB VMware VMDK - Thick Eager Zeroed)

- 4 CPU cores

Testing CPU Cores assigned to OmniOS with Latency Sensitivity High:

P4800X 375GB Optane Slog (as 30GB VMware VMDK - Thick Eager Zeroed)

- 2 CPU cores

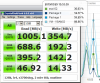

- 4 Cores:

- 5 Cores:

- 6 CPU cores

Finally - Optane VMDK to native ESXi6 Datastore:

P4800X 375GB Optane Slog (30GB VMware VMDK - to NON-NFS local Optane ESXi6 Datastore)

Profit?
