I am new to the world of fiber networks, so I thought I'd dip my toes in cautiously with a small investment before diving into switches.

My goal: directly connect two machines via 10G fiber and get full bandwidth.

In short, I have partially succeeded, but the result is a bit lackluster and a little disappointing. So perhaps I just need my expectations checked, or perhaps something is wrong (i.e. I should have spent more money).

Machine A: Windows 11 latest, Intel i9-9900K, 32 GB RAM, ASUS ROG Maximus Hero XI, card installed in the bottom x16 slot (chipset Z390). NVMe read/write are 2 GB/s or better.

Machine B: Windows 11 latest, Intel i9-13900K, 64 GB RAM, ASUS ROG Maximus Z790 Hero, card installed in the bottom x16 slot (chipset Z790). NVMe read/write are 5 GB/s or better.

The two NICs: NICGIGA Intel 82599 (X520-DA1): https://www.amazon.ca/dp/B0CM37WWXF?ref=ppx_yo2ov_dt_b_product_details&th=1

The two SFP+ modules (Intel coding): https://www.amazon.ca/dp/B01CN82LP8?ref=ppx_yo2ov_dt_b_product_details&th=1

The Cable: 10m https://www.amazon.ca/dp/B01C5HHFVC?psc=1&ref=ppx_yo2ov_dt_b_product_details

Static IPs on both ends, in a subnet that can't be confused with my existing legacy NICs.
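For anyone wanting to sanity-check a similar addressing plan, here is a minimal sketch using Python's standard `ipaddress` module. The addresses are hypothetical placeholders (I have not listed my real ones here); the point is only that both fiber ends sit in one subnet and that subnet does not overlap the existing LAN.

```python
import ipaddress

# Hypothetical addresses, for illustration only (not the ones from my setup).
existing_lan = ipaddress.ip_network("192.168.1.0/24")   # legacy 1G NICs
fiber_a = ipaddress.ip_interface("10.10.10.1/24")       # Machine A, 10G NIC
fiber_b = ipaddress.ip_interface("10.10.10.2/24")       # Machine B, 10G NIC


def same_subnet(a, b):
    """True if both interfaces are configured in the same subnet."""
    return a.network == b.network


def clashes_with(iface, other_network):
    """True if the interface's subnet overlaps another network."""
    return iface.network.overlaps(other_network)


print("Both ends on one subnet:", same_subnet(fiber_a, fiber_b))
print("Fiber subnet overlaps LAN:", clashes_with(fiber_a, existing_lan))
```

If the second line ever prints `True`, Windows could end up picking the wrong NIC for the transfer, which is exactly what the separate subnet is meant to rule out.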

I plugged it all in and installed the wired driver package from Intel's download site: Wired_driver_28.3_x64.zip

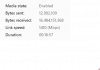

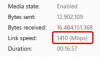

The cards are recognized and I've got lights on the NICs showing a 10G link, all good. Windows, though, shows this, depending on where you look. It's only been 40 years; give them some time to get link speed reporting working.

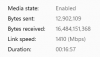

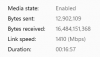

Here's where the disappointment comes:

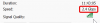

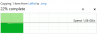

- iperf3 maxes out between the machines (in either direction) at about 6.5 Gbps. I have to use -w 1024k, otherwise it only gets 2.5 Gbps!

- Turning on jumbo frames (either 4088 or 9014) doesn't really affect the iperf results.

- A Windows File Explorer copy goes at about 830 MB/s, or 6.6 Gbps. It's good, just not great: about 200 MB/s shy of what I was hoping for.

- I was expecting/hoping to copy files over at 1000-1100 MB/s.
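To put those numbers in context, here is a rough sketch of the arithmetic behind my expectations. It assumes IPv4, no TCP options and no VLAN tag, and it assumes the Intel jumbo settings (4088 / 9014) include the 14-byte Ethernet header, so the MTUs would be 4074 and 9000. The round-trip time in the last function is a made-up input, not something I measured.

```python
# Rough ceiling arithmetic for TCP over 10 Gigabit Ethernet.
# Assumptions (not measured): IPv4, no TCP options, no VLAN tag.

LINE_RATE_BPS = 10e9                  # 10GbE line rate
WIRE_OVERHEAD = 7 + 1 + 14 + 4 + 12   # preamble, SFD, header, FCS, inter-frame gap
IP_TCP_HEADERS = 20 + 20              # IPv4 header + TCP header


def tcp_goodput_gbps(mtu):
    """Best-case TCP payload rate in Gbps for a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + WIRE_OVERHEAD
    return LINE_RATE_BPS * payload / on_wire / 1e9


def mb_per_s_to_gbps(mb_per_s):
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


def window_limited_gbps(window_bytes, rtt_seconds):
    """Upper bound when one window of data is in flight per round trip."""
    return window_bytes * 8 / rtt_seconds / 1e9


# MTU values assume the driver setting counts the 14-byte Ethernet header.
for label, mtu in [("standard", 1500), ("jumbo 4088", 4074), ("jumbo 9014", 9000)]:
    gbps = tcp_goodput_gbps(mtu)
    print(f"{label:>10}: {gbps:.2f} Gbps ({gbps * 1000 / 8:.0f} MB/s)")

print(f"Explorer copy: {mb_per_s_to_gbps(830):.2f} Gbps")
```

By this estimate, the best case at a standard 1500-byte MTU is a little under 9.5 Gbps, so 1000-1100 MB/s is not an unreasonable hope on paper. `window_limited_gbps` is there because throughput can never exceed window size divided by round-trip time, which might be part of why the `-w` setting matters so much; that is a guess on my part, not a diagnosis.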

So the real question is: has anyone got real-life experience of getting the full 9.x Gbps bandwidth between two Windows boxes, or even to just one? Is it possible? Am I wasting my time, or is this as good as it gets?

Would love to hear what others have achieved.

Thanks
