I have a Corsair 230T filled to the brim with 4 HDDs and 26 SSDs. Because of the physical limitations, I had to "cable manage" the HBA data cables through every gap I could find.

I am running a 5700G, B550 PG Riptide, 128 GB RAM, a 9300-16i, and a 9207-8i, along with the onboard SATA controller.

The drives are 26 DRAM-less Hikvision 1 TB SSDs and four 4 TB Reds.

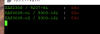

The problem lies with the 26-disk RAIDZ2 array. Once every 3-4 days, the array starts to complain about write errors, read errors, or checksum errors on one disk. I swap that disk for whatever offline replacement disk I have on hand, resilver, and the problem goes away. A few days later, a different disk shows the same errors. I replace the complaining disk with the "broken" disk I pulled out the previous time, resilver, and the problem goes away again.

This cycle has been repeating on and on for a month now. I am kind of frustrated.

I check the SMART data of the "broken" disks each time I pull them out, and they are all fine. I even went as far as running surface scans on them a few times, and those found nothing.
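Since the disks themselves check out, the next thing I want to track is which device names report errors each time, to see whether they cluster on one HBA or cable. Below is a minimal sketch of how that could be logged, assuming the usual `zpool status` column layout (`NAME STATE READ WRITE CKSUM`). The function name `devices_with_errors` is made up for illustration, and the counters are kept as strings because `zpool status` may abbreviate large values.

```python
import re

# Matches a device row in the "config:" section of `zpool status` output:
#   <indent>NAME  STATE  READ  WRITE  CKSUM  [optional note]
# Assumption: the state is one of the common vdev states listed here.
ROW = re.compile(
    r"^\s+(\S+)\s+(ONLINE|DEGRADED|FAULTED|OFFLINE|UNAVAIL|REMOVED)"
    r"\s+(\S+)\s+(\S+)\s+(\S+)"
)


def devices_with_errors(status_text):
    """Return {device_name: counters} for rows with any non-zero counter."""
    hits = {}
    for line in status_text.splitlines():
        m = ROW.match(line)
        if not m:
            continue  # header, pool summary, or free-text line
        name, state, read, write, cksum = m.groups()
        # Counters stay as strings; only exact "0" counts as clean.
        if (read, write, cksum) != ("0", "0", "0"):
            hits[name] = {
                "state": state,
                "read": read,
                "write": write,
                "cksum": cksum,
            }
    return hits
```

Feeding it the saved text of `zpool status` each time a disk faults, and noting which controller and port each reported device hangs off, would show whether the errors follow the disk or the slot.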

The motherboard firmware, HBA firmware, Proxmox, and TrueNAS Scale are all up to date. I run Scale virtualized in PVE and pass all storage-related PCIe devices through to Scale.

Any ideas? Pulling this thing apart is a massive hassle, so I wanted to get some thoughts before it comes to that.
