With RDMA, latency is not too bad over the network.

Sure, but without specific data, it's difficult to compare native PCI-E fabrics with HBA cards (which have PLX switch chips that I'd assume also introduce latency) to RDMA. Perhaps that's an opportunity for you to explore someday for us, dear readers.

Still, native PCI-E is going to be better; we just don't have data on how much better from anyone other than the vendors themselves, and I don't like non-independent numbers.

Here is the big thing: when you manage via a DPU, the VMs and containers on the host can be untrusted (think public cloud, or an enterprise cloud where people are running code from untrusted sources). Services such as accelerators, storage, or network bandwidth can be provisioned as needed. All of the VMs can have an encrypted connection to other infrastructure (think AWS VPC).

So like, I get what you are saying, but don't we already have that? I have a server at home with a Cisco VIC-1225. This is tech from 2013.

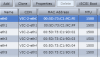

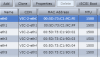

Cisco UCS Virtual Interface Card 1225 - Cisco

It has ports with physical MAC addresses like any other NIC.

But it also has vNICs that have their own:

Apparently these are on fire sale now. I can get them for $15, lol.

So, theoretically, I can have trusted and untrusted networks riding over the same physical fabric while staying totally isolated in hardware. So when I say I hear you, I really do, but that type of security doesn't require a dedicated ARM CPU.

Speaking of which, would you classify this as a SmartNIC, or as exotic in the continuum?

Moving on from my home setup... On the professional side of my life, I am doing this in production without anything even that fancy. Leveraging CAPWAP on Cisco APs, all of my "Guest" and "BYOD" traffic is sent through a GRE tunnel to a WLC that terminates the client traffic at L2 on a switch that's entirely air-gapped from my network.
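The tunnel configuration isn't shown here, but as a rough byte-level illustration of what carrying L2 guest traffic "through a GRE tunnel" means, here is a minimal Python sketch of GRE encapsulation per RFC 2784. It assumes the client's Ethernet frame is carried with protocol type 0x6558 (Transparent Ethernet Bridging); the function names are hypothetical, the outer IP header (IP protocol 47) that a real tunnel endpoint adds is omitted, and this is not a description of how Cisco's WLC actually builds its tunnel.

```python
import struct
from typing import Tuple

# Protocol Type values for the GRE header (RFC 2784 reuses EtherType numbering).
GRE_PROTO_IPV4 = 0x0800  # payload is an IPv4 packet
GRE_PROTO_TEB = 0x6558   # Transparent Ethernet Bridging: payload is a whole L2 frame


def gre_encapsulate(inner: bytes, proto: int = GRE_PROTO_TEB) -> bytes:
    """Prepend a minimal 4-byte GRE header: no checksum, key, or sequence, version 0."""
    flags_and_version = 0x0000
    return struct.pack("!HH", flags_and_version, proto) + inner


def gre_decapsulate(packet: bytes) -> Tuple[int, bytes]:
    """Strip a minimal GRE header and return (protocol type, inner payload)."""
    if len(packet) < 4:
        raise ValueError("packet too short for a GRE header")
    flags_and_version, proto = struct.unpack("!HH", packet[:4])
    if flags_and_version != 0:
        # Checksum/key/sequence bits (RFC 2784/2890) or a non-zero version
        # change the header layout; this sketch only handles the minimal form.
        raise ValueError("only the minimal GRE header is handled in this sketch")
    return proto, packet[4:]


if __name__ == "__main__":
    # A made-up 14-byte Ethernet header plus payload, standing in for a guest client's frame.
    frame = bytes.fromhex("ffffffffffff" "020000000001" "0800") + b"hello"
    packet = gre_encapsulate(frame)
    print(packet[:4].hex())  # 00006558
    proto, inner = gre_decapsulate(packet)
    print(hex(proto), inner == frame)  # 0x6558 True
```

The point for the isolation argument is that the guest's entire Ethernet frame rides as opaque payload, so it only re-enters an L2 domain where the tunnel terminates.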

From there, I have a VMware cluster with a couple of dedicated Intel X540-T2s (dirt cheap!) on a separate vSwitch plugged into that physical switch.

I use pfSense as the router and NAT, with its WAN IP in my DMZ. Granted, this is all only 10GbE, but I have a relatively high-performance network with tons of traffic riding on this solution all the time. No extra ARM CPUs are required.

The outlined solution doesn't require anything more than the bread-and-butter Intel X540, which just had its 10-year anniversary. Scaling up doesn't require anything exotic either; it would just require an XL710. Anything past that and the whole system would need to be rearchitected anyway in favor of a hardware firewall, so it's sorta irrelevant what NICs my servers have.

Granted, I am using PCI-E lanes and slots in our design, but that is less and less of a problem with modern hardware. Plus, I could always adopt PCI-E fabric tech. Maybe it's different in the cloud than on-prem in the datacenter, and the use case makes more sense there. But for me in "Cloud Last" land... what's the benefit?

The other big part is that none of this is running on the host x86 cores, so those are all freed up to allocate to workloads.

Also, to your point about using the non-x86 cores: sure, point taken. But what about licensing? Why would I spend a VMware license on a PCI-E card's CPU when I can use it on a 128-core EPYC?

Licensing aside, I think it would make more sense to take the price difference between a BlueField-2 AIC and a normal NIC and invest it in a higher-end CPU instead. If you do the math on CPU performance per dollar, I think it probably ends up in my favor here.

MSRP from Dell for a 100GbE card:

MSRP from NVIDIA for BlueField-2:

I can go from a 7313P to a 7502 and get double the relative CPU performance in my server node and still save $500.
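The prices quoted above didn't survive, so the comparison can't be reproduced here, but the arithmetic behind it can be sketched. This minimal Python example (class and function names are hypothetical) uses core count as a crude stand-in for "relative CPU performance": the EPYC 7313P has 16 cores and the EPYC 7502 has 32, though the 7313P has the higher base clock, so real-world scaling will be less than 2x for many workloads. Prices are left as inputs rather than assumed.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Build:
    name: str
    cpu_cores: int     # crude stand-in for "relative CPU performance"
    cpu_price: float   # fill in from a current quote; no prices are assumed here
    nic_price: float

    @property
    def total(self) -> float:
        return self.cpu_price + self.nic_price


def compare(current: Build, alternative: Build) -> Tuple[float, float]:
    """Return (core-count ratio, money saved) of `alternative` versus `current`."""
    core_ratio = alternative.cpu_cores / current.cpu_cores
    saved = current.total - alternative.total
    return core_ratio, saved
```

With a 7313P (16 cores) plus BlueField-2 as `current` and a 7502 (32 cores) plus a plain 100GbE NIC as `alternative`, the ratio is 2.0, and the claim above amounts to `saved` coming out around $500 with the quoted MSRPs.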

Think about it this way: later this year we will start seeing 200GbE/400GbE to nodes. At those speeds, just encrypting traffic requires an accelerator, or it will eat most of your CPU resources.
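To put the 200GbE/400GbE numbers in perspective, here is a small Python sketch of the line-rate arithmetic. It ignores Ethernet framing overhead (preamble, inter-frame gap, headers), so it slightly overstates the packet rate. The per-core cipher throughput in `cores_needed` is a hypothetical input: measure it on your own CPU, for example with `openssl speed -evp aes-256-gcm`, rather than trusting any fixed figure.

```python
import math


def line_rate(link_gbps: float, packet_bytes: int = 1500) -> dict:
    """Throughput figures at full line rate, ignoring Ethernet framing overhead."""
    bytes_per_sec = link_gbps * 1e9 / 8
    packets_per_sec = bytes_per_sec / packet_bytes
    # bits per packet divided by Gbit/s gives nanoseconds per packet
    ns_per_packet = packet_bytes * 8 / link_gbps
    return {
        "GB_per_sec": bytes_per_sec / 1e9,
        "Mpps": packets_per_sec / 1e6,
        "ns_per_packet": ns_per_packet,
    }


def cores_needed(link_gbps: float, per_core_crypto_gbps: float) -> int:
    """Cores required to encrypt at line rate, given a measured per-core cipher throughput."""
    return math.ceil(link_gbps / per_core_crypto_gbps)


if __name__ == "__main__":
    for speed in (100, 200, 400):
        print(speed, line_rate(speed))
```

At 400 Gb/s with 1500-byte packets this works out to 50 GB/s and roughly 33 million packets per second, a budget of 30 ns per packet, which is why encryption at that rate is a job for dedicated hardware.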

I recorded a demo of the Intel IPU on Monday that will go live in a few weeks. The host system just sees block NVMe devices and has no idea that they are actually being delivered over a 100GbE fabric; it is completely transparent.
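One way to observe what "transparent" means on a Linux host: the kernel's NVMe driver reports each controller's transport in sysfs (`/sys/class/nvme/nvmeX/transport`, with values such as `pcie`, `rdma`, or `tcp`). A host running NVMe-oF itself would show `rdma` or `tcp` there. If the IPU presents an emulated NVMe controller over PCIe, as described above, the host would presumably report `pcie`; that is an assumption, not something checked against the hardware in the demo. The function name below is hypothetical.

```python
from pathlib import Path
from typing import Dict


def nvme_transports(sysfs_root: str = "/sys/class/nvme") -> Dict[str, str]:
    """Map each NVMe controller (nvme0, nvme1, ...) to the transport the kernel reports."""
    transports: Dict[str, str] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return transports  # no NVMe driver loaded, or not a Linux host
    for ctrl in sorted(root.iterdir()):
        attr = ctrl / "transport"
        if attr.is_file():
            transports[ctrl.name] = attr.read_text().strip()
    return transports


if __name__ == "__main__":
    for name, transport in nvme_transports().items():
        # "pcie" looks like a local drive; "rdma"/"tcp"/"fc" means NVMe-oF
        # is being handled by the host's own CPU and network stack.
        print(name, transport)
```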

I hear you and agree with what you are saying here. But then why expose the ARM CPU to customers at all? Surely dedicated ASICs would be better suited to that task. You yourself proved that when Intel released QAT:

Intel QuickAssist Technology and OpenSSL - Benchmarks and Setup Tips (servethehome.com).

Thank you as always for humoring me, @Patrick. I always appreciate your insight, and I know I am a pain in the butt.