Any chance you could share the STEP file? I was thinking about how best to rackmount multiple MS-01s, and your setup is by far the cleanest.

I have 5x MS01 in a Proxmox cluster, running about 40 "permanent" always-on VMs and 10 always-on LXC containers. Storage is 15x 2TB Crucial T500 (3 per MS01). Ceph runs its public and backend networking on bond1, which is 2x 10Gb on each node. No transceivers; I'm using direct-attach cables from each MS01 to the 10G switch. Bond0 is 2x 2.5Gb on each MS01. Both bond0 and bond1 are LACP.
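As a rough illustration of what 15x 2TB means for Ceph, here is a minimal capacity sketch. The post does not state the pool type or replica count, so the replicated pool with `size=3` and the 80% fill target below are assumptions, not the poster's settings.

```python
# Rough capacity estimate for the cluster described above.
# ASSUMPTIONS (not stated in the post): a replicated pool with size=3,
# and a target of filling the OSDs to at most 80%.

NODES = 5
DRIVES_PER_NODE = 3
DRIVE_TB = 2.0        # Crucial T500 2TB, decimal terabytes
REPLICA_SIZE = 3      # assumed Ceph pool "size"
FILL_TARGET = 0.80    # assumed headroom for rebalancing


def ceph_capacity_tb(nodes, drives_per_node, drive_tb, replica_size, fill_target):
    """Return (raw, usable, practical) capacity in TB for a replicated pool."""
    raw = nodes * drives_per_node * drive_tb
    usable = raw / replica_size          # each object is stored replica_size times
    practical = usable * fill_target     # leave room for recovery and rebalancing
    return raw, usable, practical


raw, usable, practical = ceph_capacity_tb(
    NODES, DRIVES_PER_NODE, DRIVE_TB, REPLICA_SIZE, FILL_TARGET
)
print(f"raw: {raw:.1f} TB")
print(f"usable at size={REPLICA_SIZE}: {usable:.1f} TB")
print(f"practical at {FILL_TARGET:.0%} fill: {practical:.1f} TB")
```

With a different replica count or an erasure-coded pool the usable figure changes, so treat the numbers as a starting point only.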

Of the 40 VMs running, really just a mix of everything I need. DHCP, DNS, active directory, a Kubernetes worker on each node, Oracle databases, nested ESXi and vCenter, Proxmox Backup Server, pfSense, Plex, iVentoy (PXE boot version of Ventoy), AzureDevops (git repository), MeshCommander, MinIO, Kasm, a lot of stuff. Kubernetes hosts a bunch of databases, media manager, Guacamole, FreshRSS and probably 50 other deployments in total.

I have them mounted vertically in 6U of a super shallow rack, 12 inches deep. The front of the rack has 6U of fans pushing air directly into the front of the MS01s. To get the most air through them, I designed and 3D printed a custom carrier for the MS01. The MS01s slide into place and lock with a latch at the back of the rack.

The cluster also has 5x i7 Intel NUCs, not because I designed it that way, but because I happen to have them already. Currently the GPUs are Thunderbolt attached to the i7 NUCs and using PCI passthrough for the VMs running Ollama, Open-Webui, Stable Diffusion, ComfyUI, Blender rendering and PiperTTS voice training - mostly.

The rack will get a lot cleaner once I design the mounts for the NUCs, or just get rid of them and finish the rack with more MS01s. The single 1Gb NIC on the NUCs really limits their usefulness. I also need to design and make the mounts for that growing collection of power bricks under the switch. They aren't getting any cooling currently, but the MS01s and NUCs are icy cold in normal operation.


Minisforum MS-01 PCIe Card and RAM Compatibility Thread

- Thread starter Patrick


Just to confirm that the i5-12600H variant supports 96GB of RAM. Tested with memtest for 24+ hours.

Mushkin Enhanced Redline 96GB (2 x 48GB) DDR5-5600 (PC5 44800) 262-pin SODIMM, model MRA5S560LKKD48GX2 (Newegg.com).

I'm going to buy a third MS-01 next month and, given the 50% failure rate for the Crucial 96GB kit, I'm going to try this one instead.

Here you go @Neb . Original Solidworks file, STEP and STL if you just want to print them directly. The steel shelf that the 3D printed holders are mounted in can be found here:

https://www.amazon.com/dp/B07Z7N5XFH?ref=ppx_yo2ov_dt_b_product_details&th=1


Can confirm this config works:

MS-01 12900

- Crucial 96GB Kit (2x48GB) DDR5 CT2K48G56C46S5

- Samsung 990 Pro 2TB

- QNAP-TL-R400S (Rackmount-version) incl QXP-400eS card and SFF-8088 cable

- HGST Ultrastar He10: 4x (ZFS mirrored Raid10)

- WD Red SN700 2TB: 2x (ZFS mirrored SLOG)

- QIANRENON 4K HDMI Dummy

- Proxmox 8.2.2

- Meshcommander 0.9.5

Draws about 60W, including about 4x 8W for the spinning disks, with a first few LXCs and Docker containers running (Portainer, AdGuard, Caddy, CUPS, MediaWiki, Homepage, ...). More to come.
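The power figures above can be split out with a little arithmetic. This is only a sketch using the two numbers given in the post (about 60W total, about 8W per spinning disk); the always-on, constant-load assumption behind the yearly figure is mine.

```python
# Split the reported ~60 W draw into disks and everything else.
# ASSUMPTION: the box runs 24/7 at a constant ~60 W for the yearly estimate.

TOTAL_W = 60.0        # reported total draw
DISKS = 4             # HGST Ultrastar He10 drives
W_PER_DISK = 8.0      # reported approximate draw per spinning disk
HOURS_PER_YEAR = 24 * 365

disk_w = DISKS * W_PER_DISK               # power attributed to the disks
rest_w = TOTAL_W - disk_w                 # MS-01, NVMe, HBA and enclosure overhead
annual_kwh = TOTAL_W * HOURS_PER_YEAR / 1000

print(f"disks: {disk_w:.0f} W, everything else: {rest_w:.0f} W")
print(f"energy per year at constant load: {annual_kwh:.1f} kWh")
```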

The SFP+ connector acts as a heat sink ...


@jro77 @Pikeman1868 I just tested my MS-01 (1.22 BIOS) again with the Sparkle Intel Arc A310 Eco and it POSTs.

Very odd. That's the card I have.

Will check the bios version and see what's doing.

Very strange. I'm running 1.22 as well.

Here is the lspci output from Proxmox:

Bash:

00:00.0 Host bridge [0600]: Intel Corporation Device [8086:a706]

00:01.0 PCI bridge [0604]: Intel Corporation Device [8086:a70d]

00:06.0 PCI bridge [0604]: Intel Corporation Raptor Lake PCIe 4.0 Graphics Port [8086:a74d]

00:06.2 PCI bridge [0604]: Intel Corporation Device [8086:a73d]

00:07.0 PCI bridge [0604]: Intel Corporation Raptor Lake-P Thunderbolt 4 PCI Express Root Port [8086:a76e]

00:07.2 PCI bridge [0604]: Intel Corporation Raptor Lake-P Thunderbolt 4 PCI Express Root Port [8086:a72f]

00:0d.0 USB controller [0c03]: Intel Corporation Raptor Lake-P Thunderbolt 4 USB Controller [8086:a71e]

00:0d.2 USB controller [0c03]: Intel Corporation Raptor Lake-P Thunderbolt 4 NHI [8086:a73e]

00:0d.3 USB controller [0c03]: Intel Corporation Raptor Lake-P Thunderbolt 4 NHI [8086:a76d]

00:14.0 USB controller [0c03]: Intel Corporation Alder Lake PCH USB 3.2 xHCI Host Controller [8086:51ed] (rev 01)

00:14.2 RAM memory [0500]: Intel Corporation Alder Lake PCH Shared SRAM [8086:51ef] (rev 01)

00:16.0 Communication controller [0780]: Intel Corporation Alder Lake PCH HECI Controller [8086:51e0] (rev 01)

00:16.3 Serial controller [0700]: Intel Corporation Alder Lake AMT SOL Redirection [8086:51e3] (rev 01)

00:1c.0 PCI bridge [0604]: Intel Corporation Alder Lake-P PCH PCIe Root Port [8086:51bb] (rev 01)

00:1c.4 PCI bridge [0604]: Intel Corporation Device [8086:51bc] (rev 01)

00:1d.0 PCI bridge [0604]: Intel Corporation Alder Lake PCI Express Root Port [8086:51b0] (rev 01)

00:1d.2 PCI bridge [0604]: Intel Corporation Device [8086:51b2] (rev 01)

00:1d.3 PCI bridge [0604]: Intel Corporation Device [8086:51b3] (rev 01)

00:1f.0 ISA bridge [0601]: Intel Corporation Raptor Lake LPC/eSPI Controller [8086:519d] (rev 01)

00:1f.3 Audio device [0403]: Intel Corporation Raptor Lake-P/U/H cAVS [8086:51ca] (rev 01)

00:1f.4 SMBus [0c05]: Intel Corporation Alder Lake PCH-P SMBus Host Controller [8086:51a3] (rev 01)

00:1f.5 Serial bus controller [0c80]: Intel Corporation Alder Lake-P PCH SPI Controller [8086:51a4] (rev 01)

01:00.0 PCI bridge [0604]: Intel Corporation Device [8086:4fa1] (rev 01)

02:01.0 PCI bridge [0604]: Intel Corporation Device [8086:4fa4]

02:04.0 PCI bridge [0604]: Intel Corporation Device [8086:4fa4]

03:00.0 VGA compatible controller [0300]: Intel Corporation DG2 [Arc A310] [8086:56a6] (rev 05)

04:00.0 Audio device [0403]: Intel Corporation DG2 Audio Controller [8086:4f92]

05:00.0 Non-Volatile memory controller [0108]: Kingston Technology Company, Inc. OM8PGP4 NVMe PCIe SSD (DRAM-less) [2646:501b]

06:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ [8086:1572] (rev 02)

06:00.1 Ethernet controller [0200]: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ [8086:1572] (rev 02)

5b:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I226-V [8086:125c] (rev 04)

5c:00.0 Non-Volatile memory controller [0108]: MAXIO Technology (Hangzhou) Ltd. NVMe SSD Controller MAP1602 [1e4b:1602] (rev 01)

5d:00.0 System peripheral [0880]: Global Unichip Corp. Coral Edge TPU [1ac1:089a]

5e:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I226-LM [8086:125b] (rev 04)

5f:00.0 Network controller [0280]: MEDIATEK Corp. MT7922 802.11ax PCI Express Wireless Network Adapter [14c3:0616]

The 03:00.0 VGA compatible controller (DG2 [Arc A310]) and the 04:00.0 Audio device (DG2 Audio Controller [8086:4f92]) are the PCI devices from the card.
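For anyone comparing listings like the one above between machines, here is a small sketch that parses this style of lspci output (slot, class, name, `[vendor:device]`, optional revision) and picks out the Arc A310 entries. The regular expression and the `parse_lspci` helper are my own illustration rather than part of any tool, and the sample lines are copied from the listing above.

```python
import re

# One line of numeric-annotated lspci output, e.g.
# "03:00.0 VGA compatible controller [0300]: Intel ... [8086:56a6] (rev 05)"
LSPCI_LINE = re.compile(
    r"^(?P<slot>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+"
    r"(?P<cls>.+?)\s+\[(?P<class_id>[0-9a-f]{4})\]:\s+"
    r"(?P<name>.+)\s+\[(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})\]"
    r"(?:\s+\(rev (?P<rev>[0-9a-f]{2})\))?$"
)

SAMPLE = """\
00:1f.3 Audio device [0403]: Intel Corporation Raptor Lake-P/U/H cAVS [8086:51ca] (rev 01)
03:00.0 VGA compatible controller [0300]: Intel Corporation DG2 [Arc A310] [8086:56a6] (rev 05)
04:00.0 Audio device [0403]: Intel Corporation DG2 Audio Controller [8086:4f92]
06:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ [8086:1572] (rev 02)
"""


def parse_lspci(text):
    """Parse lspci lines into dicts; lines that do not match are skipped."""
    devices = []
    for line in text.splitlines():
        match = LSPCI_LINE.match(line.strip())
        if match:
            devices.append(match.groupdict())
    return devices


devices = parse_lspci(SAMPLE)
# The discrete card shows up as two functions whose names contain "DG2".
arc = [d for d in devices if "DG2" in d["name"]]
for d in arc:
    print(d["slot"], d["cls"], f'{d["vendor"]}:{d["device"]}')
```

If the card is missing from a machine that fails to POST with it, the same filter returns an empty list, which makes the two cases easy to tell apart.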

And lshw:

Bash:

root@proxmox-ms01:~# lshw -c video

*-display UNCLAIMED

description: VGA compatible controller

product: DG2 [Arc A310]

vendor: Intel Corporation

physical id: 0

bus info: pci@0000:03:00.0

version: 05

width: 64 bits

clock: 33MHz

capabilities: pciexpress msi pm vga_controller bus_master cap_list

configuration: latency=0

resources: iomemory:400-3ff memory:6d000000-6dffffff memory:4000000000-40ffffffff memory:6e000000-6e1fffff

*-graphics

product: simpledrmdrmfb

physical id: 4

logical name: /dev/fb0

capabilities: fb

configuration: depth=32 resolution=800,600
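Note the `UNCLAIMED` marker on the display node: lshw prints it when no kernel driver is bound to the device. A minimal sketch for spotting such nodes in saved lshw text follows; the `unclaimed_products` helper is hypothetical and the sample is trimmed from the output above.

```python
SAMPLE_LSHW = """\
*-display UNCLAIMED
description: VGA compatible controller
product: DG2 [Arc A310]
vendor: Intel Corporation
bus info: pci@0000:03:00.0
*-graphics
product: simpledrmdrmfb
logical name: /dev/fb0
"""


def unclaimed_products(lshw_text):
    """Return the product string of every lshw node marked UNCLAIMED."""
    products = []
    node_unclaimed = False
    for raw in lshw_text.splitlines():
        line = raw.strip()
        if line.startswith("*-"):
            # A new node starts; remember whether lshw flagged it.
            node_unclaimed = line.endswith("UNCLAIMED")
        elif node_unclaimed and line.startswith("product:"):
            products.append(line.split(":", 1)[1].strip())
    return products


print(unclaimed_products(SAMPLE_LSHW))
```

An unclaimed GPU is not necessarily a fault. It only says that the host has no driver attached to it at that moment.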


Oh so I missed that. Sorry, English isn’t my mother tongue.

This works:

Qnap QM2-2P-384 perfectly fits the bill, although somewhat pricey. If you go for this card, double check to get the older version QM2-2P-384, and not QM2-2P-384A. The newer one does not fit, it is longer.

I have searched but could not find the answer. First post, enjoying my MS01 so far.

I am looking to add a U.2 7mm drive. If I add that, which M.2 NVMe slot do I lose?

I have all three NVMe slots filled. Do I lose the Gen4 x4 slot? Thanks for the help.

The leftmost slot is the U.2/M.2 combo port. A U.2 converter should have come in the box.

Small update for the i5-12600H.

18.6w idle usage on Proxmox with no VMs or LXCs running.

96gb ram, pm9a3 3.84tb u.2 and 2 x 2.5gb connected. Nothing disabled in bios.


I just bought three Minisforum MS-01s and I'm using the previously mentioned

- Crucial CT48G56C46S5 48GB DIMMs

I've not installed any storage, video cards, etcetera. When I start up the box, I don't get a video signal and it doesn't appear to POST. Has this RAM been verified by other MS-01 owners to work? It's possible I have bad RAM. I'll head to Microcenter this week, pick up a small RAM kit to test this out, and report back.

It can take a very long time to boot once you make a hardware change; mine easily stays off for 30 seconds when I change the RAM. I have a PCIe GPU, and I have no idea if that's normal or not, but subsequent boots are OK.

The system usually takes 1 or 2 minutes for the first boot-up due to memory training.

I've bought four of these 96GB kits from Crucial and two of them had a bad SODIMM. The kit for my first MS-01 had a bad SODIMM, so I ordered a replacement and both SODIMMs in that second kit worked. Then I bought a second MS-01 and another 96GB kit, which also had a bad SODIMM, but the replacement had two working SODIMMs.

These had the same symptom: no display after a few minutes. With the bad kits I tested one SODIMM at a time, and once I had determined that one was working I tested it in the other slot to make sure both RAM slots worked. I'm going to order a third MS-01 and I'm thinking I might get the Mushkin kit some people are using instead.

Do you have anything in the PCIe slot? It might not be the RAM.

No, it was definitely the RAM. As I said, I tested each SODIMM individually and the replacement kits all worked. I tested the RAM before I added my PCIe cards and NVMe storage.
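To put the reported failures in numbers: four kits were bought, and two of them contained one bad SODIMM each. A small sketch of the per-kit and per-module rates follows; the sample is tiny, so these are one poster's counts and not a general failure rate.

```python
from fractions import Fraction

# Counts taken from the post above: 4 kits of 2 SODIMMs, 2 kits with one bad module.
KITS_BOUGHT = 4
MODULES_PER_KIT = 2
KITS_WITH_BAD_MODULE = 2
BAD_MODULES = 2  # one bad SODIMM in each affected kit

kit_failure_rate = Fraction(KITS_WITH_BAD_MODULE, KITS_BOUGHT)
module_failure_rate = Fraction(BAD_MODULES, KITS_BOUGHT * MODULES_PER_KIT)

print(f"kits with a bad SODIMM: {float(kit_failure_rate):.0%}")
print(f"bad SODIMMs overall:    {float(module_failure_rate):.0%}")
```

The 50% figure quoted in the thread is the per-kit rate; per module it is half that.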

Did not work

M.2 Accelerator with Dual Edge TPU (E-Key) in wifi slot

I was hoping one of the two TPUs would show up.

Worked

LSI9300-8E

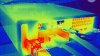

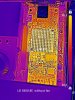

Here are some thermal images with no fan and with a cheap 50mm fan from amazon (Gdstime GDB5010).

I 3D printed a mount for it. I was originally planning on making it friction fit the heat sink but I didn't have the card to measure, so I used 1 screw into the heatsink fins to attach it.

Crucial RAM 96GB Kit (2x48GB) CT2K48G56C46S5 also worked for me.


Has anyone tried this RAM? TEAMGROUP Elite SODIMM DDR5 64GB (2x32GB) 5600MHz (PC5-44800) CL46 Non-ECC Unbuffered 1.1V 262 Pin Laptop Memory Module Ram - TED564G5600C46ADC-S01. It's CL46-45-45, same as the Crucial specs. I don't think I can justify the price jump to go 2x48GB.

I'll give it another try. Shame I already put these systems into my rack before trying everything out....

Reporting back -- the RAM is good! Thank you for your help!

Now I just have to research the SSD sleds and figure out why my Samsung / Sabrent 4TB SSDs aren't being recognized in the MS01, but they are recognized by my MBP via USB adapter.

The M.2 slider is in the correct position, so it isn't that. I don't see any additional power setting in the instruction manual. Hmm. Drive initialization on my MBP works. Shame I don't have an NVMe adapter that would work with this sled.


After messing with a handful of dual M.2 PCIe cards, I finally got one that fits the MS-01 with bifurcation running on the card itself.

TXB122 PCIe 3.1 x8 ASM2812 from AliExpress

Now have four 4TB storage drives and a 1TB as the boot drive.

Most cards with bifurcation are too long, but this one has a perforation on the PCB so you can snap the end off if you don't need to mount 22110 NVMe drives. I also did the mod of covering the PCIe pins with Kapton tape, so all of my 64GB of RAM shows up just fine.

Ran some benchmarks, and the two drives on the PCIe card read about twice as fast as the two drives in the native M.2 slots (writes are the same).

Now off to test redundant software RAIDs.
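The post doesn't say how each drive is linked, so the cause of the read gap is not established here. One thing worth checking is the negotiated PCIe generation and lane count per drive, because the theoretical ceiling scales with both. A minimal sketch of that arithmetic follows; the generation and width combinations printed are illustrative assumptions, not measurements from this machine.

```python
# Theoretical PCIe link bandwidth per direction.
# Per-lane rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding
# are the standard figures for those generations.
# ASSUMPTION: the link combinations printed below are only examples.

GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_gbytes_per_s(gen, lanes):
    """Return the theoretical one-way bandwidth in GB/s for a PCIe link."""
    gbits = GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes
    return gbits / 8  # bits to bytes


for gen, lanes in [(3, 2), (3, 4), (4, 4)]:
    print(f"Gen{gen} x{lanes}: {link_gbytes_per_s(gen, lanes):.2f} GB/s")
```

A drive on a link with half the lanes or one generation lower has half the ceiling, which is one possible reason for a 2x difference in sequential reads. Protocol overhead means real throughput lands below these figures.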
