AI build.

- Thread starter spyroot

Nice job, nice job. One hint: if x16 bandwidth isn't necessary, there's a hack (which I haven't tested, but it should work just fine) to decouple the GPUs and split the load: a USB-A to USB-A AOC (fiber) cable with risers. This shouldn't be like an Ethernet media converter; it just carries the pin signals across. There are also full-bandwidth (but expensive!!!) solutions to extend PCIe to another external chassis via fiber, again to decouple things and use cheaper, less risky solutions (if the PSU powering those electrically decoupled 2-3 4090s fails, those cards just fall off the bus without any fire risk).

I was actually searching for alternative options. I found one company but then lost the details; they sell many different options for PCIe 5.0 fabrics and boards, but they are crazy expensive. I think the ideal is decoupled power, a PCIe fabric (an optical solution would be ideal here), and the motherboard. I checked the OpenCompute 3.0 specs, and there are many good ideas there. I think a decent full-rate x16 PCIe riser with a good 6-pin input would solve many issues, but of course passive optical would be a much better solution.

Very cool! I was always curious how I could add more PSUs to the same system, since these 4090s connect directly to the PCIe slots (not risers), so the power supply needs to come from one single source. This 4-to-1 combiner board is the key.

For PCIe to EPS, I bought 4 of these Power Cable 030-0571-000 for my Tesla P40s. These are original NVIDIA parts and feel pretty solid, but I'm not sure of the rating of these cables. Many Tesla cards use EPS instead of PCIe connectors. I guess this 2x PCIe to 1x EPS converter fits the needs of CPUs.

@spyroot How loud are those 2400W PSUs?

Zero noise; I don't hear anything. The switch fan makes more noise. The Dell PSU is crazy loud (like a jet engine when you load the system), and the same goes for HP (i.e., typical server room noise).

But if you use a Noctua fan, you can reduce it significantly. Either way, I recommend this Delta. It takes more space, but it has three fans, and it draws intake air.

I'm going to train my model this week, so it will be about 7-10 days of training time, and we will see. I'll post an update.

(Also, I over-provisioned capacity: 3 GPUs are linked to the same PSU, so that is about 1800 watts for 3 GPUs if you remove the power limit of the 4090.)
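As a rough sanity check on that power budget, here is a minimal Python sketch. It assumes roughly 600 W per GPU (1800 W split over 3 cards, from the numbers above) and the 2400 W PSU rating mentioned earlier in the thread. The function name `psu_load_fraction` is just for illustration, and CPU, motherboard, and conversion losses are ignored.

```python
def psu_load_fraction(gpu_count: int, watts_per_gpu: float, psu_watts: float) -> float:
    """Return the fraction of PSU capacity used by the GPUs alone."""
    if psu_watts <= 0:
        raise ValueError("psu_watts must be positive")
    return gpu_count * watts_per_gpu / psu_watts


# Assumed numbers: ~600 W per unlocked 4090, one 2400 W server PSU.
load = psu_load_fraction(gpu_count=3, watts_per_gpu=600, psu_watts=2400)
print(f"GPU load on PSU: {load:.0%}")
```

With these assumed numbers the three cards use three quarters of the PSU rating, so there is still headroom for transient spikes.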

Be careful: the cable that you shared will not work with any of these boards. I was referring to EPS12V, i.e., the EPS12V connector on the motherboard. All new boards have 2x EPS12V on the motherboard plus a 6-pin.

On EPS12V, pins 5/6/7/8 are 12 V and pins 1/2/3/4 are ground (black). The PSU board is wired straight on all server breakout boards, so 3x 12 V + 3x black. So if you make a cable yourself, the 3x 12 V from the breakout must go straight to the 4x 12 V (pins 4/5/6/7). On yours, notice the black jumper on the PCIe side of the cable; that is what makes it a PCIe cable.

I think those cables take 4 pins from the PDB to the GPUs, and they are probably rated 16 AWG if they are original Supermicro, since that lets you get more out of the PDB's 8-pin output.

What you need is more or less this: 4 Pin to 8 Pin EPS Power Adapter - Computer Power Cables - Internal | StarTech.com Europe. But then you need to feed it from the 6-pin board to Molex and a separate four pins, which will take two 6-pin outputs (from the breakout board).
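To make the "12 V must land on 12 V" rule concrete, here is a small Python sketch that checks a planned cable on paper before you crimp anything. The EPS12V table follows the pinout described above (pins 1-4 ground, 5-8 12 V). The 6-pin breakout layout in `BREAKOUT_6PIN` is purely a placeholder I made up for illustration, not the pinout of any real board, so measure yours with a multimeter and edit the table.

```python
# EPS12V 8-pin as described in the post: pins 1-4 ground, pins 5-8 +12 V.
EPS12V = {1: "GND", 2: "GND", 3: "GND", 4: "GND",
          5: "12V", 6: "12V", 7: "12V", 8: "12V"}

# HYPOTHETICAL 6-pin breakout layout, for illustration only.
# Measure your own board with a multimeter before trusting any table like this.
BREAKOUT_6PIN = {1: "12V", 2: "12V", 3: "12V", 4: "GND", 5: "GND", 6: "GND"}


def check_cable(wires, source=BREAKOUT_6PIN, target=EPS12V):
    """Return a description of every wire that joins 12 V to ground.

    wires: list of (source_pin, target_pin) tuples, one per conductor.
    """
    problems = []
    for src_pin, dst_pin in wires:
        if source[src_pin] != target[dst_pin]:
            problems.append(
                f"pin {src_pin} ({source[src_pin]}) -> pin {dst_pin} ({target[dst_pin]})"
            )
    return problems


# 3x 12 V feeding the four 12 V pins, 3x ground feeding the four ground pins.
good = [(1, 5), (2, 6), (3, 7), (3, 8), (4, 1), (5, 2), (6, 3), (6, 4)]
# A naive pin-for-pin cable, which puts 12 V on ground pins in this layout.
naive = [(pin, pin) for pin in range(1, 7)]

print(check_cable(good))
for line in check_cable(naive):
    print("MISMATCH:", line)
```

This only checks the plan; it is not a substitute for a tester or a multimeter on the finished cable.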

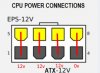

I guess this is the diagram for the EPS-12V pin, right?

Yes, it is just straight (no jumpers between pins, etc.), but note that ATX PSUs always flip the cables, so don't use any CPU cables from an ATX PSU if you are using server PSU breakouts.

Many cables you find online essentially take 2x 18 AWG wires and push them into an 8-pin cable instead of using 4x 16 AWG lines.
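To put rough numbers on that gauge difference, here is a short Python sketch using the standard AWG diameter formula. It compares total copper cross-section for 2x 18 AWG against 4x 16 AWG, which is my reading of the comparison above; the function names are only for illustration, and it says nothing about insulation, connector, or crimp quality.

```python
import math


def awg_diameter_mm(awg: int) -> float:
    """Standard AWG formula: diameter in mm of a solid round conductor."""
    return 0.127 * 92 ** ((36 - awg) / 39)


def awg_area_mm2(awg: int) -> float:
    """Cross-sectional area in mm^2 of a single conductor of the given gauge."""
    d = awg_diameter_mm(awg)
    return math.pi * d * d / 4


thin = 2 * awg_area_mm2(18)   # two 18 AWG conductors
thick = 4 * awg_area_mm2(16)  # four 16 AWG conductors

print(f"2x 18 AWG: {thin:.2f} mm^2 of copper")
print(f"4x 16 AWG: {thick:.2f} mm^2 of copper")
print(f"ratio: {thick / thin:.1f}x")
```

The 4x 16 AWG bundle carries about three times the copper, which is why the thin cables run hotter at the same current.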

For example, this one is a good cable, but it has eight pins on one side (i.e., the side going to the PDB board).

Supermicro 8-Pin CPU to 8-Pin CPU 38cm Right Angle Power GPU Cable (CBL-PWEX-0923)

Copper Cable Assembly 8-Pin (P3.0) to 8-Pin (P4.2) Power Cable Supermicro Certified

So for the breakout board, you need to cut that side and convert that 8-pin to a 6-pin.

And use this tester before connecting anything to the motherboard, as a sanity check.

Got it, thanks, the tester is super handy. I learned the hard way that not all ATX cables are equal, even within the same brand (EVGA). (I burned 3 hard drives with the wrong ATX -> SATA power cable before; the pinout on the PSU side differs between the two models.)

I did the same thing 10 years ago: used a manufacturer A cable on a manufacturer B modular PSU, magic smoke and everything.

These days, for custom builds, I have to make EPS cables from 6-pin GPU cables and vice versa all the time, so I use a multimeter and an ATX pin extractor tool.

Mad scientist vibes; excellent! With all your caution over the power path, be careful about Noctua fans on the PSUs; their static pressure is minuscule compared to the stock fans. You could use a big, quiet 140mm fan and a fabbed/3D-printed shroud funnelling into the PSU.

That was my concern as well. But the Delta I use has 3 fans, and last weekend I drilled a small hole and put a thermal sensor in. Basically, I added an additional sensor inside the PSU to monitor temperature under load.

I'll be implementing a very ugly solution for it soon with APC NetBotz + a smoke alarm. I obviously tested all of my setups, most importantly the joint points (connections) of the various "hot chips", with a thermal camera to make sure they cope well under max load for over 30 minutes at a stable temperature. Realistically, you can add external thermal cameras with monitoring for early warning, but I'm a lazy ass.

My setups are as cheap as it gets: just a single 2-2.4 kW PSU for 2 4090s and the motherboard, which gives the highest efficiency for those PSUs (50% load is the sweet spot for most if not all PSUs).

Seems like you went with a Xeon

Intel Xeon Platinum 8468 ES for $210

For context, I'm debating between Sapphire Rapids and Genoa-based platforms right now for a 4 GPU setup.

Did you build this with a Sapphire Rapids chip? If so, which Xeon CPU did you go for with the LGA4677 socket? I'm seeing some engineering samples on eBay that are looking tantalizing.

The server PSU is more or less cheap (used, of course). You can get a Delta 2400 for 100-200 USD, and that PSU is ten times better than any ATX. If you are using Dell/HP, you probably want to replace the fan with a Noctua to reduce noise (an additional cost).

The breakout board costs 45 USD, so it is cheap as well. Or use the one I showed without any board, just a connector, and use your own cable.

Power Supply Kit Archives - Parallel Miner

Customized server power supply kit for ASIC miners and GPU rigs. www.parallelminer.com

Deep In The Mines LLC

We are a Biostar authorized distributor. We sell motherboards, power supplies, cables, and breakout boards used for cryptocurrency mining. www.deepinthemines.com

I ordered the cables from China, 14 AWG / 16 AWG. You have to wait a bit, but you will get good-quality cables. Check the reviews; people who buy just cables check the quality five times : )

This tool does magic

The most expensive part is the motherboard, which costs 1100-1400 USD. (I would not recommend using 790 chipsets, since it's a gamble on CPU support, so stay with C741.) (Consider that 12 months from now, you will get a Platinum Xeon CPU on eBay for 200-300 USD.)

You can find DDR5 RDIMM for a reasonable price. Actually, DDR5 memory for servers is cheaper than the "sexy" overclocked DDR5 for gamers.

GPUs are a rabbit hole, really... (I have done this for many years, so I have many GPUs for research systems; it was not a single one-time investment.) I guess investing makes more sense if you need to confirm your research, do many runs, and don't have free access to TPU/GPU resources.

Lastly, I did a lot of benchmarks and comparisons; if you want to use Ada, it beat the A100 that I tested every single time.

Now the pump, rads, etc. I just moved, but it was expensive.

I love this pump (but I hate the SATA on the back). (Note the nice part: the pump has Linux integration, so you can get all the data from lm-sensors.)
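For anyone who wants to log those readings, here is a minimal Python sketch that reads temperatures straight from the kernel's hwmon sysfs tree, which is where lm-sensors gets its data. It assumes the pump's driver exposes `temp*_input` files there (values are in millidegrees Celsius). The function name is mine, and chip names will differ per system.

```python
from pathlib import Path


def read_hwmon_temps(root="/sys/class/hwmon"):
    """Return {(chip_name, sensor_file): degrees_C} for every temp*_input found."""
    readings = {}
    for chip in sorted(Path(root).glob("hwmon*")):
        name_file = chip / "name"
        name = name_file.read_text().strip() if name_file.exists() else chip.name
        for sensor in sorted(chip.glob("temp*_input")):
            try:
                millideg = int(sensor.read_text().strip())
            except (OSError, ValueError):
                continue  # sensor not readable right now, skip it
            readings[(name, sensor.name)] = millideg / 1000.0
    return readings


if __name__ == "__main__":
    for (chip, sensor), temp in read_hwmon_temps().items():
        print(f"{chip:20s} {sensor:15s} {temp:6.1f} C")
```

Run it in a loop during a training job and you get a simple temperature log without extra tooling.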

ULTITUBE D5 100 PRO LEAKSHIELD reservoir with D5 NEXT pump and leak protection system - Aqua Computer

ULTITUBE D5 100 PRO LEAKSHIELD reservoir with D5 NEXT pump and leak protection system: The reservoirs of the ULTITUBE series are equipped with a tube made of borosilicate glass, which, in contrast to the Plexiglas often used for tube reservoirs, has a very high hardness... shop.aquacomputer.de

Rads are expensive, but they do magic. (Note the build quality; you buy once for the next ten years.)

For the CPU, I use this. Expensive, but not crazy.

13090 Alphacool ES Jet LGA 4677 2U (SP5 optional)

Server and workstation optimized CPU cooler for Intel Xeon with 4 nozzles technology for high performance and reliable cooling. shop.alphacool.com

So the main cost is GPUs.

P.S. I also forgot:

A source for all crazy types of nuts, T-slots, brackets, etc. : )))

8020 T-Slot Product Resources | Extruded Aluminum Building Systems

Learn about 80/20 t-slot profiles and parts, including fasteners, panels, casters, accessories, & more. Visit our site for more info on your build needs. 8020.net
