Does the following make sense?

What constraints am I missing?

Working backwards from bandwidth:

QDR InfiniBand: 40 Gb/s signaling rate

Roughly 3400 MB/s usable, unidirectional.

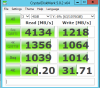
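As a sanity check on the 3400 MB/s figure, here is a minimal sketch of where the QDR ceiling comes from. It assumes a standard 4x link (four lanes at 10 Gb/s signaling each) and 8b/10b line encoding. The 3400 MB/s value is the measured-ish number above and is not derived here. The function name is just for illustration.

```python
# Rough QDR InfiniBand bandwidth estimate.
# Assumptions: 4x link width, 10 Gb/s signaling per lane, 8b/10b encoding.

LANES = 4
SIGNAL_GBPS_PER_LANE = 10.0  # QDR signaling rate per lane


def qdr_data_rate_mb_s(lanes: int = LANES) -> float:
    """Theoretical unidirectional payload rate in MB/s (decimal, 10^6 bytes)."""
    signal_gbps = lanes * SIGNAL_GBPS_PER_LANE  # 40 Gb/s on the wire for 4x
    data_gbps = signal_gbps * 8 / 10            # 8b/10b: 8 data bits per 10 line bits
    return data_gbps * 1e9 / 8 / 1e6            # bits -> bytes -> MB


if __name__ == "__main__":
    theoretical = qdr_data_rate_mb_s()
    practical = 3400.0  # figure from the post
    print(f"theoretical: {theoretical:.0f} MB/s")  # theoretical: 4000 MB/s
    print(f"practical / theoretical: {practical / theoretical:.0%}")  # 85%
```

So 3400 MB/s is about 85% of the 4000 MB/s the encoding allows; the remainder is plausibly protocol and host overhead.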

Consumer-grade SSD (Samsung 850 EVO), sequential read/write:

500 MB/s

4K random read/write:

90K IOPS

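I never know whether a K is 1024 or 1000. In my world 4K is 4096 bytes and 90K IOPS is 90,000 I/Os per second, so 4096 × 90,000 = 3.6864 × 10^8 bytes/s. Divided by 10^6 that is about 369 MB/s (about 352 MiB/s if you divide by 2^20), so roughly 350 MB/s give or take.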
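The same conversion written out, so both readings of "K" are visible side by side. This is a minimal sketch assuming every I/O is exactly one 4096-byte block; `iops_to_throughput` is a hypothetical helper name.

```python
# Convert an IOPS rating at a fixed block size into throughput.
# Assumption: every I/O is exactly block_bytes long ("4K" = 4096 bytes).


def iops_to_throughput(iops: int, block_bytes: int = 4096) -> tuple[float, float]:
    """Return (MB/s in decimal units, MiB/s in binary units)."""
    bytes_per_s = iops * block_bytes
    return bytes_per_s / 10**6, bytes_per_s / 2**20


if __name__ == "__main__":
    mb_s, mib_s = iops_to_throughput(90_000)
    print(f"{mb_s:.2f} MB/s")    # 368.64 MB/s
    print(f"{mib_s:.2f} MiB/s")  # 351.56 MiB/s
```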

If a ZFS pool of striped mirrors were perfectly efficient and I were targeting transactions of 4K or larger, I would saturate a QDR connection with a pool about 7 or 8 SSDs wide.
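A minimal sketch of the width estimate, under the same idealized assumption as above (no ZFS overhead, throughput scales linearly with the number of vdevs). It additionally assumes 2-way mirrors where a read can be served by either disk but a write must land on both, so a mirror vdev reads at up to twice one drive's rate and writes at one drive's rate. The function name and this mirror model are illustrative assumptions, not a ZFS formula.

```python
import math

LINK_MB_S = 3400.0  # usable QDR figure from the post


def mirror_vdevs_to_saturate(link_mb_s: float, drive_mb_s: float,
                             mirror_width: int = 2) -> dict[str, int]:
    """Idealized count of mirror vdevs (and disks) needed to fill the link.

    Assumes linear scaling across vdevs and no ZFS overhead. Reads are
    spread over every disk in a mirror; writes must hit every disk, so a
    mirror vdev writes no faster than a single drive.
    """
    read_vdevs = math.ceil(link_mb_s / (drive_mb_s * mirror_width))
    write_vdevs = math.ceil(link_mb_s / drive_mb_s)
    return {
        "read_vdevs": read_vdevs,
        "read_disks": read_vdevs * mirror_width,
        "write_vdevs": write_vdevs,
        "write_disks": write_vdevs * mirror_width,
    }


if __name__ == "__main__":
    # Per-drive rates: 500 MB/s sequential, ~368.64 MB/s at 90K x 4K IOPS.
    for label, per_drive in [("sequential", 500.0), ("4K random", 368.64)]:
        print(label, mirror_vdevs_to_saturate(LINK_MB_S, per_drive))
```

With these inputs, sequential reads fill the link at 4 mirror vdevs (8 SSDs) and sequential writes at 7 vdevs (14 SSDs); at the 4K figure the counts rise to 5 and 10 vdevs. Whether "7 or 8 wide" holds therefore depends on whether you count disks or vdevs, and on the read/write mix.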

So

If I am constrained to QDR InfiniBand (NFS over RDMA)

and I accept 4K as a normal transaction size,

then creating a single pool of striped mirrored SSD vdevs much wider than 8 would be wasteful.

Thanks

Robert
