Hey all! I currently have 8 x Toshiba PX05SRB192 SSDs installed in a ProLiant DL360 Gen10, connected to an HPE Smart Array P408i-a with 2GB cache. Current RAID array config:

- RAID 10
- Stripe size: 1024 KB
- Block size: 512 bytes
- Write cache: enabled
- HPE Smart Path: enabled

I'll be using this server as an ESXi host for a variety of VMs, so the workload will be mixed. I've been experimenting with different stripe sizes and caching settings, but figured I would ask here too for recommendations on getting optimal, balanced performance. I haven't tried disabling Smart Path yet, but will do that next and retest.
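For reference, the basic RAID 10 arithmetic for this array can be sketched in Python. This is a minimal sketch, not something HPE's tooling reports: it assumes the 1024kb stripe value is the per-drive strip size (controllers differ in whether they show the per-drive strip or the full stripe) and treats each PX05SRB192 as a nominal 1.92 TB drive. The helper `raid10_geometry` is hypothetical.

```python
# Sketch of RAID 10 geometry for an 8-drive array.
# Assumptions (not confirmed by the controller): the 1024 KB stripe setting
# is the per-drive strip size, and each drive is a nominal 1.92 TB (decimal).

DRIVES = 8
DRIVE_TB = 1.92       # assumed nominal capacity per drive, decimal TB
STRIP_KIB = 1024      # per-drive strip size (assumed interpretation)
SECTOR_BYTES = 512    # logical block size from the config


def raid10_geometry(drives: int, drive_tb: float, strip_kib: int) -> dict:
    """Hypothetical helper: mirror pairs, usable capacity, full-stripe width."""
    if drives < 4 or drives % 2:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    mirror_pairs = drives // 2  # each pair stores one copy of its data
    return {
        "mirror_pairs": mirror_pairs,
        # only one drive per mirror pair contributes usable capacity
        "usable_tb": round(mirror_pairs * drive_tb, 2),
        # data is striped across the mirror pairs, one strip per pair
        "full_stripe_kib": mirror_pairs * strip_kib,
    }


geo = raid10_geometry(DRIVES, DRIVE_TB, STRIP_KIB)
sectors_per_strip = STRIP_KIB * 1024 // SECTOR_BYTES
print(geo)
print("sectors per strip:", sectors_per_strip)
```

With 8 drives this gives 4 mirror pairs, so one full stripe covers 4 x 1024 KB = 4096 KB of data. If the 1024kb figure is actually the full stripe, the per-drive strip would be 256 KB instead.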

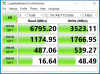

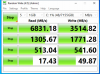

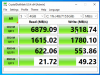

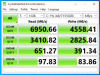

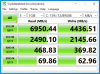

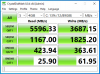

Anyway, the screenshots above show the current performance metrics with this config. To be honest, I feel like it's not performing nearly as well as it should.
