Hi,

I am setting up my new TrueNAS server and have installed a ConnectX-5 100 Gbps card.

The machine has 128 GB RAM, a Xeon E5-1650 v3, and a Supermicro X10SRi-F board.

The network is set to 9k jumbo frames.

It is working as it should, but when I run iperf from my ESXi box to the TrueNAS server I can "only" get around 77-82 Gbps.

Is this as expected, or is there any tuning I can do in TrueNAS to make it run faster?
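As a rough reference point for what "expected" could mean here, the sketch below estimates the TCP payload ceiling of a 100 Gbps Ethernet link at a given MTU. It assumes IPv4, TCP with the 12-byte timestamp option, no VLAN tag, and standard Ethernet framing overhead; the function name is only for illustration.

```python
def tcp_goodput_ceiling_gbps(line_rate_gbps: float, mtu: int, tcp_options: int = 12) -> float:
    """Upper bound on TCP payload throughput for a given line rate and MTU."""
    ip_header = 20   # IPv4 header without options (assumption: no IPv6)
    tcp_header = 20  # TCP header without options
    # Bytes on the wire per frame beyond the MTU:
    # preamble + SFD (8), Ethernet header (14), FCS (4), inter-frame gap (12).
    wire_overhead = 8 + 14 + 4 + 12
    payload = mtu - ip_header - tcp_header - tcp_options
    return line_rate_gbps * payload / (mtu + wire_overhead)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ~{tcp_goodput_ceiling_gbps(100, mtu):.1f} Gbps")
```

Under those assumptions, framing and header overhead at 9k MTU costs only about 1% of the line rate, so a result in the 77-82 Gbps range is probably limited by something other than protocol overhead.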

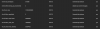

I have turned on autotune and rebooted the server, which gave me the following settings:

I know there are tuning guides for 10 Gbps, but I wonder if my machine is simply too slow for 100 Gbps. My CPU usage goes to around 75% when running the iperf test.

Thanks in advance for any responses.

I have since tuned a little following the FreeBSD Network Performance Tuning guide at Calomel.org. My CPU usage dropped to around 50% and I gained about 5 Gbps on average, so not a big increase in throughput, but better CPU usage.
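To put that change in perspective, here is a small sketch comparing throughput per percent of CPU before and after the tuning. The "before" figure of 77.2 Gbps is taken from my iperf run, and the "after" figure simply adds the roughly 5 Gbps average gain; both are approximations, and the helper name is made up for illustration.

```python
def gbps_per_cpu_percent(throughput_gbps: float, cpu_percent: float) -> float:
    """Throughput delivered per percentage point of CPU usage."""
    return throughput_gbps / cpu_percent


# Assumed figures: 77.2 Gbps at ~75% CPU before, ~5 Gbps more at ~50% CPU after.
before = gbps_per_cpu_percent(77.2, 75.0)
after = gbps_per_cpu_percent(77.2 + 5.0, 50.0)

print(f"before tuning: {before:.2f} Gbps per CPU %")
print(f"after tuning:  {after:.2f} Gbps per CPU %")
print(f"efficiency gain: {after / before:.2f}x")
```

This only shows that the tuned settings move more data per unit of CPU. It does not say by itself whether more cores would close the remaining gap.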

I wonder if I would gain the little extra I "need" to get to 100 Gbps if I had an 8-core CPU, such as the E5-1680 v4?

Code:

```
Client connecting to 10.100.10.202, TCP port 5001
TCP window size: 516 KByte (WARNING: requested 512 KByte)
------------------------------------------------------------
[ 8] local 10.100.10.184 port 13844 connected with 10.100.10.202 port 5001
[ 4] local 10.100.10.184 port 59671 connected with 10.100.10.202 port 5001
[ 5] local 10.100.10.184 port 50657 connected with 10.100.10.202 port 5001
[ 3] local 10.100.10.184 port 16646 connected with 10.100.10.202 port 5001
[ 7] local 10.100.10.184 port 10172 connected with 10.100.10.202 port 5001
[ 6] local 10.100.10.184 port 24179 connected with 10.100.10.202 port 5001
[ ID] Interval       Transfer     Bandwidth
[ 8]  0.0-10.0 sec  15.7 GBytes  13.5 Gbits/sec
[ 4]  0.0-10.0 sec  12.7 GBytes  10.9 Gbits/sec
[ 5]  0.0-10.0 sec  15.7 GBytes  13.5 Gbits/sec
[ 3]  0.0-10.0 sec  15.7 GBytes  13.5 Gbits/sec
[ 7]  0.0-10.0 sec  14.1 GBytes  12.1 Gbits/sec
[ 6]  0.0-10.0 sec  15.9 GBytes  13.7 Gbits/sec
[SUM]  0.0-10.0 sec  89.8 GBytes  77.2 Gbits/sec
```
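To sanity-check the numbers above, this small sketch re-derives the [SUM] line from the per-stream results. It assumes iperf reports GBytes in binary units (2^30 bytes) and Gbits/sec in decimal units (10^9 bits), which is consistent with the figures in the output; the values are copied by hand from the run above.

```python
GIB = 2**30  # assumption: iperf "GBytes" are binary gigabytes

# Stream id -> GBytes transferred in the 10-second run, copied from the output.
streams = {8: 15.7, 4: 12.7, 5: 15.7, 3: 15.7, 7: 14.1, 6: 15.9}
duration_s = 10.0


def gbits_per_sec(gbytes: float, seconds: float) -> float:
    """Convert binary gigabytes transferred into decimal gigabits per second."""
    return gbytes * GIB * 8 / seconds / 1e9


total_gbytes = sum(streams.values())
total_gbps = gbits_per_sec(total_gbytes, duration_s)

for stream_id, gbytes in streams.items():
    rate = gbits_per_sec(gbytes, duration_s)
    print(f"[{stream_id:>3}] {gbytes:5.1f} GBytes  {rate:5.1f} Gbits/sec")
print(f"[SUM] {total_gbytes:5.1f} GBytes  {total_gbps:5.1f} Gbits/sec")
```

The recomputed total lands within rounding of the reported 77.2 Gbits/sec, and the per-stream rates (10.9 to 13.7 Gbits/sec) show the load is spread fairly evenly across the six streams.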
