hmm..

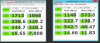

Your values are far too low. Tuning can help, but it cannot make up for values this poor.

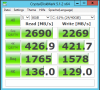

I have just run a CrystalDiskMark benchmark on a machine I have access to:

Similar RAM, ESXi 6.5u1, OmniOS 151024 with vmxnet3 and sync=standard, Windows Server 2012 (test c, one 3-way mirror from 3 Intel SSDs DC-S3610-800

The DC S3610 is the cheaper brother of the DC S3700, nearly as fast with less overprovisioning.

Write: 246 MB/s

Read: 350 MB/s
