OK, I know what's going on in your head...

Yeah, this post wanders all over the place. Hope you can follow! Hah, sorry, my ADHD brain is on fire!

"Oh crap, another n00b... oh great." You're partially right, but

I've been doing computers long enough to tell you that my favorite OS was... DR-DOS 7.0.

I used an o-scope and schematics to repair IBM PS/2 and ValuePoint systems.

I fixed Token Ring cards and memory expansion cards.

I even learned early, back in the pre-internet days (yeah, some people don't even know what that was)...

On the 8088 and 8086 I learned which crystal drove the CPU, and I've been overclocking ever since: take the 4 MHz crystal out and put an 8 MHz one in.

Hell, my favorite CPU was the Cyrix 6x86! LOL.

I was a benchmark whore... loved me some CheckIt. OK, I found this while typing this post; had to look. LOL

https://winworldpc.com/product/checkit-pro/pro-1x

I was so fluent in hardware, but I lost sight of it when it got too hard to keep up, and that was around the Q6600 era.

Just so many CPU types, CPU sockets, memory speeds... too much for me to keep up with.

I've been working as an Altiris administrator since 2006 for a few companies now, and yeah, I'm out of the loop on what the cool jobs are today. I'm seeing virtualization, web services, storage, and the like.

I do my part to keep up with my old, dwindling hardware, but effectively I'm running:

- Windows 10 and Server 2016
- ESXi: a full lab running Server 2016, AD, DNS, DHCP, etc., with Altiris and all of its solutions
- and now I'm venturing into FreeNAS

My latest posting craze has been about 10Gb on the cheap. I'm $55 in on old Mellanox CX4-style ConnectX-1 cards that I have working on Windows 10, ESXi, and FreeNAS 11 (I put in a ticket because they aren't working on 12).

So, with all that out of the way, let's get to the learning I'm after!

At my current job, they use whatever comes in the Lenovo systems, and those are just cruddy WD Blue drives. It is what it is, but we deal with scientists and masses of data. I told my coworkers that we need to look at SSDs and was told no, too expensive. So I took it upon myself to use the Anvil storage benchmark tool and started benchmarking ALL of our drive types: normal spinning SATA drives, then the cruddy Intel 180GB SSDs in our laptops that were dying so much, and on to other things. We started getting a few SSDs in for special requests, and I benchmarked those too.

Because of that, we now put SSDs in everything, and even the new Lenovos are getting NVMe drives! WOOOT!

So once again I'm starting back up on benchmarking drives... BUT benchmarks are just that; they aren't real-world to me.

With my 10Gb network I have learned a lot, and EniGmA1987 was there to give me some reading and push me forward.

For example, how to use RAM drives to test your true network throughput, etc.
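A rough way to repeat the RAM-drive test yourself is to time a single file copy and divide bytes by seconds. This is a minimal Python sketch, not the tool used in this post; the paths in the usage line are hypothetical (e.g. a local RAM disk as the source and a share on the other machine's RAM disk as the destination), and on a network share `fsync` may not guarantee the remote side has flushed its cache.

```python
import os
import shutil
import sys
import time


def timed_copy(src: str, dst: str) -> float:
    """Copy src to dst and return observed throughput in MB/s (1 MB = 10**6 bytes)."""
    size = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    # Ask the OS to flush the destination so the timing is not just the write cache.
    with open(dst, "rb+") as f:
        os.fsync(f.fileno())
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against divide-by-zero
    return size / elapsed / 1e6


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python timed_copy.py R:\test.bin \\server\ramdisk\test.bin
    print(f"{timed_copy(sys.argv[1], sys.argv[2]):.1f} MB/s")
```

Using one large test file (several GB) gives a steadier number than many small files, since per-file overhead drags small-file copies down.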

Now I'm there: all tested and getting started.

I'm not getting the numbers that would make 10Gb worth it to me... unless I'm missing the point and it isn't about file copies but more about, say, running multiple VMs over a 10Gb link from an iSCSI FreeNAS datastore to the ESXi host?

So what does it really take to get good storage speeds?

I took my Plex machine and did tons of different copies: to RAM disks, and from a local RAM disk across 10Gb to various SSDs, and got freakishly inconsistent, low numbers; nothing high. My server runs Server 2016 with the Essentials role, StableBit DrivePool, and the SSD Optimizer for caching (just installed that).

During my 10Gb file copies, going from the Plex machine through the 10Gb NIC to the remote machine, I got:

- 120GB Samsung SSD: 30 MB/s transfer rate
- 500GB Crucial SSD: 200 MB/s transfer rate

But RAM disk to RAM disk, I get 700+ MB/s.
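To put those numbers in context, it helps to convert the link speeds to MB/s. A small sketch of that arithmetic, assuming 1 MB = 10**6 bytes and ignoring protocol overhead (so real SMB copies land somewhat below these ceilings):

```python
def line_rate_mb_s(gbit_per_s: float) -> float:
    """Raw link speed in MB/s: bits to bytes (divide by 8), 1 MB = 10**6 bytes."""
    return gbit_per_s * 1e9 / 8 / 1e6


# Transfer rates reported in this post, in MB/s.
measured = {
    "120GB Samsung SSD": 30,
    "500GB Crucial SSD": 200,
    "RAM disk to RAM disk": 700,
}

ten_gig = line_rate_mb_s(10)  # 1250.0 MB/s
one_gig = line_rate_mb_s(1)   # 125.0 MB/s

for name, mb_s in measured.items():
    print(f"{name}: {mb_s} MB/s = {mb_s / ten_gig:.0%} of the 10Gb line rate")
```

By this arithmetic, the 200 MB/s copy is already above the 125 MB/s a 1Gb link can carry, and the RAM disk run reaches a bit over half (56%) of the 10Gb ceiling.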

How can I get closer to those numbers without spending a fortune?

I'm at my wits' end trying various things and need to be schooled on this, as I'm just missing something.

Examples:

RAM disk to RAM disk via the 1Gb network

RAM disk to RAM disk via the 10Gb network

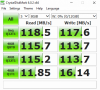

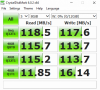

CrystalDiskMark run against just that RAM drive

So I've got better numbers, but I just don't understand how to get them on real file copies.

I do photo and video editing, and it takes a while to copy files around, which is why this would be awesome-sauce!

Anyway, I've got company, so I have to leave it at that. Hopefully someone can help unclutter my mind...
