Hello,

I purchased four Samsung 983 DCT M.2 NVMe drives, and their performance leaves much to be desired. Samsung rates them at 3000 MB/s read and 1400 MB/s write, but I don't reach those speeds.

I'm mostly concerned with read speed, as these drives will hold the VHDs for my hypervisor.

Linux FIO gives me 1400 MB/s, as do the Ubuntu disk benchmark utility and the Unraid Disk Speed docker.

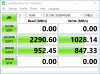

CrystalDiskMark in Windows gives me a better speed of 2200 MB/s.

I downloaded the DC Toolkit for Windows and read through some of the other comments on these forums, but I'm having trouble getting the toolkit to do anything with the drives.

I saw a suggestion on these forums to do a factory erase, and I can't even do that.

When I run "--disk 0 --erase", the toolkit recognizes the erase command and responds that it can't wipe the OS disk, which is expected, so I know the command syntax is correct. But when I run it against "0:c", it just loops back to the usage text.

If erasing doesn't help, I'd also like to upgrade the firmware, but I'm not sure how that works: the instructions are unclear about how to download the firmware for the flash.

P.S. I also read that the DRAM cache might be disabled. I'm not sure how to turn it back on.
