This was prompted by some discussion in the comments section of the Dell 760 server review, namely that there's not enough data available about VROC in a production setting (i.e., not home use).

I have some experience with VROC in a production setting. Windows Server 2019. Only 1 box (we're a small shop). Purchase was late 2021.

See here for the Intel landing page:

Intel® Virtual RAID on CPU (Intel® VROC) Enterprise RAID Solution

Note the significant limitations, including:

Only specific drive models supported, RAID 10 limited to 4 drives.

My goal was a RAID 5 setup, since that level of expected performance (about equal to one NVMe drive) would be adequate. RAID 5 is advertised as supporting up to 24 drives. Cool, I thought, I can start with 4 drives and expand as needed. That's what I did, ultimately expanding to 5 and then 6 drives after months of problem-free operation on 4 drives.

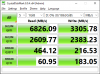

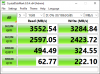

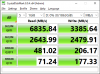

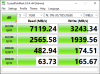

The performance was about as expected and was sufficient for my application.

The unexpected part is that for RAID 5 arrays larger than 4 drives, VROC does not perform at least some of the parity calculations on the fly. This is undocumented (as far as I could find). To run these calculations, you need to execute a function in the VROC driver GUI called “verify and repair”. The larger the array, the more “errors” each run of this function finds and fixes. We're talking hundreds to thousands on 5- and 6-drive arrays, and each run takes ~12 hours to complete. This is after writing only a few TB (1-2) to the array.

How to Verify Data and Repair Inconsistencies on Existing RAID Volume...

RAID Volume Data Verify and Repair
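To make the “errors” concrete, here is a minimal Python sketch of what a RAID 5 verify-and-repair pass checks. It is not Intel's code and makes no claim about VROC internals. The model makes a few simplifying assumptions:

- Parity is the XOR of the data blocks in each stripe.
- Parity rotation across drives is ignored.
- The `defer_parity` flag, like every function name here, is hypothetical. It stands in for the deferred parity calculations Intel described.

A stripe whose data was rewritten without a parity update shows up as a mismatch, which the verify pass counts and repairs.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, column by column (RAID 5 parity)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Build one stripe: its data blocks plus a parity block computed from them."""
    return {"data": list(data_blocks), "parity": xor_blocks(data_blocks)}


def write_block(stripe, index, new_data, defer_parity=False):
    """Overwrite one data block. With defer_parity=True (a hypothetical stand-in
    for deferred parity work) the parity block is left stale."""
    stripe["data"][index] = new_data
    if not defer_parity:
        stripe["parity"] = xor_blocks(stripe["data"])


def verify_and_repair(stripes):
    """Recompute parity for every stripe, overwrite any stale parity block,
    and return how many inconsistent stripes were found."""
    mismatches = 0
    for stripe in stripes:
        expected = xor_blocks(stripe["data"])
        if stripe["parity"] != expected:
            mismatches += 1
            stripe["parity"] = expected
    return mismatches


# A 5-drive array: 4 data blocks + 1 parity block per stripe.
stripes = [make_stripe([bytes([i, j]) for j in range(4)]) for i in range(10)]
for n, stripe in enumerate(stripes):
    # Defer the parity update on every other stripe.
    write_block(stripe, 0, b"\xff\xff", defer_parity=(n % 2 == 0))

print(verify_and_repair(stripes))  # → 5
print(verify_and_repair(stripes))  # → 0
```

In this toy model, the second pass finds nothing because the first pass already rewrote the stale parity. The counts say nothing about how often VROC actually defers parity updates.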

I was disturbed by this and assumed it was indicative of a malfunction. I backed up the data and rebuilt the array. I updated firmware, BIOS and drivers. I added and removed drives to try to isolate the problem. The problem persisted: parity errors (but no bad blocks) whenever I tested with 5 or more drives. Since this is my only such hardware and it was a production backup server, this was unpleasantly stressful.

Finally, I opened a ticket and worked through a support process with Supermicro and Intel. Intel ultimately replied that it was “expected behavior”. These calculations were deferred for performance reasons. This was news to me and apparently to Supermicro.

This was unacceptable for me, so I ended up splitting the array into two separate 4-drive RAID 5 arrays, at the cost of some additional complexity and an additional drive lost to parity. Since an ReFS volume can't be shrunk, this was a pain. All told, I probably wasted 60 hours on this, given the quantity of data already on the array and the time it took to move it around.
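Here is a rough calculation of the capacity side of that trade-off. It assumes all drives are the same size $s$ and ignores formatting overhead. A RAID 5 array of $n$ drives gives $(n-1)s$ of usable space, so the same eight drives yield:

$$
C_{\text{RAID 5}}(n) = (n-1)\,s, \qquad C_{1\times 8} = 7s, \qquad C_{2\times 4} = 2 \cdot 3s = 6s
$$

Splitting into two arrays gives up one drive's worth of capacity to the second array's parity.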

This problem was hinted at in some old Intel VROC forum posts. See the last post in this thread: VROC - RAID5 - Parity Errors

If I had a do-over, I would not have gone with VROC. Since we do not use *nix in our shop, I probably would have backed off to SAS or (edit: SATA) SSD hardware RAID.

Additionally, I strongly suspect VROC is not long for this world, given what we saw here: https://www.servethehome.com/intel-...ng-vroc-around-sapphire-rapids-server-launch/ If so, it will likely no longer be developed, and we can expect support to suffer.

VROC is now up to version 8.0, but good luck finding much about it on Intel’s website. They seem to have pushed primary support to the OEMs?

This appears to be the most useful landing page I have found: Resources for Intel® Virtual RAID on CPU (Intel® VROC)

Notice how no new drives were added in the move from 7.8 to 8.0 (and really, drives added since 7.5 are paltry):

https://www.intel.com/content/dam/s...ware/Intel_VROC_VMD_Supported_Configs_8_0.pdf

Apologies if this comes off as rambling, but it's a long story. I am not an expert, but happy to answer any questions I can.
