seqwrite: (g=0): rw=write, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=psync, iodepth=128

...

fio-3.5

Starting 8 processes

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: Laying out IO file (1 file / 4096MiB)

seqwrite: (groupid=0, jobs=8): err= 0: pid=73509: Fri Jan 11 10:47:01 2019

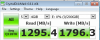

write: IOPS=27.9k, BW=3485MiB/s (3655MB/s)(32.0GiB/9402msec)

clat (usec): min=20, max=84356, avg=268.07, stdev=1128.03

lat (usec): min=21, max=84357, avg=272.10, stdev=1130.45

clat percentiles (usec):

| 1.00th=[ 27], 5.00th=[ 51], 10.00th=[ 52], 20.00th=[ 57],

| 30.00th=[ 65], 40.00th=[ 76], 50.00th=[ 82], 60.00th=[ 98],

| 70.00th=[ 151], 80.00th=[ 314], 90.00th=[ 478], 95.00th=[ 816],

| 99.00th=[ 2573], 99.50th=[ 4178], 99.90th=[13829], 99.95th=[22676],

| 99.99th=[47973]

bw ( MiB/s): min= 1, max= 6364, per=41.78%, avg=1456.15, stdev=929.15, samples=262144

iops : min= 1582, max= 6784, avg=3434.78, stdev=1291.01, samples=141

lat (usec) : 50=3.29%, 100=57.53%, 250=14.70%, 500=15.51%, 750=3.51%

lat (usec) : 1000=1.86%

lat (msec) : 2=2.17%, 4=0.90%, 10=0.36%, 20=0.11%, 50=0.05%

lat (msec) : 100=0.01%

cpu : usr=2.17%, sys=28.21%, ctx=245771, majf=0, minf=1736

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,262144,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

WRITE: bw=3485MiB/s (3655MB/s), 3485MiB/s-3485MiB/s (3655MB/s-3655MB/s), io=32.0GiB (34.4GB), run=9402-9402msec
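
The summary figures above can be cross-checked against each other. The following is a minimal sketch in Python that uses only values printed in the log (the issued write count, the 128KiB block size, and the 9402 msec runtime). The variable names are illustrative and are not part of fio itself.

```python
# Cross-check the fio summary figures using only values printed in the log.
KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3

issued_writes = 262144      # from "issued rwts: total=0,262144,0,0"
block_size = 128 * KIB      # from "bs=... (W) 128KiB-128KiB"
runtime_s = 9402 / 1000     # from "run=9402-9402msec"

# Total data written is the number of writes times the block size.
total_bytes = issued_writes * block_size

# Bandwidth in binary (MiB/s) and decimal (MB/s) units, as fio reports both.
bw_mib_s = total_bytes / MIB / runtime_s
bw_mb_s = total_bytes / 1e6 / runtime_s

# IOPS is the number of completed writes divided by the runtime.
iops = issued_writes / runtime_s

print(f"io   = {total_bytes / GIB:.1f} GiB")
print(f"bw   = {bw_mib_s:.0f} MiB/s ({bw_mb_s:.0f} MB/s)")
print(f"iops = {iops / 1000:.1f}k")
```

Under these inputs the computed values agree with the reported `io=32.0GiB`, `bw=3485MiB/s (3655MB/s)`, and `IOPS=27.9k`.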