Home Setup - Design changes

- Thread starter marcoi

Well, some of your theoretical speed is getting lost...

With "iperf3 server set up on FreeNAS / client on Ubuntu 18.04", your bandwidth is 12.8 Gbit/s, which is going to be ~1300 MB/s (of course, the bandwidth is not a static value and may change every few seconds for unknown reasons, especially on ESXi).
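
As a quick sanity check on that estimate: iperf3 reports throughput in bits per second, so the raw ceiling in bytes is the bit rate divided by 8. The minimal Python sketch below (the helper name `gbit_to_mbyte` is hypothetical, and decimal units are assumed, 1 MB = 10^6 bytes) computes that ceiling for 12.8 Gbit/s and shows what fraction of it the ~1300 MB/s figure represents. The gap is presumably headroom for protocol and virtualization overhead.

```python
def gbit_to_mbyte(gbit_per_s: float) -> float:
    """Convert a link rate in Gbit/s to MB/s (decimal units, 1 MB = 10**6 bytes)."""
    return gbit_per_s * 1000 / 8

raw = gbit_to_mbyte(12.8)  # theoretical ceiling for the iperf3 result
practical = 1300           # rough real-world figure quoted above, in MB/s

print(f"raw ceiling: {raw:.0f} MB/s")
print(f"quoted estimate is {practical / raw:.0%} of the raw ceiling")
```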

And I just realised that your read performance was significantly lower than write, which is exactly a scenario I have seen with CDM/FreeNAS/SSD pools before. I never found a solution, but @gea had provided a possible explanation at some point. I think I was performing tests with S3700s back then; it might be this thread: https://forums.servethehome.com/index.php?posts/164693/ . Unfortunately I have no time to actually look it up, sorry.

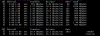

OK, so I've been messing with two Ubuntu VMs using iperf3, both on the same host. I enabled TSO, LRO and jumbo frames. I'm testing with VMXNET3 NICs on the storage vSwitch that FreeNAS is set up to use.

Info from: VMware Knowledge Base
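
Jumbo frames, TSO and LRO all work mainly by reducing how many packets the virtual NICs and guest network stacks have to handle per second. Below is a rough Python sketch of the frame rate needed to carry 12.8 Gbit/s of TCP payload at a standard 1500-byte MTU versus a 9000-byte jumbo MTU. It assumes 40 bytes of IPv4 + TCP headers per packet with no TCP options (Linux usually adds timestamps, so real payload per frame is a little smaller), and the function name is hypothetical.

```python
# Assumption: 20-byte IPv4 header + 20-byte TCP header, no TCP options.
IP_TCP_HEADERS = 40

def frames_per_second(bits_per_second: float, mtu: int) -> float:
    """Frames per second needed to move the given TCP payload rate at a given MTU."""
    payload_per_frame = mtu - IP_TCP_HEADERS  # TCP payload bytes carried per frame
    return bits_per_second / 8 / payload_per_frame

rate = 12.8e9  # 12.8 Gbit/s of payload

for mtu in (1500, 9000):
    print(f"MTU {mtu}: ~{frames_per_second(rate, mtu):,.0f} frames/s")
```

Under these assumptions the jumbo MTU needs roughly six times fewer frames per second for the same throughput; TSO and LRO push further in the same direction by letting the stack hand off segments larger than one MTU.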

So far, this is the best VM-to-VM test result.
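
To compare runs like these side by side, iperf3 can print machine-readable results with its `-J` flag. The minimal Python sketch below runs a TCP client test and extracts receiver throughput plus the CPU utilization iperf3 reports for client and server, which is handy when checking whether CPU is a bottleneck. Note these are guest-side numbers measured inside each VM, not ESXi host load. The field names (`end.sum_received.bits_per_second`, `end.cpu_utilization_percent.host_total` / `remote_total`) are assumed from recent iperf3 3.x JSON output for TCP tests, the helper names are hypothetical, and the sample report is invented data for illustration, not a result from this thread.

```python
import json
import subprocess

def summarize(report: dict) -> dict:
    """Pull receiver throughput (MB/s) and CPU load (%) out of an iperf3 -J report."""
    end = report["end"]
    bps = end["sum_received"]["bits_per_second"]
    cpu = end.get("cpu_utilization_percent", {})
    return {
        "mbyte_per_s": bps / 8 / 1e6,            # bits/s -> decimal MB/s
        "client_cpu_pct": cpu.get("host_total"),   # VM running the iperf3 client
        "server_cpu_pct": cpu.get("remote_total"), # VM running iperf3 -s
    }

def run_iperf3(server: str, seconds: int = 10, streams: int = 1) -> dict:
    """Run an iperf3 TCP client test against `server` and return the parsed JSON."""
    out = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)

# Illustrative sample only (invented numbers), shaped like iperf3 -J output.
sample = json.loads("""
{"end": {"sum_received": {"bits_per_second": 12.8e9},
         "cpu_utilization_percent": {"host_total": 35.0, "remote_total": 42.0}}}
""")
print(summarize(sample))
```

Against the real setup you would call something like `summarize(run_iperf3("<freenas-ip>", streams=4))`, where the address is a placeholder.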

Have you looked at CPU load at that time?

I looked at it briefly. The host has two E5-2680 v2 CPUs and only had the few test VMs running at the time, so I don't think I'm putting any real load on the CPUs.

I agree. I don't know whether I should create a new vSwitch and move the VMs onto it, or reinstall ESXi.

Well, a new vSwitch is a quick test, but I'd be surprised if a reinstall would help. Not sure we discussed that, but are all BIOS settings/energy-saving options off?

I have the BIOS set to optimized RAM and the Dell Performance Per Watt profile. I'll go back in and re-check.

Both BIOS and ESXi should be on max performance.

Worth a shot. So far, power draw has increased by about 50-60 W since I changed the BIOS to performance.