What kind of power consumption are we talking per 512GB module? My board has 8 slots and I've filled 6 of them with 16GB DIMMs.

Never ever touched ESXi, so I can't speak for it. I'm using them as read cache and VM storage for ZFS under GlusterFS, and for testing as Intel CAS cache under MooseFS and BeeGFS. It's convenient to have an Optane drive just sitting in RAM slots I would not use otherwise, without being forced to use a PCIe adapter with extra cabling or expensive N-backplanes. Could also think of DB storage, VM storage or any other storage. I know someone using them as a temp scratch drive. But be aware that the most performant mode is 'interleaved', which is kind of like RAID 0. Even non-interleaved, performance is superior in 99% of use cases, excluding NVDIMM-N not set up as a simple block device.

Edit: Forgot to mention using them as cheap additional RAM. Where else do you get 512GB of RAM for ~100 EUR? But then I would recommend at least 256GB of RAM already installed.

Intel Optane DC Persistent Memory Module (PMM)

Intel has talked about Optane DC Persistent Memory Modules (PMM) publicly for over a…

For the record, in for 7. Before China does something to Taiwan, which we are all going to regret only a short bit later. Might make the Great Toilet Paper Shortage of 2020 look like a silly joke.

Also have a cheap X11SPL on the way, patched myself a 3.9 BIOS for 255W support already, and got an Adafruit MCP2221A to mod VRMs. Will try an Optane PMEM on this as well.

They will work on X11SPL with 62xx and 82xx CPUs. Had no issues. EDIT: Tested on BIOS 3.8.

Got the one stick from @Bert in the mail today. Thank you, works! But very tight on the Asrock board with Noctua cooler, holding clamp is in the way. Will need to cut a small dent in the CPU cooler to relieve DIMM slot sideways pressure.

Arch Linux has ndctl but lacked ipmctl, so I made a nice AUR package: Index of /files/ipmctl/

Succeeded in updating the firmware with it (after waiting half an hour, like @111alan), so I guess it works. I checked various OEMs' firmware but they are all the same fw_ekvb0_1.2.0.5446_rel.bin. Stick is from mid-2018 but only has 5 hours of total uptime.

Also "congratulations" to Intel for ramming such a neat technology into the ground through mindless product differentiation. Now on the rummage table for 6 cents on the dollar.

Note that these mods consume CPU TDP

Requires up to 121.5W TDP in 2-2-2 configuration

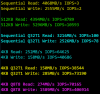

Got remaining 6 from @gb00s today, thank you!!  Looks like all from mid-2018, and never used. As was said, working on EPC621D8A with 8259CL and jumpers set correctly:

# ipmctl show -topology
DimmID | MemoryType                  | Capacity    | PhysicalID | DeviceLocator
================================================================================
0x0001 | Logical Non-Volatile Device | 502.563 GiB | 0x0011     | CPU1_DIMM_A2
0x0101 | Logical Non-Volatile Device | 502.563 GiB | 0x0015     | CPU1_DIMM_D2
N/A    | DDR4                        | 32.000 GiB  | 0x0010     | CPU1_DIMM_A1
N/A    | DDR4                        | 32.000 GiB  | 0x0012     | CPU1_DIMM_B1
N/A    | DDR4                        | 32.000 GiB  | 0x0013     | CPU1_DIMM_C1
N/A    | DDR4                        | 32.000 GiB  | 0x0014     | CPU1_DIMM_D1
N/A    | DDR4                        | 32.000 GiB  | 0x0016     | CPU1_DIMM_E1
N/A    | DDR4                        | 32.000 GiB  | 0x0017     | CPU1_DIMM_F1
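If you want to total up what a topology listing like the one above reports, a small parser is enough. This is only a rough sketch: it assumes the pipe-separated layout shown in this post, and the `summarize` helper and `TOPOLOGY` sample are made up for illustration, not part of ipmctl.

```python
# Sample text copied from the `ipmctl show -topology` output in this post.
TOPOLOGY = """\
DimmID | MemoryType | Capacity | PhysicalID| DeviceLocator
================================================================================
0x0001 | Logical Non-Volatile Device | 502.563 GiB | 0x0011 | CPU1_DIMM_A2
0x0101 | Logical Non-Volatile Device | 502.563 GiB | 0x0015 | CPU1_DIMM_D2
N/A | DDR4 | 32.000 GiB | 0x0010 | CPU1_DIMM_A1
N/A | DDR4 | 32.000 GiB | 0x0012 | CPU1_DIMM_B1
N/A | DDR4 | 32.000 GiB | 0x0013 | CPU1_DIMM_C1
N/A | DDR4 | 32.000 GiB | 0x0014 | CPU1_DIMM_D1
N/A | DDR4 | 32.000 GiB | 0x0016 | CPU1_DIMM_E1
N/A | DDR4 | 32.000 GiB | 0x0017 | CPU1_DIMM_F1
"""


def summarize(text):
    """Return {memory_type: (module_count, total_GiB)} for a topology listing."""
    totals = {}
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        # Skip the header, the separator, and anything that is not a data row.
        if len(fields) != 5 or not fields[2].endswith("GiB"):
            continue
        mem_type = fields[1]
        gib = float(fields[2].split()[0])
        count, total = totals.get(mem_type, (0, 0.0))
        totals[mem_type] = (count + 1, total + gib)
    return totals


if __name__ == "__main__":
    for mem_type, (count, total) in summarize(TOPOLOGY).items():
        print(f"{mem_type}: {count} modules, {total:.3f} GiB")
```

Piping real `ipmctl show -topology` output into `summarize` should work the same way as long as the column layout matches.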

They all came with firmware 1.2.0.5117.

Kind of understand why. They have to use some proprietary SW and HW designs to leverage as much perf as possible. Just like the RAM disk situation, where the main factor limiting performance is the block device emulation driver, not the RAM itself.

But I can never understand why they did not make Apache Pass and Barlow Pass cross-compatible. This is so discouraging to buyers. I still want some Crow Pass sticks, but thinking about the price and the fact that these things may become utterly useless when I switch to Emerald Rapids or Granite Rapids, the TCO (if it can be called that) is way too high.

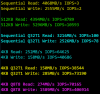

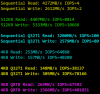

So I tested throughput and IOPS for ext4 and ZFS w/ and w/o LZ4 to get some ballpark figures.

Hardware: Supermicro X11SPL-F (BIOS 3.9), CPU 8259CL, RAM 1x 32 GB Samsung RDIMM 2666, PMEM 1x 512 GB Optane (firmware 01.02.00.5446). Both memory modules on first IMC and first channel.

Linux kernel 6.2.16 booted from USB with options

root=PARTUUID=af8fa598-02 rw console=ttyS0,115200n8 console=tty msr.allow_writes=on rootflags=compress=zstd intel_iommu=on audit=0 delayacct mitigations=off init_on_alloc=0 init_on_free=0

Optane Setup

ipmctl create -goal PersistentMemoryType=AppDirectNotInterleaved

ndctl create-namespace --mode sector

ext4 Setup

mkfs.ext4 -m0 -Elazy_itable_init=0 /dev/pmem0s

ZFS Setup

zpool create -f -o ashift=12 -o cachefile=/etc/zfs/zpool.cache -O dnodesize=legacy -O normalization=formD -O mountpoint=none -O canmount=off -O compression=lz4 -O aclinherit=passthrough -O acltype=posixacl -O xattr=sa -O relatime=on -O dedup=off zpool /dev/pmem0s

zfs create zpool/comp

zfs create zpool/nocomp

zfs set compression=lz4 mountpoint=/mnt/ssd/comp zpool/comp

zfs set compression=off mountpoint=/mnt/ssd/nocomp zpool/nocomp

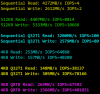

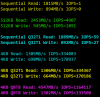

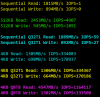

All numbers from a tweaked fio script called cdm_fio.sh approximating "CrystalDiskMark".

ZFS no compression:

ZFS with LZ4 compression:

ext4:
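For anyone wanting to run something similar: the sketch below builds fio command lines for a CrystalDiskMark-like test matrix. It is not the cdm_fio.sh used for the numbers here. The profile list, the `fio_commands` helper, the 1G file size and the 5 second runtime are all assumptions for illustration. The fio options themselves (`--rw`, `--bs`, `--iodepth`, `--numjobs`, `--ioengine`, `--direct`, `--directory`, `--size`, `--runtime`, `--time_based`) are standard ones.

```python
import shlex

# Assumed profiles, loosely modelled on CrystalDiskMark's default tests:
# (label, access pattern, block size, queue depth). The real script may differ.
PROFILES = [
    ("SEQ1M_Q8T1", "seq", "1M", 8),
    ("SEQ1M_Q1T1", "seq", "1M", 1),
    ("RND4K_Q32T1", "rand", "4k", 32),
    ("RND4K_Q1T1", "rand", "4k", 1),
]


def fio_commands(directory, size="1G", runtime_s=5):
    """Return one fio command line per profile and direction (read, write)."""
    commands = []
    for label, pattern, block_size, depth in PROFILES:
        for direction in ("read", "write"):
            # fio uses read/write for sequential and randread/randwrite for random I/O.
            rw = direction if pattern == "seq" else "rand" + direction
            args = [
                "fio",
                f"--name={label}_{direction}",
                f"--directory={directory}",
                f"--size={size}",
                f"--rw={rw}",
                f"--bs={block_size}",
                f"--iodepth={depth}",
                "--numjobs=1",
                "--ioengine=libaio",
                "--direct=1",  # assumption: drop this if the filesystem rejects O_DIRECT
                "--time_based",
                f"--runtime={runtime_s}",
            ]
            commands.append(shlex.join(args))
    return commands


if __name__ == "__main__":
    for command in fio_commands("/mnt/ssd/nocomp"):
        print(command)
```

Point `directory` at whichever mount you want to compare (for example the compressed and uncompressed ZFS datasets, or the ext4 mount) and run the printed commands one at a time.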

Do you know how many PMEM modules the ASRock EPC621D8A with an 8259CL supports? I can't find any specification.

The board has 8 DIMM slots, so I'm wondering if it supports 4x 512GB PMEM paired with 4 RDIMMs, or more?

Thanks

No clue on that specific board. But I started reading up on using these in my R740xd and it seems like there are tons of unsupported configurations. Obviously you need a 2nd gen Scalable. Also, if you only use 1x CPU you can only use 128GB DCPMMs for some reason. There were other restrictions as well, but those were enough to make me reevaluate using these.

I don't know. @RolloZ170 will.

Also try ASRock Rack support, if only to find out whether they care enough to answer. That always influences my future buying decisions.

Lenovo has a good PDF on the crazy population rules: Intel Optane Persistent Memory 100 Series Product Guide

Check the bottom of page 4, "Implementation requirements". Looks like two 32 GB x4 RDIMM 2666 on every side (2 per IMC) and another 2 PMEM per side might work. That keeps you at the 1:16 ratio limit in Memory or Mixed mode. For App Direct mode I don't know if you could stuff 2 RDIMMs and 6 PMEMs on the board. X11SPL-F seems to have gotten a revision 1.02 to improve stability in that regard.
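The 1:16 arithmetic is easy to check for any mix of modules. A minimal sketch, using nominal capacities and treating 1:16 as the only constraint (the helper names are made up here, and the guide lists further population rules this ignores):

```python
def pmem_per_dram(dram_count, dram_gb, pmem_count, pmem_gb):
    """Return how many GB of PMEM there are per GB of DRAM."""
    return (pmem_count * pmem_gb) / (dram_count * dram_gb)


def within_ratio_limit(dram_count, dram_gb, pmem_count, pmem_gb, max_ratio=16.0):
    """True if the DRAM:PMEM ratio does not exceed 1:max_ratio."""
    return pmem_per_dram(dram_count, dram_gb, pmem_count, pmem_gb) <= max_ratio


if __name__ == "__main__":
    # Per side: two 32 GB RDIMMs plus two 512 GB PMEMs sits exactly at 1:16.
    print(pmem_per_dram(2, 32, 2, 512))
    # Whole 8-slot board: four 32 GB RDIMMs plus four 512 GB PMEMs, same ratio.
    print(within_ratio_limit(4, 32, 4, 512))
    # Two RDIMMs with six PMEMs would be 1:48, well past the Memory mode limit.
    print(within_ratio_limit(2, 32, 6, 512))
```

With 16 GB RDIMMs instead of 32 GB ones, the same 512 GB modules would land at 1:32, which is why the RDIMM size matters for Memory mode.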

Thanks, I was looking at SuperMicro's guide. It has similar recommendation.

If so, I guess 4x 512GB should work. I ordered 2x Optane 512GB from eBay, listed at $149, and my offer of $130 was accepted. Might get more in the future when the price comes down more. I'll use them mainly in Memory mode in order to spin up more VMs.

A total of six DCPMMs can be supported per processor (one in each memory channel), and a minimum of two DDR4 DIMMs are required per processor (one per memory controller).
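Those two per-processor limits can be turned into a quick sanity check before buying more sticks. This is just a sketch of the two rules quoted above; the function name and messages are invented for illustration, and it does not cover slot placement, ratio limits or board-specific restrictions.

```python
MAX_DCPMM_PER_CPU = 6  # one per memory channel, per the rule quoted above
MIN_DDR4_PER_CPU = 2   # one per memory controller, per the rule quoted above


def population_problems(dcpmm_count, ddr4_count):
    """Return a list of violated rules for one processor (empty if none)."""
    problems = []
    if dcpmm_count > MAX_DCPMM_PER_CPU:
        problems.append(f"{dcpmm_count} DCPMMs exceeds the maximum of {MAX_DCPMM_PER_CPU}")
    if ddr4_count < MIN_DDR4_PER_CPU:
        problems.append(f"{ddr4_count} DDR4 DIMMs is below the minimum of {MIN_DDR4_PER_CPU}")
    return problems


if __name__ == "__main__":
    # The 4x PMEM plus 4x RDIMM plan discussed here passes both of these rules.
    print(population_problems(4, 4))
    print(population_problems(7, 1))
```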

X11DPi-N(T) rev. 2.01a got these improvements too, compared to rev. 2.00.

Depends on the DIMM VRM module capabilities and BIOS support.

ASRock Rack is not very informative on those topics.

Optane support is mentioned in the WC621D8A-2T manual but not in the EPC621D8A one.

I'm a stock market investor and speculator at night, and from that point of view (correct me if I am wrong) those 512GB PMEM 100 modules just had an RRP of 2000 USD per piece. Now it's 120 or 130. I think they won't come down more; these are IMHO EOL and fire-sale prices. Next stop might be Unobtainium City.

With Reddit imploding a little more every day and users jumping onto Lemmy or here or Level1, maybe it'd be a good time to rescue the r/homelab slogan. ;-) Don't forget you probably want an -L suffix CPU for Memory mode.