I have two systems, each with an Intel X540-T2 10GbE NIC. Specs for both are listed below.

Threadripper Machine:

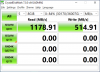

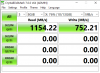

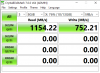

Read/write speeds on the Threadripper machine, measured with CrystalDiskMark against a mapped Windows share of a RAMDisk on the i9-7900X. Both read and write speeds are perfect.

Intel Machine:

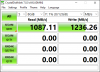

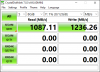

Read/write speeds on the Intel machine, measured with CrystalDiskMark against a mapped Windows share of a RAMDisk on the 1900X. Read speeds are great, but write speeds are not.

Both read and write speeds should be maxing out the link here. Before getting these Intel NICs, I had two Mellanox ConnectX-2 SFP cards and saw the same slow write speed with them. I really don't know what the problem is, but it is definitely not the cards: it has happened with two separate sets of hardware.
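As a rough sense of what "maxing out" means on this link, here is a sketch of the ceiling for TCP payload over a single 10GbE connection. It assumes IPv4, TCP without header options, and either the default 1500-byte MTU or 9000-byte jumbo frames. None of these settings are stated in the post. SMB adds its own overhead on top, so CrystalDiskMark results should land somewhat below these figures.

```python
# Rough upper bound on TCP payload throughput over one 10GbE link.
# Assumptions (not from the post): IPv4, TCP with no header options,
# MTU of 1500 (default) or 9000 (jumbo frames).

LINE_RATE_BPS = 10_000_000_000  # 10GBASE-T line rate, bits per second

# Bytes on the wire per frame that sit outside the IP packet:
# preamble + SFD (8) + Ethernet header (14) + FCS (4) + inter-frame gap (12)
ETH_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20  # IPv4 header + TCP header, no options


def tcp_goodput_bytes_per_s(mtu: int) -> float:
    """Maximum TCP payload bytes per second for a given MTU."""
    payload = mtu - IP_TCP_HEADERS  # TCP payload carried per frame
    on_wire = mtu + ETH_OVERHEAD    # total bytes the frame occupies on the wire
    return LINE_RATE_BPS / 8 * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        rate = tcp_goodput_bytes_per_s(mtu)
        print(f"MTU {mtu}: ~{rate / 1e6:.0f} MB/s")
```

Run as a script, it prints roughly 1187 MB/s for MTU 1500 and 1239 MB/s for MTU 9000 (decimal megabytes).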

There is no switch involved, the two machines are directly connected.

Threadripper machine's Intel X540 NIC:

Intel machine's Intel X540 NIC:

Both cards appear to be running at PCIe x8.
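Here is what "running at x8" gives in numbers. This sketch computes raw PCIe bandwidth per direction. It assumes the X540 links at PCIe 2.x speed (5 GT/s per lane, 8b/10b encoding) and ignores packet-level protocol overhead. It also assumes that only one of the two ports carries the direct connection, so the target is 10 Gb/s.

```python
# Back-of-the-envelope check that the PCIe link width is not the bottleneck.
# Assumptions (not from the post): PCIe 2.x signalling (5 GT/s per lane,
# 8b/10b encoding); TLP/DLLP protocol overhead ignored, so these are raw
# upper bounds per direction.

GT_PER_S_PCIE2 = 5.0e9        # transfers per second per lane
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 data bits per 10 line bits


def pcie2_bandwidth_gbps(lanes: int) -> float:
    """Raw usable bandwidth in Gb/s per direction for a PCIe 2.x link."""
    return GT_PER_S_PCIE2 * ENCODING_EFFICIENCY * lanes / 1e9


if __name__ == "__main__":
    needed = 10.0  # one 10GbE port in use (assumption)
    for lanes in (8, 4, 1):
        bw = pcie2_bandwidth_gbps(lanes)
        verdict = "enough" if bw >= needed else "not enough"
        print(f"x{lanes}: {bw:.0f} Gb/s per direction, {verdict} for {needed:.0f} Gb/s")
```

Under these assumptions, x8 gives about 32 Gb/s and x4 about 16 Gb/s per direction. Either width should leave headroom for a single 10 Gb/s port.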

Am I right in thinking the problem is on the AMD machine? In the Intel machine's test, the slow writes were going to the RAMDisk on the AMD machine.

Any suggestions would be great, thanks.

iperf test

Threadripper machine as client, Intel machine as server:

Intel machine as client, Threadripper machine as server:
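To compare both directions below the SMB layer from a single machine, iperf3 can reverse the flow with `-R` (the server sends instead of the client) and print a JSON report with `-J`. This sketch assumes iperf3 rather than the older iperf2, and it assumes `iperf3 -s` is already running on the other machine. The script name and address in the usage line are hypothetical.

```python
from __future__ import annotations

import json
import subprocess
import sys


def iperf3_cmd(server: str, reverse: bool = False, seconds: int = 10) -> list[str]:
    """Build an iperf3 client command; -R makes the server do the sending."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")
    return cmd


def received_gbps(report_json: str) -> float:
    """Receiver-side throughput in Gb/s from an iperf3 -J (JSON) TCP report."""
    report = json.loads(report_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def run_both_directions(server: str) -> None:
    """Run one forward and one reverse test and print both results."""
    for reverse in (False, True):
        label = "server -> this machine" if reverse else "this machine -> server"
        result = subprocess.run(
            iperf3_cmd(server, reverse),
            capture_output=True,
            text=True,
            check=True,  # raise if iperf3 exits with an error
        )
        print(f"{label}: {received_gbps(result.stdout):.2f} Gb/s")


if __name__ == "__main__" and len(sys.argv) > 1:
    run_both_directions(sys.argv[1])
```

For example, `python iperf_both_ways.py 10.0.0.2` run on the Intel machine would test both directions. Suppose Intel-to-Threadripper is also clearly slower in raw TCP. That would suggest the problem lies below the file-sharing layer.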

System specs:

System 1 (AMD):

- Threadripper 1900X
- 32GB RAM
- RAMDisk (for testing purposes)
- Windows 10

System 2 (Intel):

- Core i9-7900X
- 32GB RAM
- RAMDisk (for testing purposes)
- Windows 10
