Hey, been a while since I have posted here.

My current setup has served me well and has been almost flawless for a good few years now. There are a few issues with it, though, and I'd like to put together some sort of roadmap for upgrading various aspects over the next year. I'm also getting an itch to upgrade.

Apologies for the long post! I probably should have broken it down into multiple posts as I upgrade each part. Basically, I could do with some guidance on which direction to take to future-proof the setup for 4-5 years.

Budget is around £4000 excluding VAT (UK) but spread out over multiple phases. Small potential to increase this depending on sale of old components, but ideally sales would fund this figure.

I'll start by listing the spec/use cases, then run through my current issues.

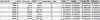

Current Hardware

- APC UPS - SMT1000RMI2U

- APC PDU ZeroU

- Dell PowerConnect 5524P

- Dell PowerConnect 5524 (stacked with above)

- MikroTik CRS317-1G-16S+RM - not used currently, this will be the core switch

- UniFi AP Pro

- Main Server running ESXi 6 - runs 24/7

  - Supermicro X9SRL-F

  - Xeon E5-1620v2

  - 128GB ECC DDR3

  - 2x M1015 HBA

  - Samsung Evo 840 120GB boot drive

  - Samsung SM961 1TB NVMe - primary datastore

  - Samsung Evo 850 1TB - local backup datastore

  - WD 6TB Red - Media storage due to storage constraints on ZFS pool

  - 12x Hitachi/HGST 3TB 5k3000 drives (might be 1 or 2 7K3000 drives mixed in)

  - Intel X520-DA2 SFP+ NIC

- Secondary server running ESXi 6 or whatever OS I need to experiment with

  - Asus Z9PE-D8 WS (with IPMI chip)

  - 2x Xeon E5-2660

  - 128GB ECC DDR3

  - Mixed old SSDs for testing

  - 3-4 Hitachi 3TB 7K3000 drives

  - Asus HD5450 (used to passthrough to VM)

  - Intel X520-DA2 SFP+ NIC

- pfSense - running in a VM with NIC passed through

  - DNS

  - OpenVPN

  - InterVLAN routing

  - DynDNS

  - Handle primary internet connection 300/20

  - Handle secondary backup internet connection (low speed)

  - Provide wireless networks for guests alongside my potentially less secure home automation/IoT devices network

- Storage on FreeNAS - M1015s passed through to VM for 3TB drives - 12x drives in RAIDZ2

  - Media storage

  - Backups

  - Some VM volumes hosted

  - Extended storage for workstations

  - Home CCTV footage (probably a few days' worth) - not being installed until June 2018

  - Large MySQL databases used during development/clients' staging sites

  - Elasticsearch storage

- Downloading services

- Running staging environments for clients - note these are publicly accessible, downtime isn't ideal but causes no financial losses

- Continuous integration server (images/software builds)

- Development environments - basically run my Docker stack on this server to reduce any load on my workstation/resolve issues with Docker for Mac

- Experimenting with new OS/environments

- Processing large data sets

- Idle power consumption currently sits around 270-300w. I would love to get this lower, but admittedly that's going to be incredibly difficult. Some rough usages per device left on 24/7:

  - Main server - 170-190w

  - Dell 5524P - 40w

  - Dell 5524 - 14w

  - APC UPS - 30w

- Currently renovating the house, which means the server gets powered down quite often to be moved around to try to protect it from the dust. This results in no internet access! Also, due to the network setup with pfSense, it takes 5-10 minutes from server startup before network access is restored!

- Also, whenever I want to tweak/update the main server, there's no internet. Somewhat annoying when trying to read online documentation or if others are in the house.

- Hard drives are all dated from 2010/2011 (few warranty replacements so might be a 2012 drive in there) - getting concerned about potential failures.

- Storage configuration could be better

- Approaching low free storage space

  - Currently there's ~24TB after parity.

  - Add the 20% free space limit for ZFS and that leaves me with ~19TB

  - Leaving me with just under 1TB free!

- Noise - mainly lack of fan control on the main server with ESXi results in them running at full speed

- Secondary server takes 5-10 minutes to boot Ubuntu (I think it's due to the amount of memory)
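To sanity-check the free-space figures in the list above, here is a rough sketch of the arithmetic in Python. The variable and function names are made up for illustration, and the inputs are simply the rounded numbers quoted above, so treat the result as approximate.

```python
# Figures quoted in the list above; names are illustrative only.
capacity_after_parity_tb = 24.0   # ~24TB after RAIDZ2 parity
reserve_fraction = 0.20           # the 20% free space rule of thumb


def usable_tb(capacity_tb: float, reserve: float) -> float:
    """Capacity left once the free-space reserve is set aside."""
    return capacity_tb * (1.0 - reserve)


usable = usable_tb(capacity_after_parity_tb, reserve_fraction)
print(f"Usable before hitting the reserve: {usable:.1f} TB")
```

That lands on roughly the ~19TB figure, which is why under 1TB free feels so tight.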

Phase 1 - Dedicated pfSense box

The most logical first step seems to be a box specifically for pfSense, with a budget ideally under £500 (ex VAT).

I'd like this to support 10GbE to maintain some level of performance with InterVLAN routing. This is mainly clients (LAN only) accessing media, but there are some cases of test environments running across multiple VLANs. The CPU could be a limiting factor here.

Any substantial network traffic of mine is already configured to avoid routing.

Obviously this is going to increase idle power consumption, but I think it will be possible to keep that increase under 30w.
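To put that 30w into perspective, here is a quick sketch that converts a constant load into energy per year. It assumes the box idles at a steady draw 24/7; the helper name is hypothetical, and I've left electricity prices out since tariffs vary.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Energy used in a year by a constant load, in kWh."""
    return watts * HOURS_PER_YEAR / 1000.0


extra_kwh = annual_kwh(30)       # the 30w ceiling mentioned above
current_low = annual_kwh(270)    # current idle range from the hardware list
current_high = annual_kwh(300)

print(f"Extra 30w: {extra_kwh:.0f} kWh/year")
print(f"Current idle: {current_low:.0f}-{current_high:.0f} kWh/year")
```

Multiply the kWh figures by your own unit rate to get a yearly cost.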

There are a few options which all come in around the £500 mark (I already have a spare SFP+ NIC). IPMI is a must. The build will also require 8GB RAM and a 1U case/fans.

- Xeon D - previous gen

- Atom C3xxx - will require SFP+ NIC

- Xeon E3 1220v6 - will require SFP+ NIC

- Xeon Bronze - overkill and probably above £500!

Questions

1) Any suggestions on alternatives here? I'm leaning more towards the Atom board but I'm unsure about its performance.

2) Is there any benefit to SATA DOM over a cheap M.2 drive?

3) Potential L3 switch suggestions?

Phase 2 - Storage upgrade

I currently have ~19TB usable. Data storage growth is around 2TB per year, although this may increase with a potential move to 4K content soon. No content gets deleted.
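As a rough guide to how much space the new pool needs, here is a simple linear projection. It is only a sketch: the ~18TB starting point is an assumption (~19TB usable minus just under 1TB free), it ignores any jump from 4K content and the media sitting on the separate 6TB drive, and the function name is made up.

```python
used_now_tb = 18.0         # assumption: ~19TB usable minus just under 1TB free
growth_tb_per_year = 2.0   # growth figure quoted above


def projected_usage_tb(used_tb: float, growth_per_year: float, years: int) -> float:
    """Linear projection of stored data after a number of years."""
    return used_tb + growth_per_year * years


# Look ahead over the 4-5 year window I'm trying to plan for.
for years in range(1, 6):
    total = projected_usage_tb(used_now_tb, growth_tb_per_year, years)
    print(f"Year {years}: ~{total:.0f} TB stored")
```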

My plan is to replace all existing 3TB drives with large capacity disks as part of a new ZFS zpool.

Drive options:

- Seagate IronWolf 12TB - ~£305 per drive

- Seagate IronWolf Pro 12TB - ~£335 per drive - unsure if the extra £30 per drive for an additional two years' warranty is worth it

- 6x 12TB in RAIDZ2 (33.3TB usable) with potential to add another 6x 12TB vdev once at capacity (66.62TB usable). This might be the most cost-effective approach if drive prices drop enough by the time additional storage is required.

- 8x 12TB in RAIDZ2 (47.56TB usable)

- 10x 12TB in RAIDZ2 (63.84TB usable)

- 12x 12TB in RAIDZ2 (76.14TB usable)
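To compare the layouts above on cost, here is a small sketch using the standard IronWolf price and the usable figures exactly as quoted (it does not recalculate them). It assumes the quoted prices are on the same VAT basis as the £4000 budget, which may not be the case, and the names are just for illustration.

```python
PRICE_PER_DRIVE_GBP = 305   # IronWolf 12TB, as quoted above
BUDGET_GBP = 4000           # total budget across all phases

# Drive count -> usable TB, copied from the list above (not recalculated here).
USABLE_TB = {6: 33.3, 8: 47.56, 10: 63.84, 12: 76.14}


def drive_cost_gbp(drives: int) -> int:
    """Total cost of a given number of drives at the quoted price."""
    return drives * PRICE_PER_DRIVE_GBP


for drives, usable in USABLE_TB.items():
    cost = drive_cost_gbp(drives)
    print(f"{drives}x 12TB: £{cost} total, "
          f"£{cost / usable:.2f} per usable TB, "
          f"£{BUDGET_GBP - cost} of budget left")
```

Bear in mind the budget also has to cover the other phases, so the "budget left" column is the number to watch.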

4) Given the varied use cases above, perhaps slightly lower capacity with more drives would be beneficial to performance? Although power consumption is a concern.

5) Should I be looking at smaller vdevs with such high capacity drives?

6) Are there still recommended drive configurations with ZFS, e.g. 8 drives per vdev?

7) Assuming I have read the documentation correctly, it's possible to simply add vdevs to a pool to increase available storage space?

8) Is RAIDZ2 still suitable for such high capacity drives or should I be looking at RAIDZ3?

Phase 3 - Storage performance

Currently a lot of the running VMs are stored on the SM961 1TB drive. These are backed up nightly to the ZFS pool, then backed up off site a few hours later. This leaves me with the potential for 24 hours' worth of data loss if the drive fails! This 1TB of storage surprisingly doesn't go very far, which means quite a bit of manual moving around of VMs.

The current FreeNAS configuration has little to no performance optimisation, just 48GB of RAM which should be used by the ARC.

After doing some research into ZFS, it looks like there are quite a few options which I could utilise, specifically the L2ARC and ZIL.

Questions

9) Is it possible/sensible to store these VMs on my primary data pool with the SM961 drive added as an L2ARC?

9a) Should I be considering a different NVMe drive given the increase in writes?

10) What drive would be best for a ZIL?

Looking here https://www.servethehome.com/exploring-best-zfs-zil-slog-ssd-intel-optane-nand/

Seems like one of the Intel Optane offerings will be best such as the 32GB M.2.

11) Can the L2ARC be configured for specific datasets only, e.g. VM storage?

Seems incredibly pointless having the media (films) ever stored in cache.

Phase 4 - Server upgrade

I think the next phase would be to upgrade the primary server, then retire the secondary server (I never need 16 cores of processing power!), replacing it with the Xeon E5-1620v2 board. This can be for another day!

--

Thanks to anyone who takes the time to read my ramblings. Any input/suggestions would be appreciated.